Back when I first got into AI, I relied on tools like ChatGPT. It was fast and fun—until I hit the limits. I couldn't run anything offline. I worried about privacy. I hated waiting on the cloud. That's when I discovered a better way: using a local LLM.

It's like having ChatGPT right on your computer. Once it's set up, it runs fully offline, with no internet and no cloud. You control everything. No company sees your data. In this guide, I'll show you what local LLMs really are, why they matter, and how you can use one, even as a complete beginner.


What Is a Local LLM?

A local LLM is a large language model that runs right on your own device—your laptop, desktop, or even a home server. No cloud. No internet. No outside company involved.

It's kind of like downloading Spotify music to your phone. Instead of streaming from the cloud, you're keeping the brain of the AI right in your hands.

When you use ChatGPT online, your data goes through someone else's servers. But with a local LLM setup, everything happens on your machine. You ask questions, and the answers are generated locally, without sending anything out.

A local AI model gives you full control. You decide what model to use, when to update it, and how it runs. You don't have to wait for server lag or worry about hidden data sharing. In short: you own the model. You're not just renting it.

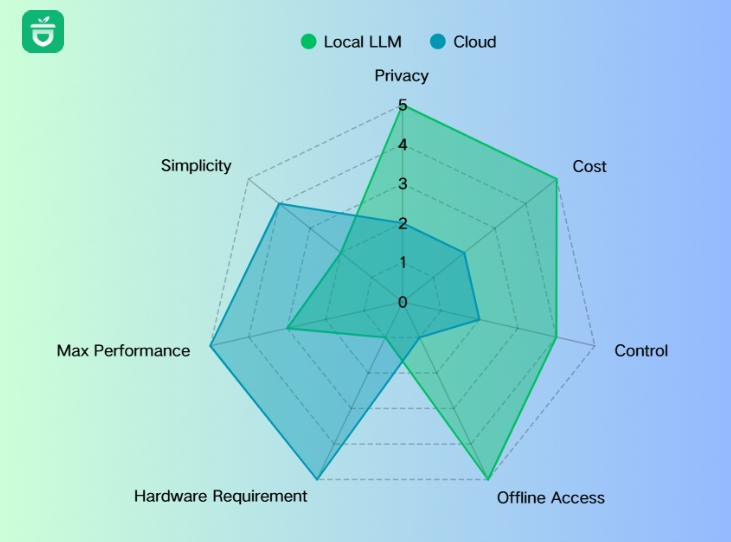

What's the Difference Between a Local LLM and a Cloud LLM?

Now that you've learned what it means to run an LLM locally, you might wonder: how does it really compare to cloud-based AI services? Which one fits your needs best? Let's break down the main differences.

| Factor | Local LLM | Cloud LLM | Notes / Impact for You |
|---|---|---|---|
| Privacy | Full control, data stays on your device | Data sent to provider's servers | Best for sensitive or personal data |
| Control | You manage model choice and settings | Provider controls updates and policies | Local gives you more flexibility and autonomy |
| Cost | Mostly one-time hardware and setup costs | Pay per use or subscription fees | Local saves money long-term if used often |
| Speed / Latency | No network delay; speed depends on your hardware | Depends on internet speed and server load | Local avoids server lag, but a weak GPU can be slower than the cloud |
| Internet Required | No, works offline | Yes, requires stable connection | Local is great for travel or low-connectivity areas |
| Model Updates | Manual updates by you | Automatic and frequent updates | Cloud gets latest features; local offers more control |
| Ease of Use | More setup and tech skills needed | Easy to start, minimal setup | Beginners may prefer cloud at first |
| Scalability | Limited by your hardware | Automatically scales to demand | Cloud better for large teams or high demand |

Cloud LLMs, like OpenAI's ChatGPT or Google Gemini, run on huge servers you access through the internet. You send your prompts online, and the servers return answers. These services give you access to the most advanced models without needing powerful hardware.

But there are some downsides. Cloud AI costs add up fast, usually based on how much you use it. Your data must travel to the cloud, which raises privacy concerns. You need a stable internet connection, and your control over the model is limited—you rely on the provider's updates and rules.

On the other hand, local LLMs run right on your own device. Your data never leaves your machine, so privacy is solid. After buying or having the right hardware, running the model is mostly free—no monthly fees or per-use charges. You can work offline anytime and customize your model's behavior fully.

Here's a quick summary to help you compare:

Scores are based on a 5-point scale from the user's perspective, where 5 means high/good and 1 means low/poor. For hardware requirement, 5 indicates low demand on the user's device; for cost, 5 means lower ongoing expenses.

- Choose cloud LLM if you want the latest, most powerful AI without managing hardware. Good for teams, businesses, or casual users who don't want to deal with tech setup.

- Choose local LLM deployment if privacy matters most, you want no ongoing fees, need offline access, or want full control over customization. Ideal for developers, hobbyists, and privacy-conscious users with decent hardware.

Sometimes, the smartest setup is a mix. With Nut Studio, you can run big models like Qwen3 fully offline for private tasks—or switch to cloud mode when you need web search or extra muscle. It’s all in one app, and you stay in control.


[Local LLM Use Cases] What Do People Run Local LLMs For?

After learning how local LLMs give you more privacy and control than cloud models, the next big question is: what are people actually doing with them?

I dug through Reddit and AI forums to find real user stories. The answers surprised me — not just developers and techies, but students, therapists, roleplayers, and regular folks are all using LLMs locally in powerful and creative ways. Let me show you the most popular local LLM use cases, straight from real users.

Summary Table of Real Local LLM Use Cases

| Use Case | Example Task | Privacy Benefit |
|---|---|---|
| Psychology & Support | Journaling, late-night talk, emotion processing | No cloud logs of sensitive chats |
| Finance Assistant | Budget summary, RSS news reordering | Personal finance stays offline |
| Smart Home Assistant | Device control, note-taking, photo sorting | No upload of images or metadata |
| Coding & Dev Help | Debugging, fine-tuning, secret-safe scripting | API keys stay on device |
| Roleplay & Creativity | Uncensored character chats, script writing | No filter, no monthly fee |
| Academic & Legal Research | Government doc analysis, PhD proofreading | Sensitive content never leaked |

1 Emotional Support & Therapy Helpers

Many users turn to local LLMs when they need someone (or something) to talk to — privately.

One Redditor said they've been building a model to support grief and mental health therapy. Their model helps patients "talk their way through a solution", even when a real therapist isn't available. Because it's local, everything stays confidential — no servers, no third parties.

"Medically speaking, privacy is everything… Local LLM is a guaranteed situation of privacy."

Another user added:

"I bully my AI sometimes and feel bad for it later — but it helps me process emotions."

2 Budgeting and Finance Analysis

If you're tracking your spending or planning big purchases, local LLMs can act as your offline financial assistant. Several users rely on models to sort RSS feeds for financial news or to summarize personal finance reports.

One user shared:

"I have a program where the local LLM reads my RSS feed and reorders it based on my interests before I open it."

That's like having your own local AI for finances, one that sorts information by your interests and serves only you. If you upload receipts, invoices, or tax forms, local models can summarize and search them without ever sending them to the cloud.
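The RSS setup in the quote above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: `ask_model` is a hypothetical stand-in for however you call your local model, the prompt wording is illustrative, and the model is assumed to reply with a relevance number from 0 to 10.

```python
import re
from typing import Callable, List

def build_prompt(headline: str, interests: List[str]) -> str:
    """Ask the model to rate one headline against the reader's interests."""
    return (
        "Rate from 0 to 10 how relevant this headline is to someone interested in "
        + ", ".join(interests)
        + ". Reply with a single number.\nHeadline: "
        + headline
    )

def parse_score(reply: str) -> float:
    """Take the first number in the reply, capped at 10; unparseable replies score 0."""
    match = re.search(r"\d+(?:\.\d+)?", reply)
    if match is None:
        return 0.0
    return min(float(match.group()), 10.0)

def reorder_feed(headlines: List[str], interests: List[str],
                 ask_model: Callable[[str], str]) -> List[str]:
    """Sort headlines from most to least relevant; ties keep their feed order."""
    scores = {h: parse_score(ask_model(build_prompt(h, interests))) for h in headlines}
    return sorted(headlines, key=lambda h: scores[h], reverse=True)

if __name__ == "__main__":
    def fake_model(prompt: str) -> str:
        # Hypothetical stand-in for a real local model: it "likes" ETF news.
        return "9" if "ETF" in prompt else "2"

    print(reorder_feed(["Weather update", "New ETF launches"], ["investing"], fake_model))
```

Keeping the model call behind a plain function makes it easy to swap the fake model for a real local one without touching the sorting logic.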

Nut Studio supports file uploads (PDF, Excel, etc.) to turn your data into a smart offline knowledge base.
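Under the hood, a document knowledge base usually comes down to retrieval: split files into chunks, find the chunks most similar to a question, and pass only those to the model. The sketch below is a deliberately simple illustration that uses plain word-count cosine similarity in place of the embedding models real tools typically use. It is not how Nut Studio specifically implements this, and all function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List

def tokenize(text: str) -> Counter:
    """Lowercase word counts: a crude stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def chunk(text: str, size: int = 50) -> List[str]:
    """Split a document into pieces of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def top_chunks(question: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the question."""
    q = tokenize(question)
    return sorted(chunks, key=lambda c: cosine(q, tokenize(c)), reverse=True)[:k]

def build_rag_prompt(question: str, chunks: List[str], k: int = 2) -> str:
    """Paste only the most relevant chunks into the prompt for the local model."""
    context = "\n---\n".join(top_chunks(question, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

In a real setup, the chunks would come from running `chunk()` over text extracted from your PDFs or spreadsheets, and the resulting prompt would go to whichever local model you use. Nothing in this pipeline needs a network connection.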

3 Smart Home Assistant and Productivity Tools

Several Redditors use local LLMs as home assistants to power smart tools around the house. One person used a vision LLM to read and summarize 20 years' worth of personal photos and scanned documents, including passport photos, receipts, and private notes.

"Vision-LLM locally means I don't worry about images with data I don't want to share… like payment info or people who didn't give consent."

Others use local models to help with:

- Managing to-do lists

- Voice commands for smart devices

- Taking quick notes without cloud sync

4 Coding Without Leaks

If you're a developer or hobby coder, you'll love this one. Running a local LLM for coding means your code, secrets, and API keys never leave your device.

"I don't worry about putting API keys in the prompt. It all runs at home and at work, fully offline."

Others shared that they fine-tune models for dev tasks or even use uncensored models to get around cloud filter restrictions, which is especially useful in research or niche fields.

5 Roleplay and Creative Exploration

Some of the most enthusiastic users? Roleplayers.

With a local LLM, they enjoy unrestricted, uncensored roleplay. No filters, no usage limits, no need to pay subscriptions.

"I use it for roleplay and simple tasks… and I don't have to worry about censorship or filters."

One person even said they use it for fun chats, story writing, or to explore characters that commercial tools don't allow.

6 Academic & Research Projects

Researchers also benefit from running LLMs locally, especially when working with sensitive topics or datasets.

One Redditor shared that they used a local uncensored model to study official Canadian firearm safety documents — something ChatGPT and Gemini refused to process.

"In a few hours of coding, I had my own study assistant… fully local."

Another PhD student uses a local model to translate and proofread academic papers:

"I use it mainly for my PhD research texts. I don't want to feed that into online tools."

If that's your use case too, see: Best LLM for Translation

What's the Best Local LLM Available in 2025?

Now that you've seen all the powerful use cases for running AI locally, the next question is: Which local LLM models are actually worth using in 2025?

Good news: 2025 is a golden year for open-source local LLMs. They're faster, smarter, and more accessible than ever. Thanks to resources like the Hugging Face Open LLM Leaderboard, you now have dozens of strong models to choose from, and many of them can run on a mid-range GPU or laptop.

In fact, this focus on efficiency has led to the rise of Small Language Models (SLMs), which are optimized to run on consumer hardware. To understand how these compact models differ from their larger counterparts, check out our detailed guide on SLM vs LLM.

2025 Best Local LLM Models Comparison:

Here's a curated selection of popular, production-ready local LLM models that professionals are deploying for private, offline tasks:

| Model Name | Size | Strengths | VRAM Needed | Open Source? |
|---|---|---|---|---|
| Qwen 7B | 7B | Multilingual, accurate | 12GB | ✅ |
| Mistral Instruct | 7B | Fast, responsive, chat-focused | 10GB | ✅ |
| LLaMA 3 8B | 8B | Balanced, versatile conversations | 14GB | ✅ |
| Yi-1.5 9B | 9B | Excellent writing & creativity | 14GB | ✅ |
| DeepSeek-V2-Lite | 16B | Strong logic, math & code | 24GB+ | ✅ |

As the table above shows, there's no single "best" local model — it all comes down to what you want to do, and what your hardware can handle.

- If you're working across multiple languages or need accurate translation, Qwen 7B is surprisingly capable — especially given its small footprint.

- For everyday conversations or local assistants, Mistral Instruct stands out with its snappy responses and low VRAM demands.

- Looking for something more balanced? LLaMA 3 8B offers a strong middle ground for both casual and task-oriented use.

- Writers and roleplayers tend to prefer Yi-1.5, especially for long-form content with emotional tone or narrative flow.

- And if you're coding, debugging, or building something logic-heavy, DeepSeek-V2 is worth exploring — though you'll want a beefier GPU.

We've covered the hands-on setup process for many of these in separate guides, so feel free to explore them if a specific model caught your eye — whether it's installing Llama, getting started with Mistral, or setting up DeepSeek for local coding. All of them are open-source and can be run with tools like Nut Studio or Ollama, depending on your preference.


What Are the Local LLM Hardware Requirements?

You've probably found a model or two that looks perfect for your needs — but before hitting that install button, there's one more question worth asking:

Will it actually run well on your machine?

Don't worry — you don't need a supercomputer to get started with local LLMs. Many small models today are surprisingly efficient, and even laptops with mid-range GPUs can handle them well. That said, understanding the basic local LLM hardware requirements will help you avoid slowdowns, crashes, or wasted downloads.

Before running a local model, do this quick 3-step check:

| Component | Minimum Recommended | Why It Matters |
|---|---|---|
| GPU | ≥ 8GB VRAM | LLMs mostly run on GPU. More VRAM = faster response & larger model support |
| RAM | ≥ 16GB | Helps load models & context smoothly, especially for multitasking |
| Storage (SSD) | ≥ 20GB free | Models can be big (especially unquantized ones); SSD is key for speed |

You can still run models on CPU-only systems, but performance will be much slower and limited to smaller quantized models (for example, GGUF files with Q4_K_M quantization).
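If you'd rather run the three checks from the table yourself, a short script can do it. This is a rough sketch under assumptions: RAM is read through `os.sysconf`, which works on Linux and most Unix-like systems but not on Windows; VRAM is queried through `nvidia-smi`, so it only covers NVIDIA GPUs (Apple Silicon's shared memory is not detected this way); and the thresholds are copied from the table above.

```python
import os
import shutil
import subprocess
from typing import Dict, List, Optional

# Minimums from the table above, in decimal gigabytes (GB).
MINIMUMS = {"vram_gb": 8.0, "ram_gb": 16.0, "free_disk_gb": 20.0}

def total_ram_gb() -> Optional[float]:
    """Physical RAM via os.sysconf; None where that is unsupported (e.g. Windows)."""
    try:
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9
    except (AttributeError, ValueError, OSError):
        return None

def free_disk_gb(path: str = ".") -> float:
    """Free space on the drive holding `path` (where you'd store model files)."""
    return shutil.disk_usage(path).free / 1e9

def nvidia_vram_gb() -> Optional[float]:
    """Memory of the largest NVIDIA GPU via nvidia-smi; None if it can't be queried."""
    try:
        out = subprocess.run(
            ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        ).stdout
        mib = [float(line) for line in out.split()]
    except (OSError, subprocess.CalledProcessError, ValueError):
        return None
    return max(mib) * 2**20 / 1e9 if mib else None  # nvidia-smi reports MiB

def check(specs: Dict[str, Optional[float]]) -> List[str]:
    """List problems; an empty list means every detected spec meets the minimum."""
    problems = []
    for key, minimum in MINIMUMS.items():
        value = specs.get(key)
        if value is None:
            problems.append(f"{key}: not detected, please check manually")
        elif value < minimum:
            problems.append(f"{key}: {value:.1f} GB found, {minimum:.0f} GB recommended")
    return problems

if __name__ == "__main__":
    specs = {"vram_gb": nvidia_vram_gb(), "ram_gb": total_ram_gb(), "free_disk_gb": free_disk_gb()}
    for line in check(specs) or ["All three minimums look OK."]:
        print(line)
```

Anything reported as "not detected" simply means this script couldn't read it on your platform, not that the hardware is missing.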

Here's a breakdown of what GPUs work best at different budget levels:

| Tier | Example GPUs | What You Can Expect |
|---|---|---|
| Beginner | RTX 3060, Mac M1/M2 | Run 7B models like Qwen or Mistral at decent speed |
| Intermediate | RTX 4070, 4080 | Handle 13B+ models smoothly, great for multitask agents |
| Power User | RTX 4090, dual 3090, etc. | Real-time generation, code LLMs, 16B+ models, multitasking like a beast |
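A back-of-the-envelope calculation shows why those tiers line up with model sizes: the weights alone take roughly parameters × bits per weight ÷ 8 bytes. The sketch below adds a flat 20% on top for the context cache and runtime buffers. That 20% figure is an assumption for illustration; real usage grows with context length and varies by tool.

```python
def estimate_vram_gb(params_billion: float, bits_per_weight: float,
                     overhead: float = 0.20) -> float:
    """Rough memory to load a model: weight bytes plus a flat overhead fraction."""
    # 1e9 parameters * (bits / 8) bytes each = that many GB of weights.
    weight_gb = params_billion * bits_per_weight / 8
    return weight_gb * (1 + overhead)

if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"7B model at {bits}-bit: ~{estimate_vram_gb(7, bits):.1f} GB")
```

By this estimate, a 7B model at 16-bit precision (about 14 GB of weights alone) overflows an 8 GB card, while a 4-bit version fits with room to spare.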

If your GPU has less than 8GB VRAM, don't worry: many models come in quantized formats, meaning their weights have been compressed to lower precision with only a small loss in output quality. These include 4-bit and 5-bit versions, which run faster and fit on smaller cards or even CPUs.
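To make "compressed" concrete, here is a toy 4-bit quantizer. Each block of weights shares one scale factor, every weight is rounded to a small integer between -7 and 7, and the integers are multiplied back by the scale when the model runs. This is a simplified symmetric scheme chosen for illustration; real GGUF types such as Q4_K_M use more elaborate block and scale layouts.

```python
from typing import List, Tuple

def quantize_block(weights: List[float], bits: int = 4) -> Tuple[float, List[int]]:
    """Symmetric quantization: one float scale plus a small integer per weight."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit
    scale = max(abs(w) for w in weights) / qmax or 1.0  # avoid dividing by zero
    return scale, [round(w / scale) for w in weights]

def dequantize_block(scale: float, q: List[int]) -> List[float]:
    """Approximate the original weights from the stored integers."""
    return [scale * v for v in q]

if __name__ == "__main__":
    weights = [0.12, -0.50, 0.33, 0.07, -0.21, 0.49]
    scale, q = quantize_block(weights)
    restored = dequantize_block(scale, q)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print("integers:", q)
    print("max error:", round(worst, 4))
```

Each weight now needs 4 bits instead of 16, and the rounding error per weight is at most half the scale, which is why quality drops only slightly.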

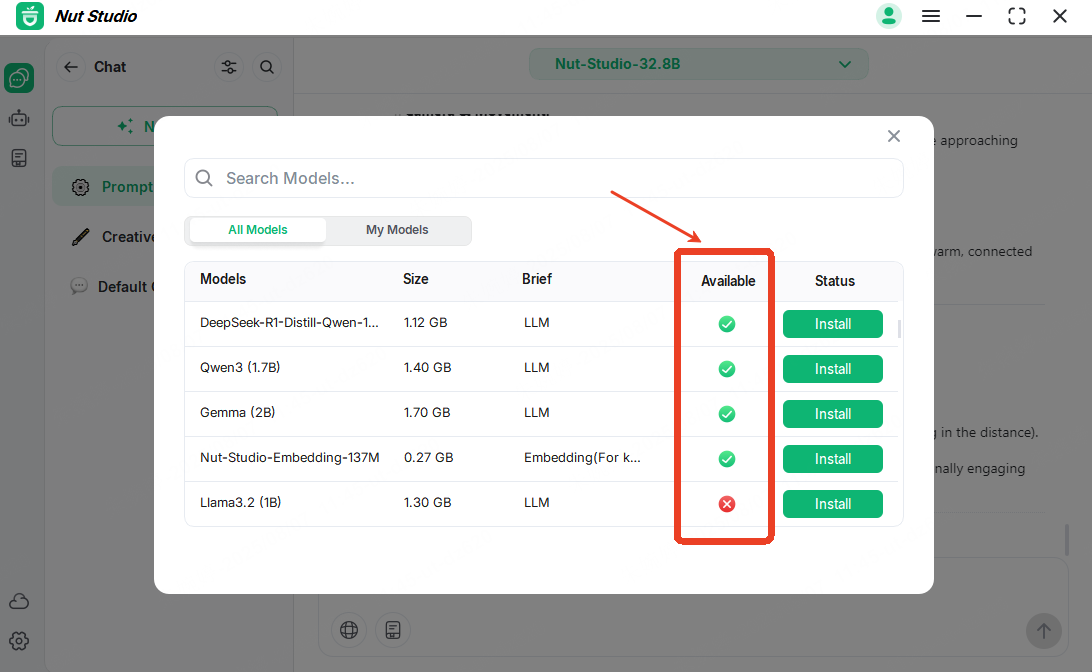

If you're not a hardware expert (most of us aren't), there's a simple solution:

Nut Studio auto-detects your system specs when you open the app and shows you, at a glance, which models your device can run. No guesswork, no trial and error.

This is especially helpful if you're switching between machines or want to build your personal offline AI lab without spending hours benchmarking GPUs.

How to Run an LLM Locally Without Coding?

So now you know what a local LLM is, why it matters, and where it beats the cloud. But here's the real question: How do you actually run an LLM locally — without writing a single line of code?

I tried everything from developer-focused apps to beginner tools with simple UIs. After testing them hands-on, I found four tools that cover everything from one-click setups to full customization.

Let's compare the best options you can use today:

| Tool | Best For | Key Features | Platforms | Newbie Score (⭐) |
|---|---|---|---|---|
| Nut Studio | Beginners who want one-click AI use | 50+ models, agent templates, doc upload, no setup | Windows | ⭐⭐⭐⭐⭐ |
| LM Studio | Tinkerers who want to tweak model settings | Custom params, model search, model compatibility check | Windows, Mac, Linux | ⭐⭐⭐⭐ |
| Ollama | Developers & hobbyists | Model import, mobile/web integrations | Mac (best), Linux, Windows | ⭐⭐⭐ |
| Jan | Offline open-source fans | 70+ models, full local control, open source | Mac, Windows, Linux | ⭐⭐⭐⭐ |

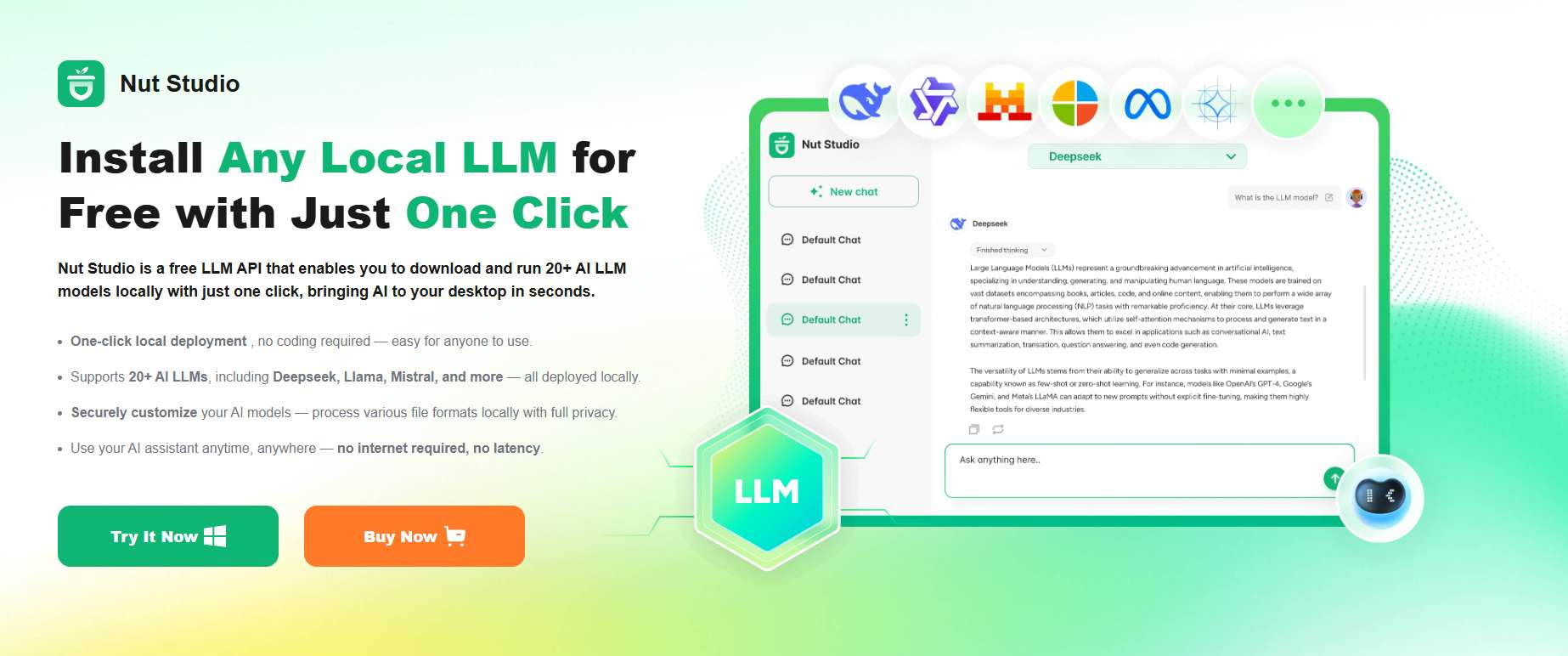

1 [Recommended] Nut Studio: One-Click Local LLM for Beginners

If you're just starting out and want the easiest way to run an LLM locally, I highly recommend Nut Studio. It's designed for non-coders, and it works right out of the box.

You can run over 50 local LLM models — including 30 optimized GGUF versions — with just a few clicks. No command line. No setup. Just download, pick a model, and start chatting.

Why I love Nut Studio:

- You can build your own AI agents using templates (like "life coach" or "writing buddy").

- It lets you upload documents (PDFs, DOCX, PowerPoints…) so your AI can read and respond based on your own knowledge base.

- Nut Studio auto-checks your PC specs and tells you if the model will work — perfect for beginners with no idea what "VRAM" means.

Personally, this was the first time I felt like running an LLM locally didn’t require a degree in computer science. You can download Nut Studio here and try it yourself.

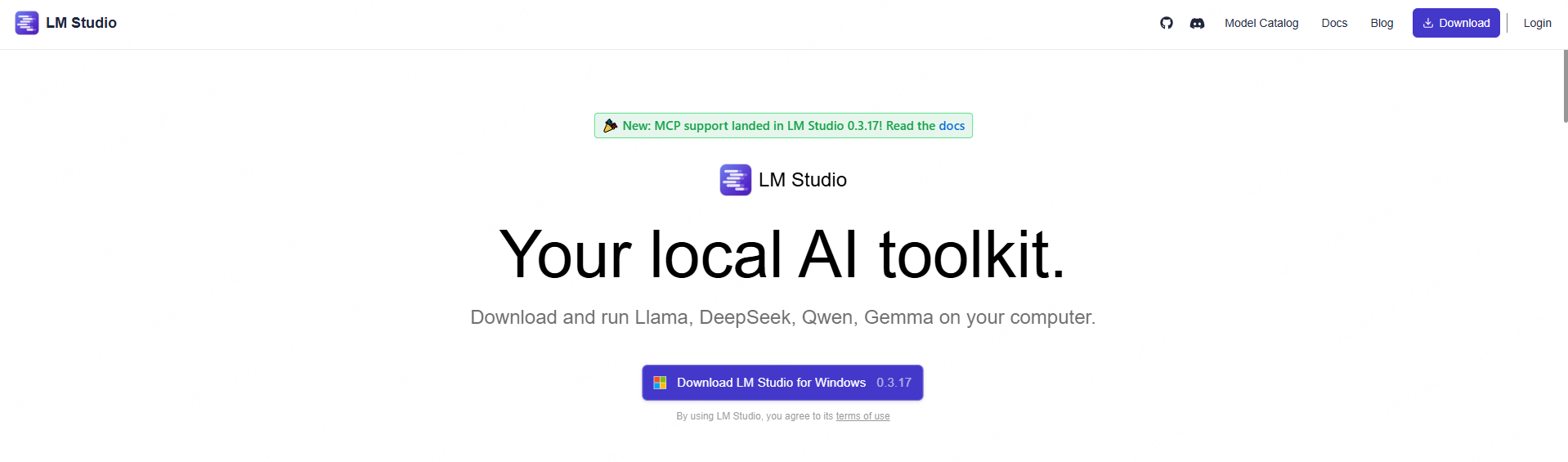

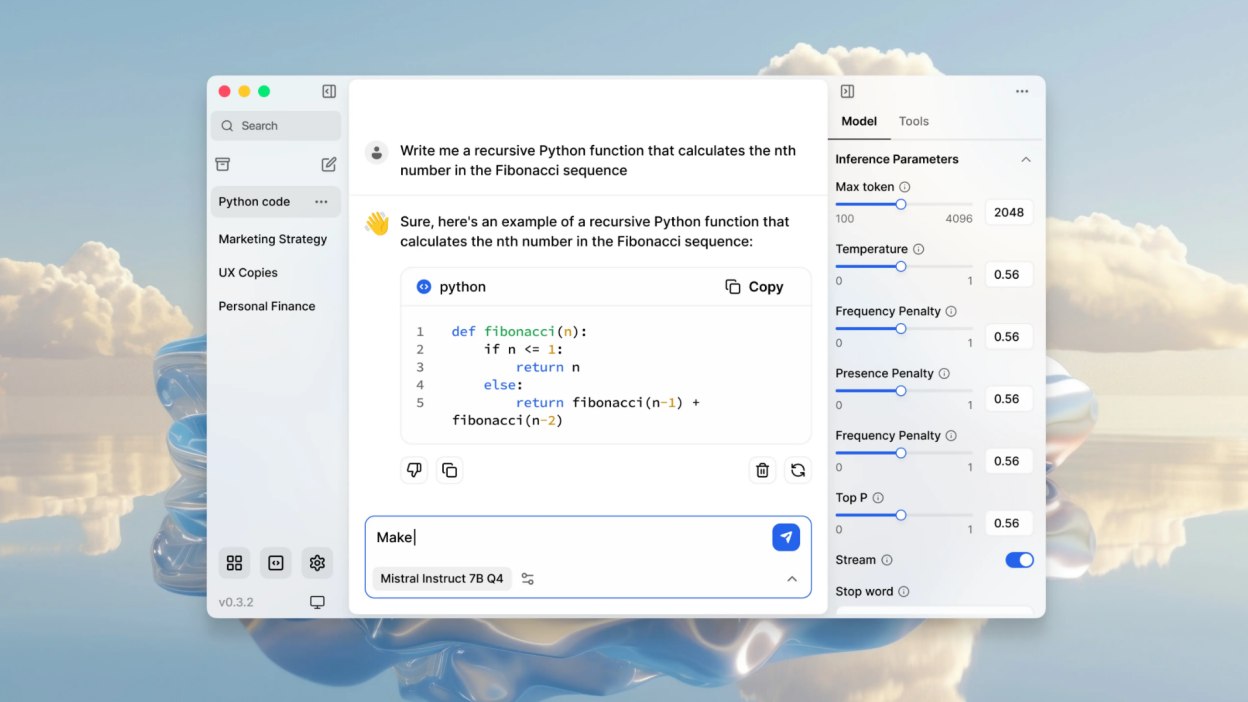

2 LM Studio: Great for Tweaking Model Settings

LM Studio is another great tool for running LLMs locally, especially if you like adjusting temperature, top-p, and token settings.
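If temperature and top-p are unfamiliar, the sketch below shows what they do to the model's choice of the next word. Temperature rescales the model's scores before they become probabilities (lower means more predictable), and top-p keeps only the smallest set of most likely tokens whose probabilities add up to p. This is a textbook illustration with made-up scores, not LM Studio's internal code.

```python
import math
import random
from typing import Dict, Optional

def sample_next_token(logits: Dict[str, float], temperature: float = 0.8,
                      top_p: float = 0.9, rng: Optional[random.Random] = None) -> str:
    """Pick one token using temperature scaling followed by top-p (nucleus) filtering."""
    rng = rng or random.Random()
    if temperature <= 0:  # treat 0 as greedy decoding: always the top token
        return max(logits, key=logits.get)
    # Softmax with temperature (subtracting the max keeps exp() numerically stable).
    m = max(logits.values())
    weights = {t: math.exp((s - m) / temperature) for t, s in logits.items()}
    total = sum(weights.values())
    probs = sorted(((w / total, t) for t, w in weights.items()), reverse=True)
    # Keep the smallest prefix of most likely tokens whose mass reaches top_p.
    nucleus, mass = [], 0.0
    for p, t in probs:
        nucleus.append((p, t))
        mass += p
        if mass >= top_p:
            break
    # Sample from the nucleus, renormalized to its total mass.
    r = rng.random() * mass
    for p, t in nucleus:
        r -= p
        if r <= 0:
            return t
    return nucleus[-1][1]
```

For example, with scores `{"cat": 2.0, "dog": 1.0, "car": -1.0}`, a temperature of 0 always returns "cat", while a high top-p lets "dog" appear some of the time. Sliding these controls in LM Studio changes the same trade-off between predictable and varied output.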

It supports models in GGUF format from big names like DeepSeek, Mistral, and Phi. It also auto-detects your system's GPU and RAM, and only shows models that will actually run on your machine.

Top features include:

- Chat history and prompt saving

- Parameter hint popups for learning on the go

- Clean UI that looks like ChatGPT

- Cross-platform support (Mac, Windows, Linux)

If you want full control over local LLM deployment, but don't want to code, this is a great option. It's more advanced than Nut Studio but still beginner-friendly.

3 Ollama: For Developers Who Want Control

Ollama is popular among open-source developers. It's powerful, flexible, and very customizable — but that means it's also a bit more complex.

You can import models from Hugging Face or PyTorch and run them with a simple command like ollama run llama2. It also supports mobile app integration and has an active developer community.
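Once a model is downloaded, Ollama also serves a local HTTP API (by default on port 11434), which is how other apps integrate with it. Here is a minimal sketch using only Python's standard library. It assumes Ollama is running on its default port and that the `llama2` model from the command above has already been pulled.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint

def build_request(prompt: str, model: str = "llama2") -> urllib.request.Request:
    """Prepare a non-streaming generate request for a locally running Ollama server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(OLLAMA_URL, data=body,
                                  headers={"Content-Type": "application/json"},
                                  method="POST")

def generate(prompt: str, model: str = "llama2", timeout: float = 120.0) -> str:
    """Send the prompt and return the model's full reply text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]

if __name__ == "__main__":
    try:
        print(generate("Explain what a local LLM is in one sentence."))
    except OSError as err:  # URLError (and HTTPError) are subclasses of OSError
        print(f"Could not reach Ollama at {OLLAMA_URL}: {err}")
```

Because the request goes to `localhost`, the prompt and reply never leave your machine.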

Main strengths:

- Huge model library

- Integration with external apps and databases

- Works offline with no need for API keys

But here's the catch: If you're not comfortable with command-line tools, Ollama might feel a bit intimidating at first. It's still one of the best local LLM tools out there, just not the most beginner-friendly.

4 Jan: A ChatGPT-Style App That Works Offline

Jan is like an open-source, offline version of ChatGPT. It works on Mac, Windows, and Linux — and it's totally free.

You get a bundle of preloaded local LLM models (over 70!) and can also import more from Hugging Face. Everything stays on your computer — your chats, model settings, and documents. That makes Jan a great pick if you care a lot about privacy.

Best features:

- Simple interface with multi-model support

- Local-only mode (no hidden data sharing)

- Extension support (like TensorRT, Inference Nitro)

I found Jan smooth and well-documented. However, it performs best on Apple Silicon Macs. If you're on Windows, you might find Nut Studio faster and easier to use.

FAQs About Running LLM Locally

1 Does a Local LLM Send Data to the Internet?

No. A local LLM runs fully on your device and does not send information online. All your chats and files stay private. Be careful of tools that look local but secretly connect to APIs. Choose trusted platforms like Nut Studio, which guarantee private LLM use with full offline support.

2 Can I Use Multiple GPUs to Speed Up Local LLMs?

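Yes. You can spread a local LLM across multiple GPUs to fit bigger models and boost speed. Tools like vLLM and ExLlama support multi-GPU inference. For example, Mistral 7B can run faster on two GPUs than on one, although for a model that already fits on a single card the gain is often modest, since the GPUs must exchange data at every step.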
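One common way to spread a model across cards is to give each GPU a share of the model's layers in proportion to its memory (llama.cpp, for example, exposes a proportion setting like this through its `--tensor-split` option). The sketch below shows only that bookkeeping, with a hypothetical 32-layer model and hypothetical VRAM sizes; it does not load or run a model.

```python
from typing import List

def split_layers(num_layers: int, vram_gb: List[float]) -> List[int]:
    """Give each GPU a number of layers proportional to its VRAM (largest-remainder rounding)."""
    total = sum(vram_gb)
    exact = [num_layers * v / total for v in vram_gb]
    counts = [int(x) for x in exact]
    # Hand out the layers lost to rounding down, biggest fractional part first.
    leftovers = num_layers - sum(counts)
    order = sorted(range(len(exact)), key=lambda i: exact[i] - counts[i], reverse=True)
    for i in order[:leftovers]:
        counts[i] += 1
    return counts

def layer_ranges(counts: List[int]) -> List[range]:
    """Turn per-GPU counts into consecutive layer index ranges."""
    ranges, start = [], 0
    for c in counts:
        ranges.append(range(start, start + c))
        start += c
    return ranges

if __name__ == "__main__":
    counts = split_layers(32, [24.0, 12.0])  # hypothetical 24 GB + 12 GB pair
    for gpu, r in enumerate(layer_ranges(counts)):
        print(f"GPU {gpu}: layers {r.start}-{r.stop - 1}")
```

Splitting by layers lets a model that is too big for one card run at all; whether it also runs faster depends on how quickly the GPUs can pass data to each other.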

3 Can I use local LLMs fully offline?

Yes. Once installed, a local LLM works without internet. You can chat, generate text, and use documents completely offline. Tools like Nut Studio and Jan support full offline use — perfect for private tasks or low-connectivity environments.

Conclusion

Running a local LLM is no longer just for developers or tech pros. With the right tools, anyone can set up and use an AI model on their own device: fully offline, private, and under their control. Whether you want to run an LLM locally for privacy, speed, or full customization, there's a tool that fits your needs. For most beginners, Nut Studio is the easiest way to get started. It supports 50+ local LLM models, works out of the box, and helps you build agents, knowledge bases, and more, all without coding.
