Most guides on how to run Mistral 7B locally are filled with confusing steps, long code blocks, and missing files. I've tested those methods and given up more than once. That's why I put together this no-fluff guide that actually works.

If you're new to AI or just want a simple way to run Mistral locally on your own computer, this is for you. On Windows, there are no terminal tricks and no Python installs. On Mac, you only need a couple of Terminal commands. Either way, it's a clear, beginner-friendly setup.


What Is Mistral 7B

Mistral 7B is a small but powerful model built by Mistral AI. Its weights are openly released under the Apache 2.0 license, and it's lightweight enough to run fast, right on your laptop. Think of it as a mini ChatGPT you can keep on your own device.

This model has roughly 7 billion parameters, but don't let the size fool you. When I first tried it, I was surprised by how well it handled real tasks like writing blog posts, summarizing long PDFs, and even translation. It's also one of the best LLMs for coding I've tested locally.

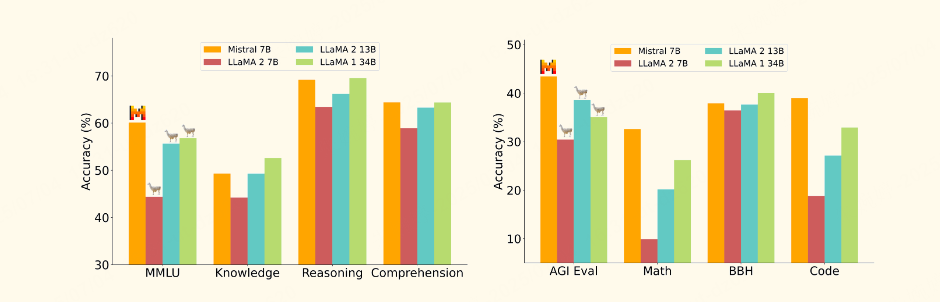

In Mistral AI's published benchmarks, Mistral 7B outperforms Llama 2 13B on every benchmark tested. It even beats Llama 1 34B on many reasoning and coding tests, while using less than a third of the memory. In real use, this means you get results comparable to much larger models in a lightweight, local setup. It responds quickly and doesn't need the internet.

That's why so many people now want a Mistral local LLM setup. It gives you privacy, speed, and full control. If you're wondering whether it's worth it to run Mistral 7B locally, the answer is yes—especially if you want solid performance without cloud limits.

Benchmark source: Mistral AI

How to Run Mistral 7B Locally on Windows and Mac

So now you know what Mistral 7B can do—but how do you actually run Mistral locally without writing code or setting up complicated tools? Let's break it down by platform.

1 [Best for Beginners] Run Mistral 7B Locally on Windows with Nut Studio

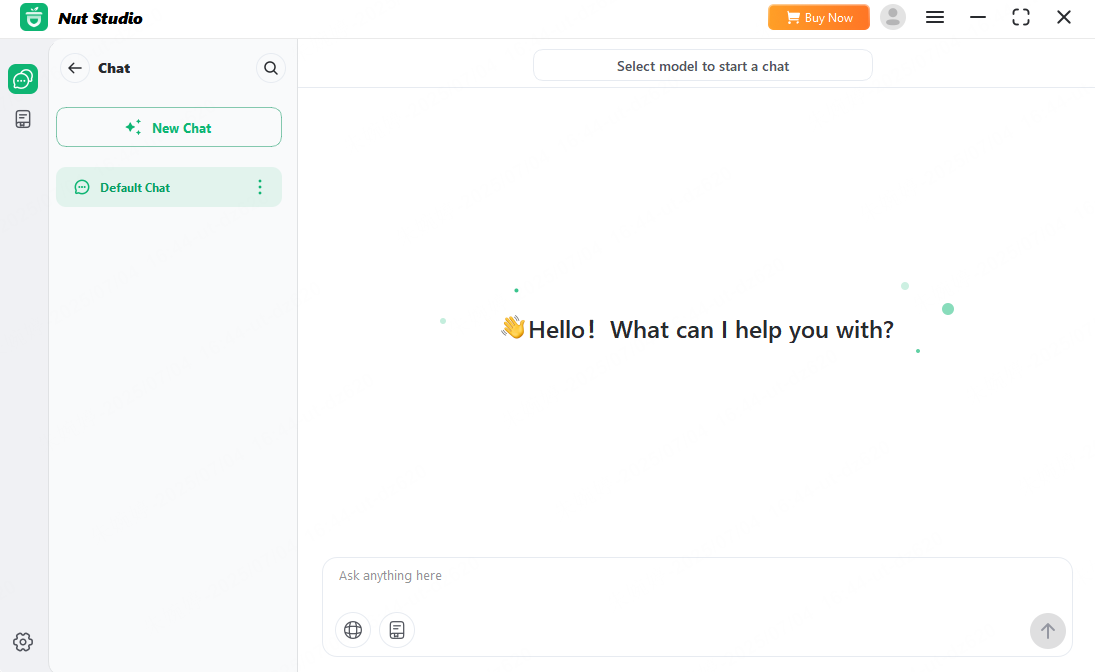

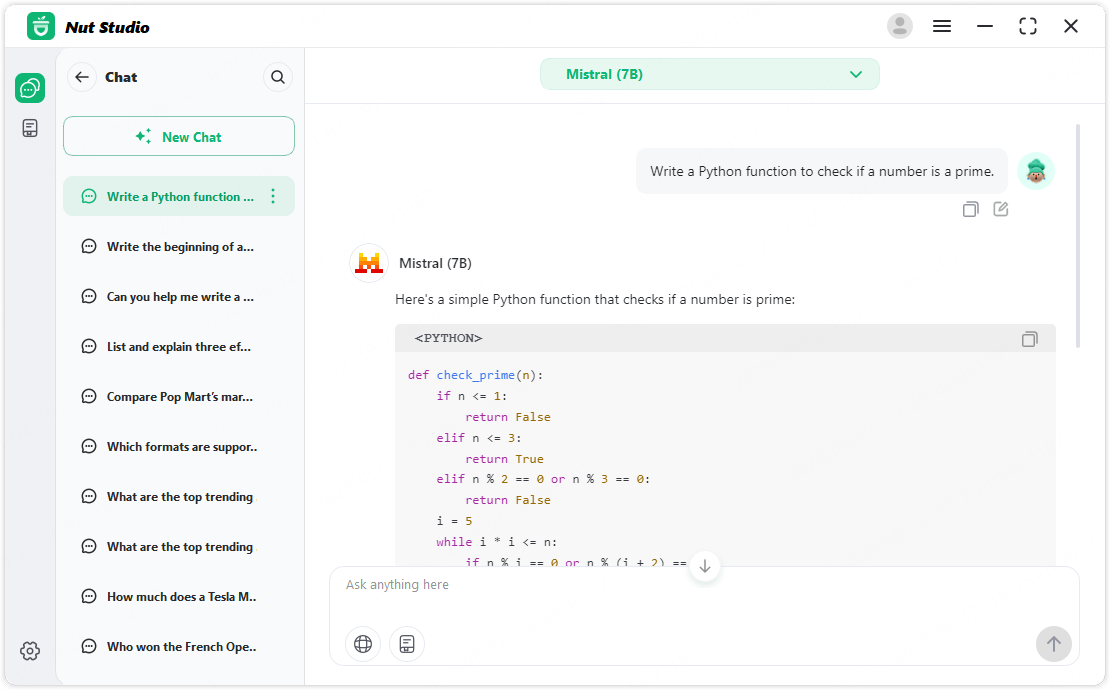

If you're using Windows and want the easiest way to run Mistral 7B locally, Nut Studio is your best bet. It's a free app that helps you install and run local AI models—no command line, no Python, no config files.

Nut Studio uses a clean, graphical interface. It automatically detects your system and GPU, then recommends a version of Mistral 7B that runs best on your hardware. It supports over 50 local LLMs, and lets you switch between different AI agents built for writing, studying, coding, or general chat—boosting your productivity right from your desktop.

- One-click installation with a visual interface, with no command line or Python required.

- Automatically detects your system and hardware to recommend the best model version.

- Supports running 50+ lightweight local LLMs including Mistral, LLaMA, DeepSeek, and more.

- Offers multiple built-in AI agents for writing, studying, coding, and productivity tasks.

- Runs fully offline with no internet needed once the model is installed.

Steps to Install Mistral 7B with Nut Studio

Step 1: Download Nut Studio and install it on your Windows PC.

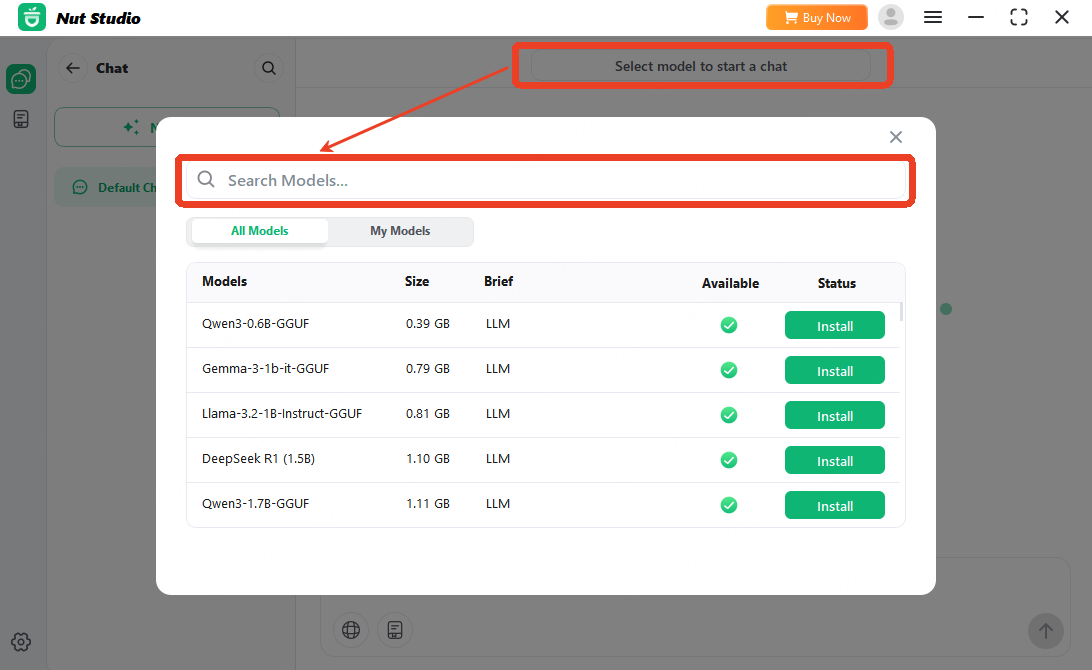

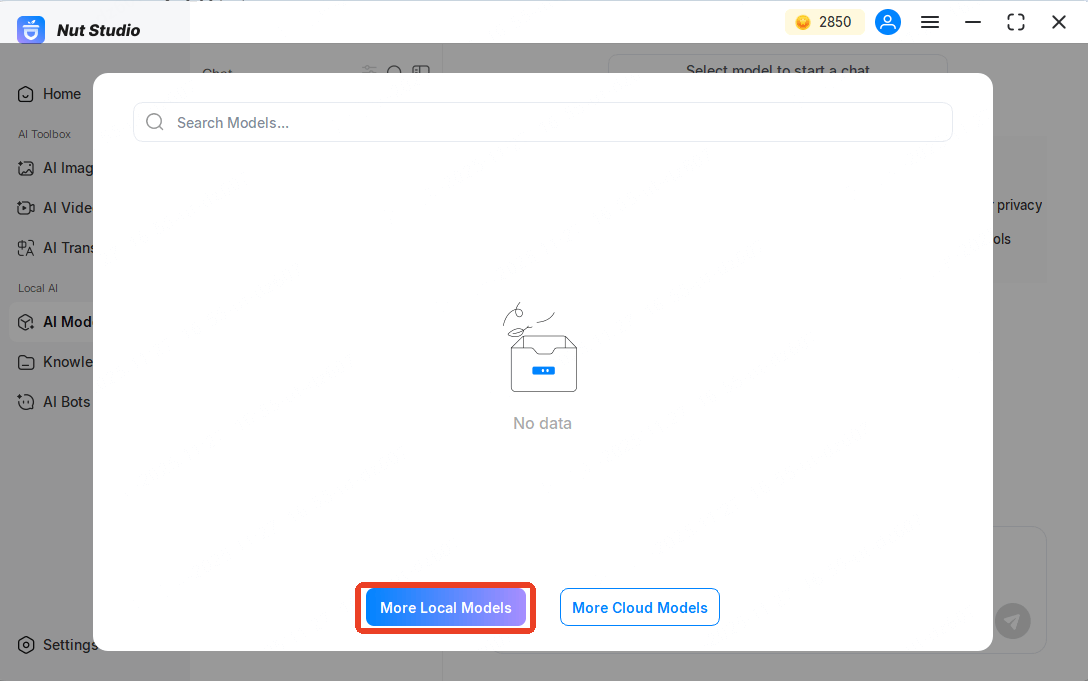

Step 2: Click "AI Model" in the left sidebar, then click "select model to start chat".

Select "Choose more local models", type "Mistral" in the search box, then click "Install" to start the one-click deployment.

Step 3: Wait a few minutes while the model downloads and sets up.

Once it's ready, you can start chatting with your local Mistral 7B model—completely offline.

2 Run Mistral 7B Locally on Mac Using Ollama

If you're on macOS, Nut Studio isn't available yet—but you can still run Mistral 7B locally on Mac using a powerful open-source tool called Ollama.

Unlike Nut Studio, Ollama works through the command line. It takes a few more steps to set up, but gives you full control over the model and runtime. It's a great option if you don't mind typing a few terminal commands.

Steps to Deploy Mistral on macOS with Ollama

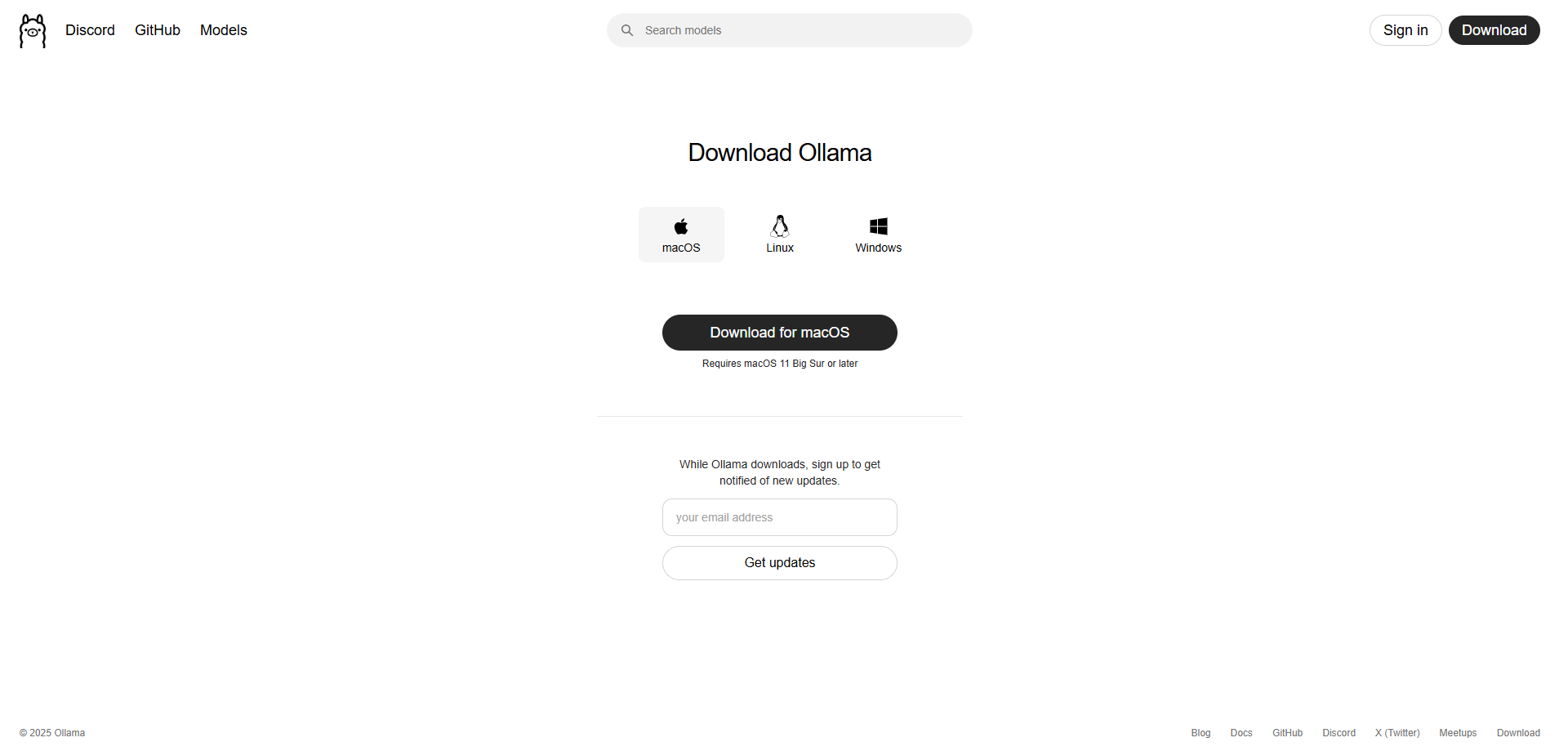

Step 1: Download and Install Ollama

Go to ollama.com and choose macOS to download the installer. You'll get a .zip file — double-click it to unzip, then drag the Ollama app into your Applications folder.

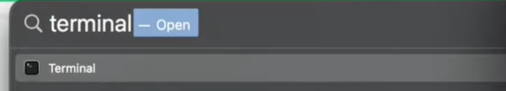

Step 2: Verify the installation

Open Terminal and run this command:

`ollama --version`

If it returns the version number of Ollama, then you've installed it successfully.

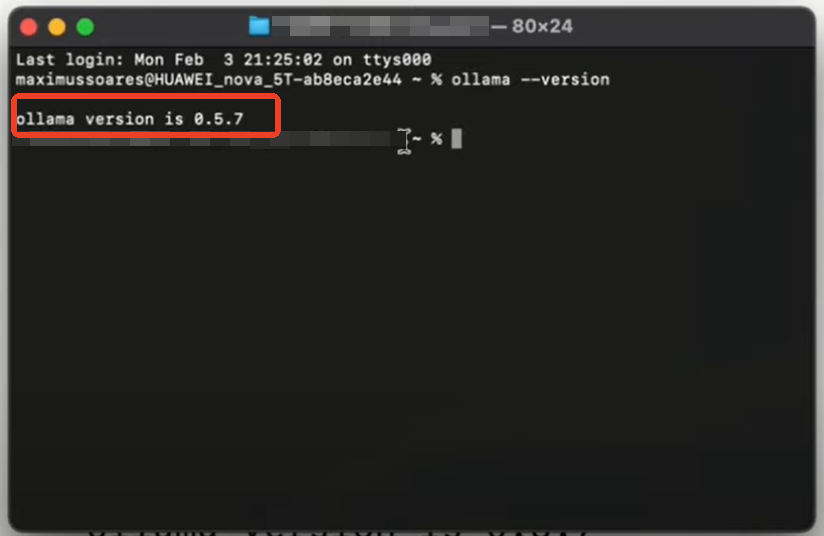

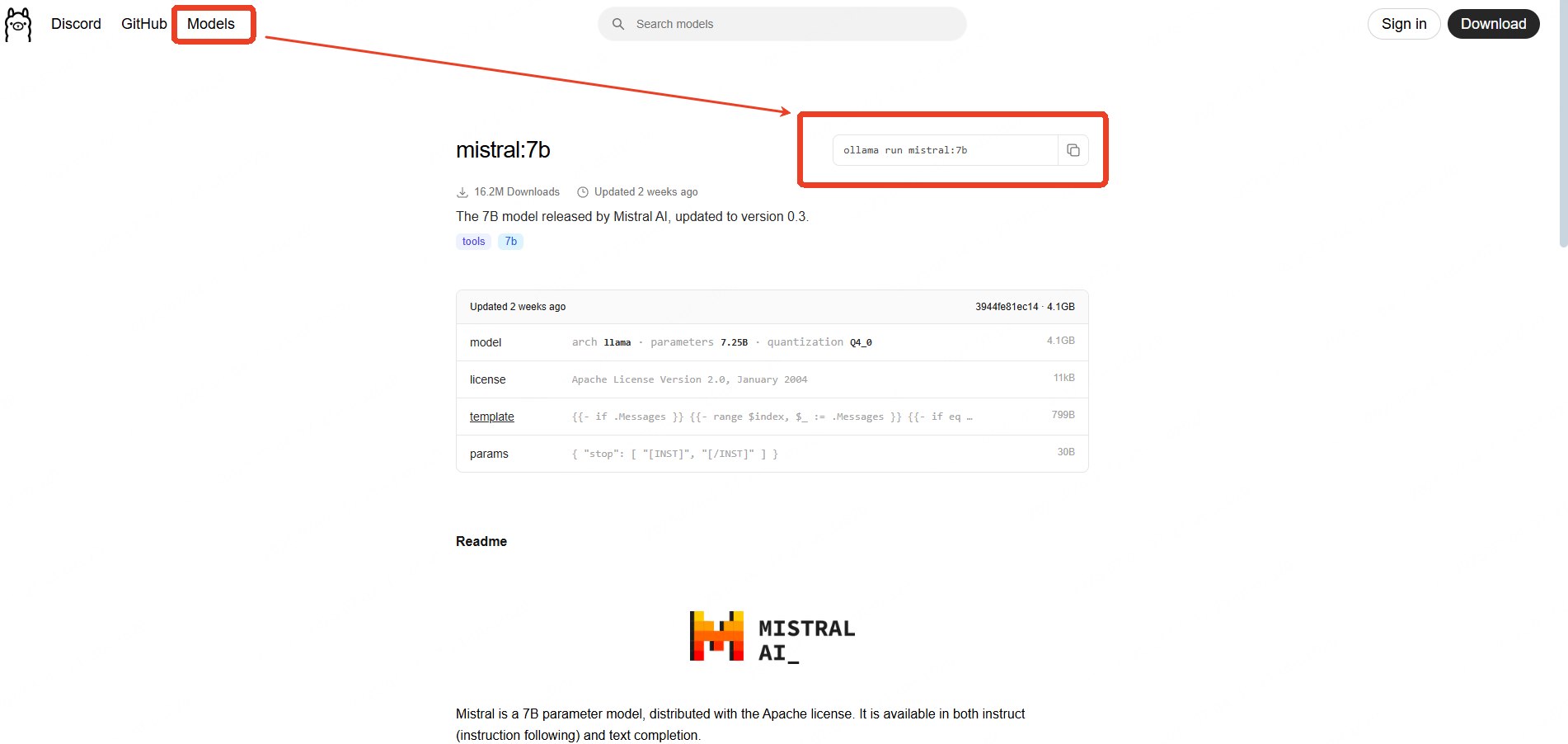

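Step 3: Install and run Mistral 7B

In the same Terminal window, run:

`ollama run mistral:7b`

Ollama will download the Mistral 7B model the first time you run this, then launch it automatically when it's ready. Ollama can also run GGUF model files from Hugging Face if you want to try a different build later.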

Step 4: Start chatting locally

Once the download finishes, you'll be able to chat with Mistral right inside your Terminal—no cloud, no accounts needed.

Compared to Nut Studio, this method needs more setup, but it gives you deeper customization and more flexibility. If you're a developer or enjoy DIY tools, it's a solid choice.
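For readers taking the developer route, much of that flexibility comes from the local HTTP API that Ollama exposes. The sketch below shows one possible way to send a single prompt from Python using only the standard library. It assumes Ollama is running at its default address (`http://localhost:11434`) and that the `mistral:7b` model from Step 3 is already downloaded. The function names `build_generate_request` and `ask_mistral` are made up for this illustration.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "mistral:7b"  # same model tag as in Step 3


def build_generate_request(prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": MODEL,
        "prompt": prompt,
        "stream": False,  # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def ask_mistral(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return its reply text."""
    request = build_generate_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]
```

With Ollama running, calling `print(ask_mistral("Explain what a GGUF file is in one sentence."))` should print the model's reply. The request only goes to `localhost`, so the prompt stays on your machine.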

What Hardware Do You Need to Run Mistral 7B Locally

Before you try to run Mistral 7B locally, you're probably wondering: can my computer even handle this?

The good news is: Mistral 7B is designed to be lightweight, so it runs on many personal laptops and desktops—even without a high-end GPU.

Here's a simple breakdown of the recommended hardware:

| Component | Minimum Requirements | Recommended for Best Performance |
|---|---|---|
| RAM | 8GB | 16GB or more |
| GPU (VRAM) | Optional (CPU works) | 6GB+ VRAM (e.g. RTX 3060 or better) |
| Storage Space | 10GB free space | SSD preferred for faster load times |
| CPU | Any modern processor | At least quad-core (Intel i5/Ryzen 5) |

If you're still debating how to run Mistral 7B locally, just remember: you don't need a gaming PC. A mid-range laptop with enough memory can already get you started.
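If you'd rather check your machine against the table than eyeball it, here is a rough sketch in Python. The thresholds are copied from the table; the function names are hypothetical, and the GPU row is ignored because a GPU is optional. Python's standard library has no portable way to read installed RAM, so this sketch assumes you pass that number in yourself.

```python
import os
import shutil

# Thresholds copied from the hardware table.
MIN_RAM_GB = 8
RECOMMENDED_RAM_GB = 16
MIN_FREE_DISK_GB = 10
RECOMMENDED_CPU_CORES = 4


def rate_hardware(ram_gb: float, free_disk_gb: float, cpu_cores: int) -> str:
    """Return 'too low', 'minimum', or 'recommended' for the given specs."""
    if ram_gb < MIN_RAM_GB or free_disk_gb < MIN_FREE_DISK_GB:
        return "too low"
    if ram_gb >= RECOMMENDED_RAM_GB and cpu_cores >= RECOMMENDED_CPU_CORES:
        return "recommended"
    return "minimum"


def detect_free_disk_gb(path: str = ".") -> float:
    """Free space on the drive that holds `path`, in gigabytes."""
    return shutil.disk_usage(path).free / 1e9


def detect_cpu_cores() -> int:
    """Logical core count reported by the OS (1 if it cannot be determined).

    Note: this counts logical cores, which can be higher than the
    physical core count the table refers to.
    """
    return os.cpu_count() or 1
```

For example, on a laptop with 16GB of RAM you could call `rate_hardware(ram_gb=16, free_disk_gb=detect_free_disk_gb(), cpu_cores=detect_cpu_cores())` and read the label it returns.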

Nut Studio automatically detects your hardware and picks the right model for you—no manual setup needed. It's the safest way to try local LLMs if you're unsure where to start.

FAQs About Mistral Local LLM

1 Can I run Mistral without a GPU?

Yes. You can run Mistral 7B using only your CPU. It will be slower than GPU inference, but it works fine for basic tasks like chatting or note-taking. You can use Nut Studio to auto-detect your system and pick a lightweight version that runs smoothly—even without a GPU.

2 How big is the Mistral 7B file?

Around 4–8GB for the quantized GGUF builds that local tools typically download (4-bit to 8-bit). The original 16-bit weights are larger, at roughly 14–15GB. Nut Studio and Ollama will handle the download for you, so no manual setup is needed.
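A rough way to see where these sizes come from is to multiply the number of weights by the bits stored per weight. The sketch below assumes about 7.3 billion weights (the figure Mistral AI gives for this model) and ignores file metadata and mixed-precision layers, so real downloads come out somewhat larger. The function name `estimate_size_gb` is made up for this example.

```python
# Assumption: about 7.3 billion weights. Real files add metadata and keep
# some layers at higher precision, so treat these as lower-bound estimates.
PARAMETERS = 7.3e9


def estimate_size_gb(bits_per_weight: float, parameters: float = PARAMETERS) -> float:
    """Approximate file size in GB: weights x bits per weight / 8 bits per byte."""
    return parameters * bits_per_weight / 8 / 1e9


for label, bits in [("4-bit", 4), ("8-bit", 8), ("16-bit", 16)]:
    print(f"{label}: about {estimate_size_gb(bits):.2f} GB")
```

By this estimate, a 4-bit build needs about 3.65 GB, an 8-bit build about 7.3 GB, and unquantized 16-bit weights about 14.6 GB, which is why quantized versions are the practical choice for laptops.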

3 Can I run it without internet?

Yes. Once Mistral 7B is installed, it runs completely offline. That's one of the biggest benefits of a local Mistral LLM: no cloud and no data sharing. With Nut Studio, you can open Mistral like any desktop app; just click and start chatting.

4 Is Mistral better than GPT models?

It depends. Mistral 7B beats models like LLaMA 2 13B in many benchmarks and runs locally. But GPT-4 is still stronger for complex tasks—just not available offline or free.

5 Does Mistral support Windows 11?

Yes. Nut Studio fully supports running Mistral on Windows 11, with no coding required.

6 Will it work on Mac M1/M2?

Yes. Tools like Ollama support Apple Silicon natively. You can run Mistral 7B on MacBook M1/M2 directly from Terminal.

7 Is Mistral 7B good for coding?

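Yes. In Mistral AI's published benchmarks, Mistral 7B approaches the code performance of CodeLlama 7B, a model specialized for code, while staying strong on general English tasks. If you're choosing between local models, it's one of the best LLMs for coding you can run offline.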

Conclusion

If you want the easiest way to run Mistral locally, Nut Studio is the top pick for Windows. It skips the coding—just one click to install and chat. For Mac users, Ollama is a solid option if you're okay with the terminal. Both tools let you run Mistral 7B fully offline, with no cloud and full control. Ready to try local AI? Just follow the guide and see how simple it can be.
