This guide shows you how to run Llama 3 locally on a Windows 10 or 11 PC, step by step. It covers what you need before starting and the easiest ways to install and use the model. Whether you're new or experienced, you should be able to follow along without writing any code.


What is Llama 3?

Llama 3 is a large language model (LLM) developed by Meta in 2024. It's designed to handle a wide range of AI tasks like chatting, writing, summarizing, and coding. One of its key features is its ability to follow instructions more accurately and respond in a natural, human-like way.

The model comes in multiple sizes, including 8B and 70B, and has been trained on over 15 trillion tokens. Common Llama 3 local use cases include brainstorming ideas, creative writing, answering questions as a specific character, and summarizing long documents.

Because Llama 3 is open and free for most users, many people now choose to run Llama locally instead of relying on cloud tools.

Llama 3 vs Earlier Llama Versions: What's New?

Now that you know what Llama 3 is, let's look at how it compares to earlier versions. Each release brought major updates, but Llama 3.1 takes things even further. If you're not sure whether to install Llama 3 or Llama 3.1, or how they improve over earlier versions, here's a simple breakdown to help you decide.

| Feature | Llama 3.1 | Llama 3 | Llama 2 | Llama 1 |
|---|---|---|---|---|
| Release Date | July 2024 | April 2024 | July 2023 | February 2023 |
| Model Sizes | 8B / 70B / 405B | 8B / 70B (405B still in development at launch) | 7B / 13B / 70B | 7B / 13B / 33B / 65B |
| Context Length | Up to 128K tokens | 8K tokens (longer with some tools) | 4K tokens | 2K tokens |
| Training Data | Over 15 trillion tokens | Over 15 trillion tokens | Around 2 trillion tokens | Up to ~1.4 trillion tokens (public data only) |
| Performance | Further improved accuracy & reasoning | Comparable to GPT-4 Turbo in many tasks | Beats GPT-3.5 in several benchmarks | Solid for research, but less optimized |
| Multilingual | Even stronger multilingual support | Strong multilingual capabilities | Moderate, mainly English | Very limited |
| License | Llama 3.1 community license (research & commercial use) | Llama 3 community license (research & commercial use) | Commercial-friendly, not fully open | Research only (non-commercial) |
| Fine-tuning | Enhanced fine-tuning stability and efficiency | Optimized for LoRA, QLoRA, PEFT, etc. | Supports LoRA, QLoRA, PEFT | Limited LoRA experimentation |
| Best For | More advanced AI assistants, longer conversations, complex tasks | Local AI apps, assistants, enterprise R&D | Education, demos, local experiments | Academic research |

Source: Meta

Key Takeaways:

- Llama 1 was a big step in research when it launched in early 2023. But it had a limited license and didn't work well outside of academic use.

- Llama 2 made the model more practical. It allowed commercial use, improved performance, and added support for tools like LoRA. People began to run Llama locally for real-world use like testing apps or building demos.

- Llama 3 launched in April 2024 with major upgrades. It was trained on over 15 trillion tokens, supports context lengths up to 8K tokens, and performs at a level close to GPT-4 Turbo in many tasks. It was released in 8B and 70B sizes, with a 405B version still in development at the time. It also has strong multilingual support and better reasoning ability. This version became popular with people who wanted to run Llama on their own PCs. The 70B version in particular is among the top LLMs for storytelling and long-form creative writing.

- Llama 3.1, released in July 2024, adds a 405B model alongside the 8B and 70B sizes and brings better accuracy, a much longer context window (up to 128K tokens), stronger multilingual support, and enhanced fine-tuning. If you're planning to run Llama locally, it's the strongest of the four versions compared here.

You can try the latest versions like Llama 3.1 on your Windows PC with just one click—no coding needed.

[Windows] How to Run Llama 3/Llama 3.1 Locally?

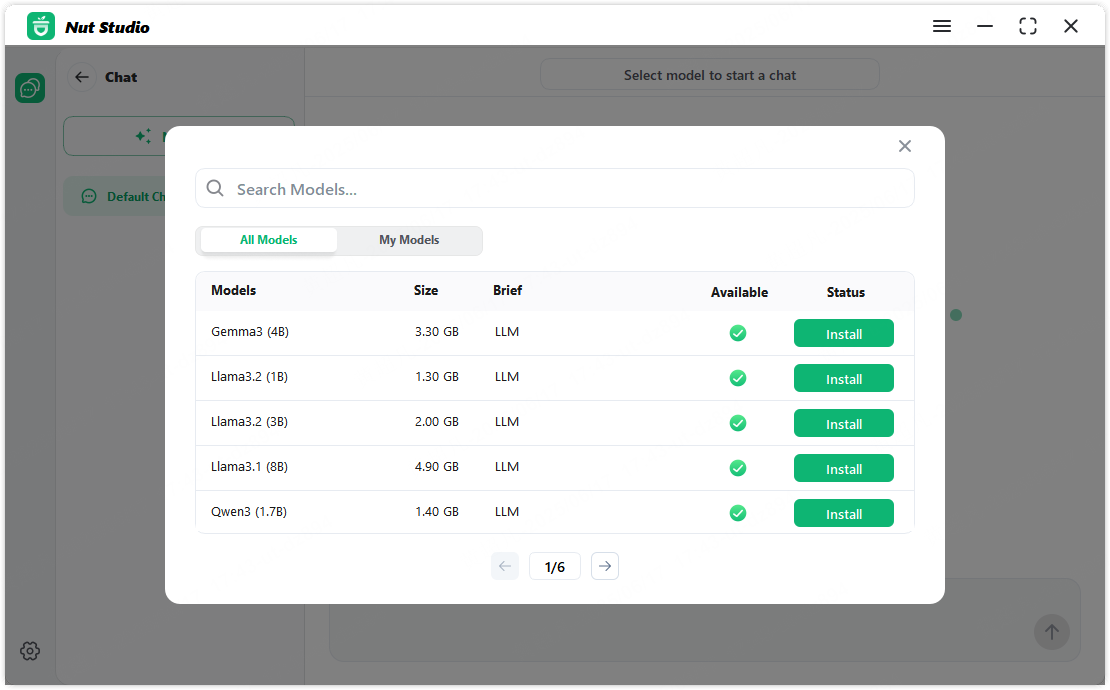

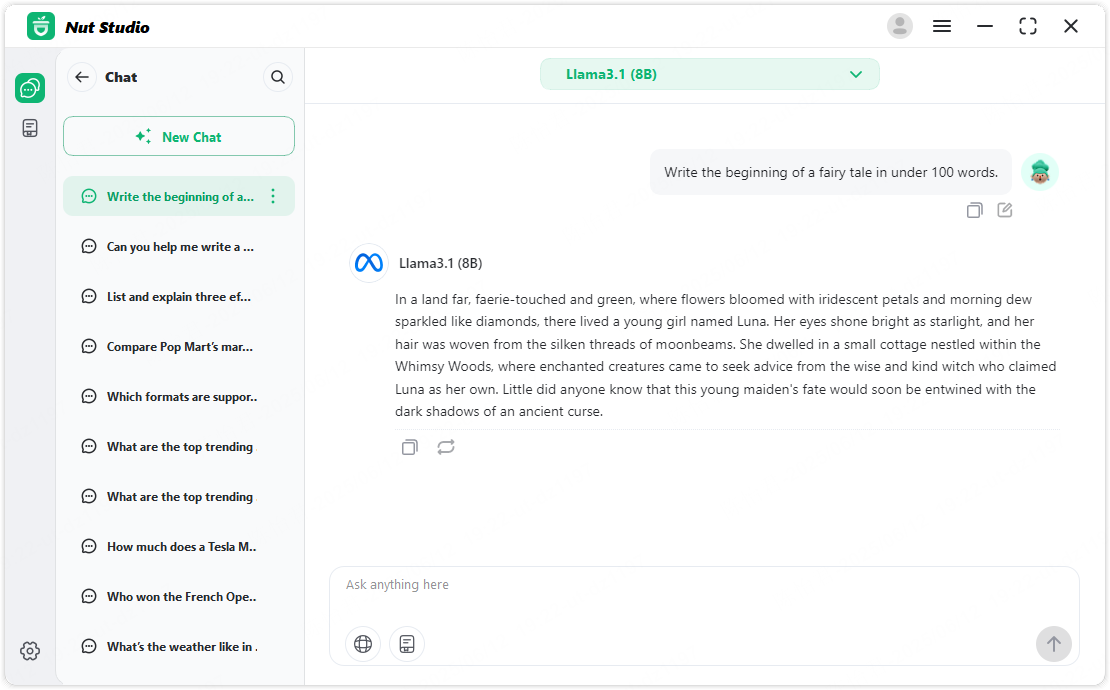

If you're ready to run Llama locally on your Windows 10 or 11 PC, you don't need to be a coding expert. Nut Studio is a free desktop tool that can install powerful models like Llama 3.1 in just a few clicks. You can use it to download and run more than 29 open-source LLMs, including Llama 3 and Llama 3.1.

You don't need to open the terminal or write any code. It's a good fit if you want to try Llama offline: everything runs locally, and your data stays private.

Here's how you can run Llama on PC using Nut Studio:

Step 1: Download Nut Studio

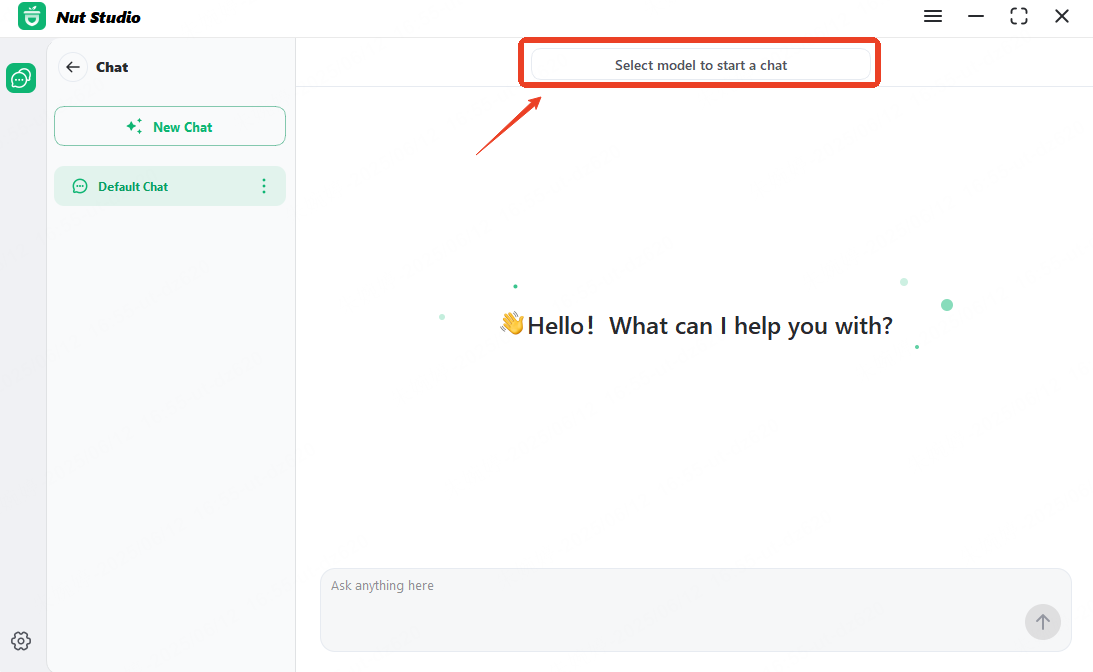

Step 2: In the top control bar, click "Select model to start a chat" and pick a Llama 3 version to install. Nut Studio handles all the setup in the background, including model weights, tokenizer, and configurations. No manual steps are needed.

Step 3: Once the download is complete, you'll be chatting with Llama 3 locally—directly on your device.

How to Install Llama 3.1 Using Ollama?

If you prefer full control over the setup and don't mind using the command line, Ollama is a solid choice. It's an open-source tool made for running models like Llama 3.1 locally. You can use it to download, manage, and launch models from your terminal.

Would you rather not use the terminal? Nut Studio is a simple Ollama alternative that lets you do the same thing with clicks instead of commands.

Here's how to set up Llama with Ollama on Windows:

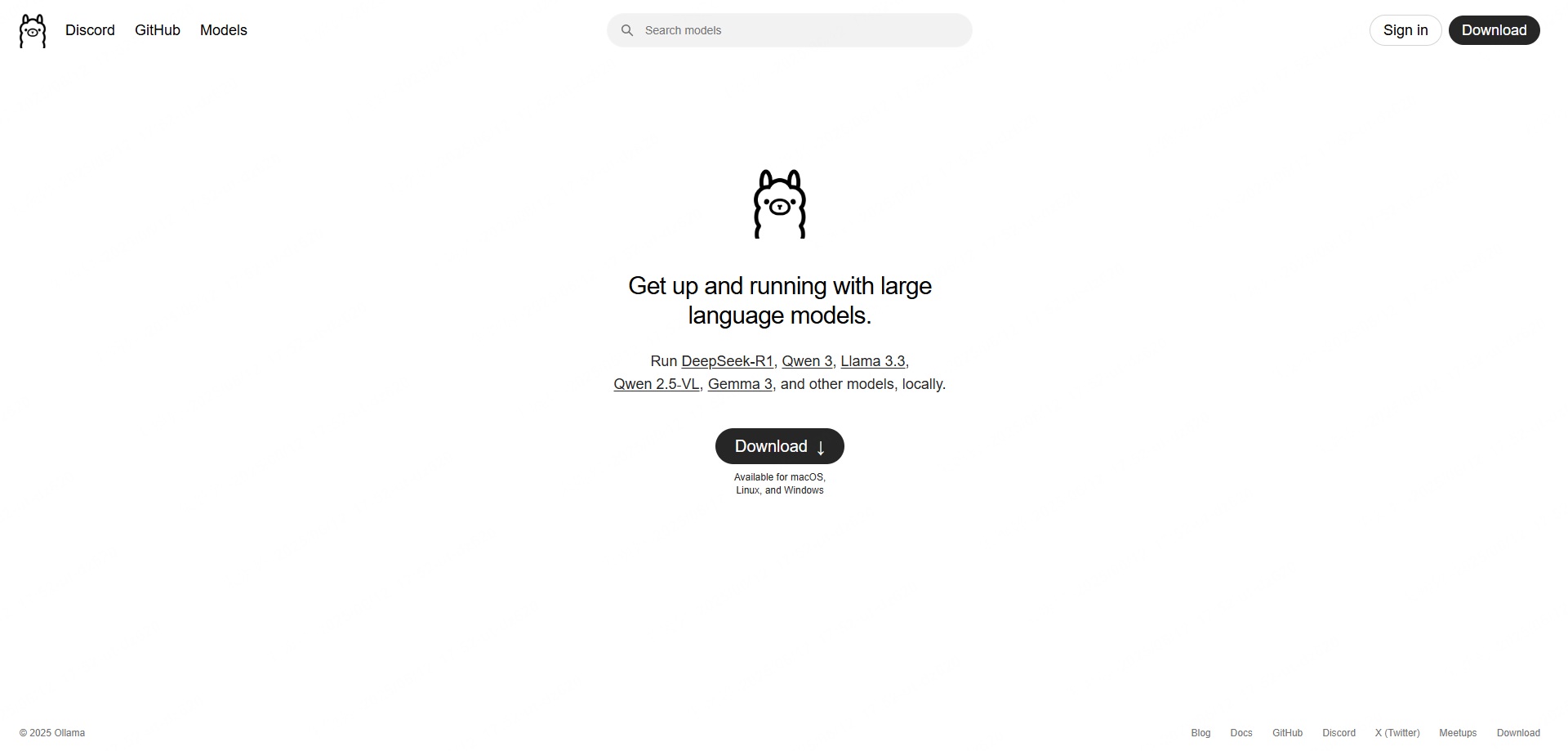

Step 1: Download and install Ollama

Visit ollama.com and choose your OS to download the installer.

For Windows, it comes as an .exe file — double-click the downloaded OllamaSetup.exe file to start the installation process.

Step 2: Verify the Ollama installation

Press Win + R to open the Run window. Type cmd and hit Enter to open the command prompt.

Then type this command:

ollama -v

If you see a version number, it means Ollama is installed correctly. If not, try reinstalling from the official site.
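The same check can be scripted, which is handy if you set up several PCs. The sketch below is a minimal example rather than an official tool: it assumes `ollama` is on your PATH and that the command prints a version number in `x.y.z` form somewhere in its output. The helper names `parse_version` and `ollama_version` are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_version(output: str) -> Optional[str]:
    """Return the first x.y.z-style version number found in the text, if any."""
    match = re.search(r"\d+\.\d+\.\d+", output)
    return match.group(0) if match else None


def ollama_version() -> Optional[str]:
    """Return the installed Ollama version, or None if Ollama is not found."""
    if shutil.which("ollama") is None:  # executable is not on PATH
        return None
    result = subprocess.run(
        ["ollama", "-v"], capture_output=True, text=True, check=False
    )
    # Assumption: the version number appears in stdout or stderr.
    return parse_version(result.stdout + result.stderr)


version = ollama_version()
print(f"Ollama {version} found" if version else "Ollama not found; try reinstalling")
```

If the script reports that Ollama was not found right after installing, opening a new command prompt usually picks up the updated PATH.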

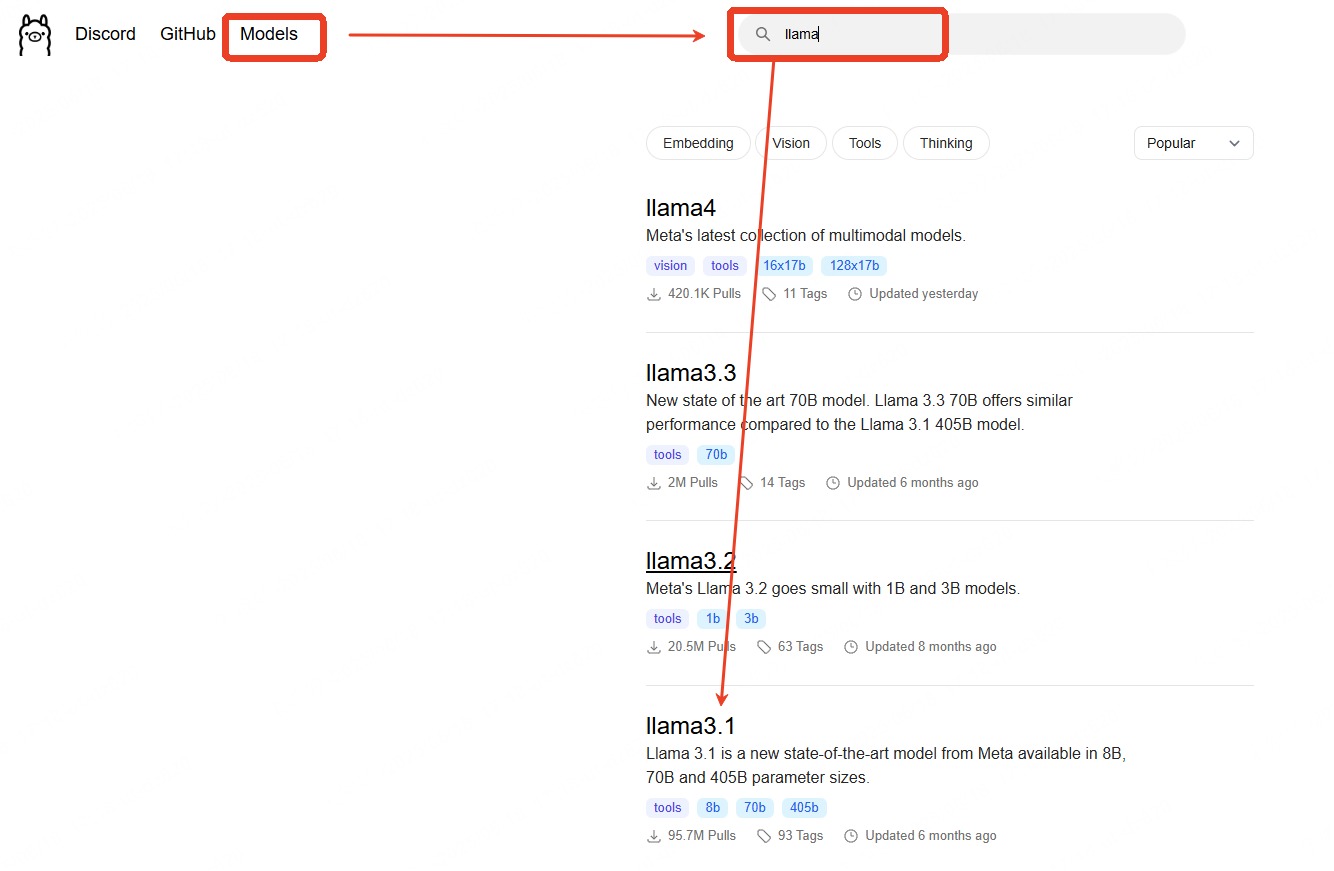

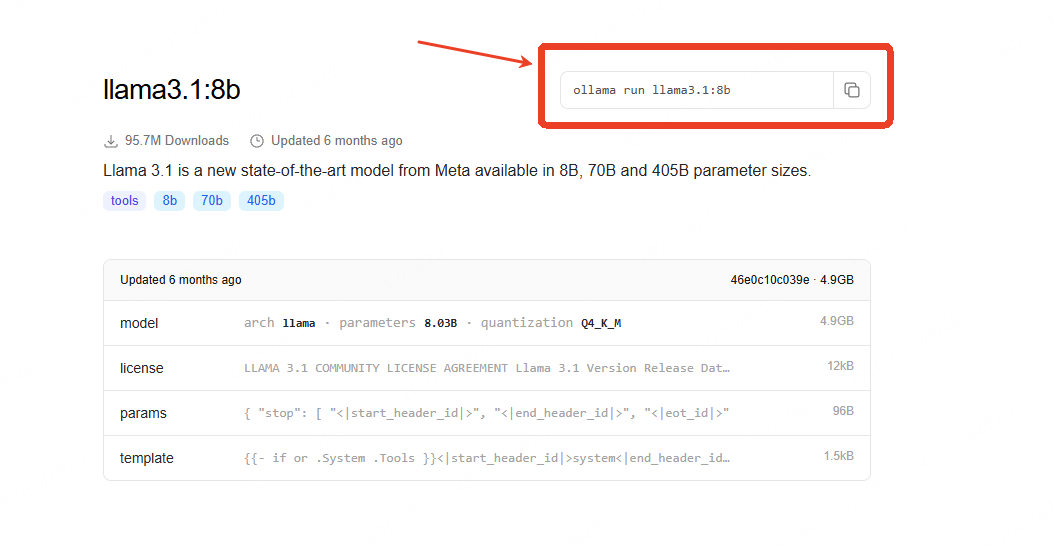

Step 3: Run Llama

Go to the Ollama model library and pick a version that fits your device. If you've downloaded a custom model file in GGUF format, you can import it into Ollama before running it locally.
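Importing a GGUF file works through a small text file called a Modelfile, whose `FROM` line points at the downloaded weights. The sketch below writes one for you. The file path, the optional context-length setting, and the helper names are illustrative assumptions, so adjust them to your own download.

```python
from pathlib import Path
from typing import Optional


def render_modelfile(gguf_path: str, num_ctx: Optional[int] = None) -> str:
    """Build Modelfile text that points Ollama at a local GGUF file."""
    lines = [f"FROM {gguf_path}"]
    if num_ctx is not None:
        # Optional: set the context window for the imported model.
        lines.append(f"PARAMETER num_ctx {num_ctx}")
    return "\n".join(lines) + "\n"


def write_modelfile(gguf_path: str, target: str = "Modelfile") -> Path:
    """Write the Modelfile to disk and return its path."""
    path = Path(target)
    path.write_text(render_modelfile(gguf_path), encoding="utf-8")
    return path


# Hypothetical file name; replace it with the GGUF file you downloaded.
print(render_modelfile("./my-llama.gguf"))
```

With the file written, `ollama create my-llama -f Modelfile` registers the model under the hypothetical name `my-llama`, which you can then use in place of `llama3.1` in the run command.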

Then, open the terminal and type:

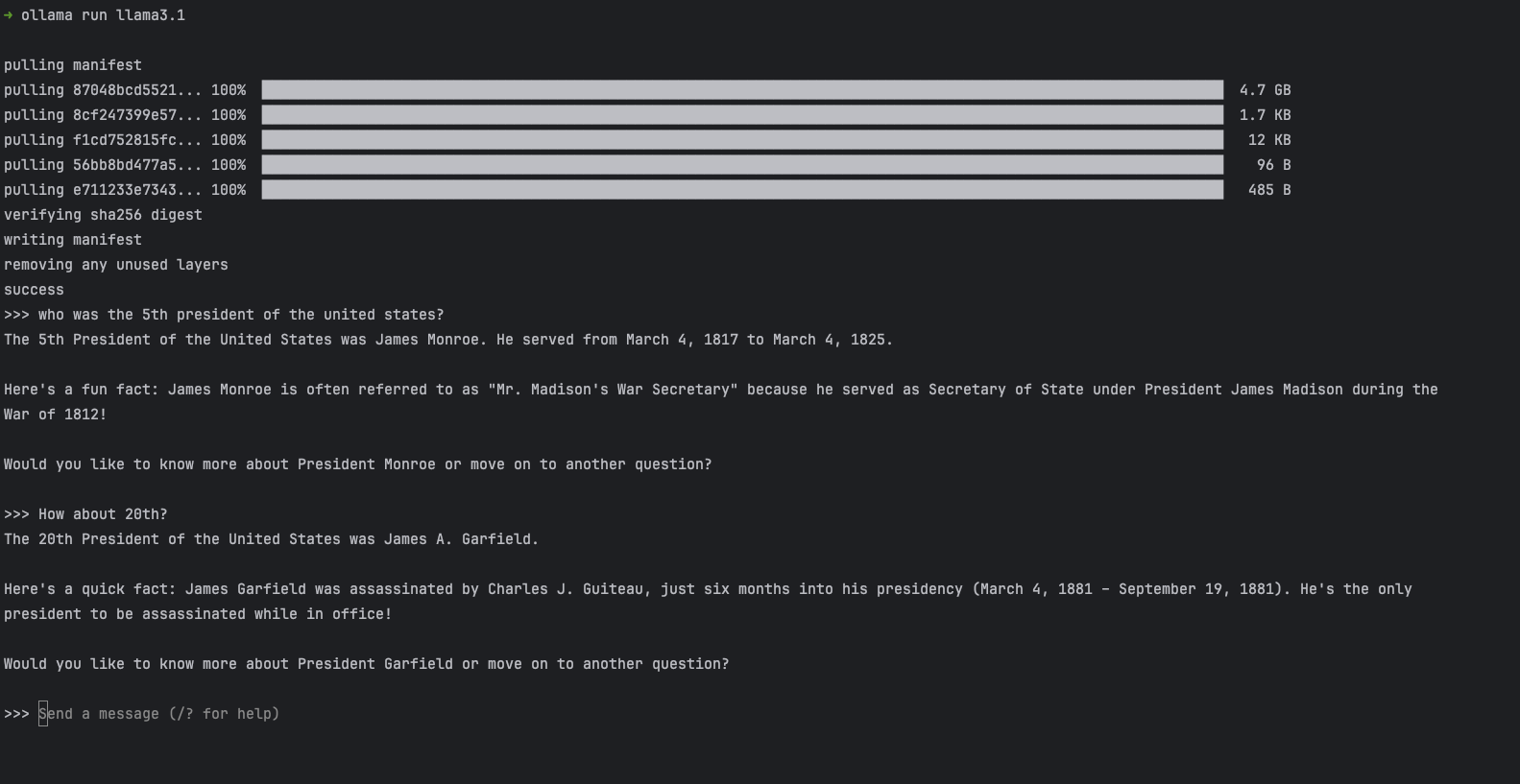

ollama run llama3.1

Step 4: Start Chatting

Ollama will download and launch the model. Once installed, you can start chatting directly in the terminal window.
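Besides the terminal chat, a running Ollama instance also answers HTTP requests on your own machine, by default at `http://localhost:11434`. The sketch below sends one prompt to the `/api/generate` endpoint using only the Python standard library. It assumes Ollama is running and that `llama3.1` has already been downloaded; the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3.1") -> urllib.request.Request:
    """Prepare a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "llama3.1") -> str:
    """Send the prompt and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as reply:
        return json.loads(reply.read())["response"]


# Example (requires a running Ollama server):
# print(ask("Summarize what a context window is in one sentence."))
```

Nothing leaves your PC here: the request goes to `localhost`, so the privacy benefit of running locally still applies.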

Ollama works only in the command line and doesn't come with a built-in chat window.

If you prefer a simple GUI and want to skip the setup, Nut Studio is a great option.

What Are the Hardware Requirements for Running Llama 3, 3.1, and 3.2 Locally?

Each version of Llama 3 has specific GPU VRAM requirements, which can vary significantly based on model size. These are detailed in the table below.

| Version Name | Key Architecture | Min. VRAM (Quantized) | Rec. System RAM | Recommended GPU | Best Use Case |
|---|---|---|---|---|---|
| Llama 3.2 1B | Text-only | 4 GB | 8 GB | NVIDIA GTX 1650 | Simple chat, Q&A |
| Llama 3.2 3B | Text-only | 4 GB | 8 GB | NVIDIA RTX 2060 | Summarizing, basic tasks |
| Llama 3.1 8B | Dense Text-only | 12 GB | 16 GB | NVIDIA RTX 4090 | Best LLM for coding, document AI |
| Llama 3.1 70B | Dense Text-only | 48 GB | 64 GB | NVIDIA A100 80GB x2 | Large-scale inference |
| Llama 3.1 405B | Dense Text-only | 250+ GB | 512+ GB | NVIDIA A100 80GB x12 | Enterprise training only |
| Llama 3 8B | Dense Text-only | 12 GB | 16 GB | NVIDIA RTX 4090 | Local assistants |
| Llama 3 70B | Dense Text-only | 48 GB | 64 GB | NVIDIA A100 80GB | Large-scale inference |

Source: Meta Llama AI

The Llama 3 hardware requirements vary a lot between versions. Smaller models like Llama 3.2 1B and 3B run well on most modern PCs, even without a GPU. But for Llama 3.1 8B or anything larger, you’ll need a powerful GPU and more RAM.
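A rough way to see why the numbers climb so quickly is to estimate the memory taken by the weights alone: parameter count times bits per weight. The sketch below does that arithmetic using the nominal sizes in the model names (actual parameter counts differ slightly). It deliberately ignores the context cache and runtime overhead, so treat the results as a lower bound rather than a requirement.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in GB (10^9 bytes)."""
    # params_billion * 1e9 weights * (bits / 8) bytes each, divided by 1e9
    return params_billion * bits_per_weight / 8


for name, size in [("Llama 3.2 3B", 3), ("Llama 3.1 8B", 8), ("Llama 3.1 70B", 70)]:
    print(
        f"{name}: ~{weight_memory_gb(size, 16):.0f} GB at 16-bit, "
        f"~{weight_memory_gb(size, 4):.1f} GB at 4-bit"
    )
```

By this estimate an 8B model needs about 4 GB for 4-bit weights and a 70B model about 35 GB, which is why quantized builds are the usual choice on consumer hardware.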

If your GPU's VRAM is just enough to meet the listed specs, you can still run the model—but you might need to tweak a few things. For example, reduce the batch size, lower context length, or enable memory-saving mode if supported by the tool you're using.
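Lowering the context length helps because the model keeps a cache of keys and values for every token in the conversation, and that cache grows linearly with context. The sketch below estimates its size for a single conversation. The default layer and head counts are taken from the published Llama 3 8B configuration and 16-bit cache values are assumed, so the figures are indicative only; other models and runtimes will differ.

```python
def kv_cache_gib(
    context_len: int,
    n_layers: int = 32,       # assumption: Llama 3 8B layer count
    n_kv_heads: int = 8,      # assumption: grouped-query attention, 8 KV heads
    head_dim: int = 128,      # assumption: size of each attention head
    bytes_per_value: int = 2  # assumption: 16-bit cache entries
) -> float:
    """Approximate key/value cache size in GiB for one sequence."""
    # 2x for keys and values, stored per layer, per KV head, per token.
    total_bytes = 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_value
    return total_bytes / 2**30


for ctx in (8192, 4096, 2048):
    print(f"{ctx} tokens: {kv_cache_gib(ctx):.2f} GiB")
```

Under these assumptions, dropping from 8,192 to 2,048 tokens cuts the cache from about 1 GiB to about 0.25 GiB, which can be enough to fit a model that was just over your VRAM limit.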

You don't need to check specs by hand. Nut Studio automatically detects your system and recommends only the models your PC can support.

FAQs About How to Use Llama 3

1 Is Llama Free?

Yes. Most Llama 3 models are free to use under Meta’s open license. You can download and run them for research, learning, or local apps. However, check the license if you're using it for business or commercial use.

2 Can Llama Run Without Internet?

Yes. Once downloaded, Llama works without internet. You can run it fully offline on your PC. Everything stays local, making it more private and secure.

3 Is Llama 3 Free to Use?

Yes. Llama 3 is free to use, just like earlier versions. You don’t have to pay for the model itself. Use a tool like Nut Studio or Ollama to run it easily on your system.

4 Can I Run Llama 3 Locally Without a GPU?

Yes, but only small models. Without a GPU, you can run models like Llama 3.2 1B or 3B. Bigger models need more power and may run too slowly on CPU-only systems.

5 How Much RAM Do I Need for Llama 3?

It depends on the version. Here's a quick guide to help you:

- Llama 3.2 1B / 3B: 8–16 GB RAM

- Llama 3.1 8B: 16–32 GB RAM

- Llama 3.1 70B: 64 GB+ RAM

If you're not sure what your PC can handle, Nut Studio can detect your specs and recommend the right Llama version for you.
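If you'd like to apply the list above in a script, the sketch below turns it into a simple lookup. It uses the lower bound of each range as the minimum, which is an interpretation of the list rather than an official threshold, and the function name is hypothetical.

```python
# Minimum RAM per model, using the lower bound of each range in the list above.
MIN_RAM_GB = {
    "Llama 3.2 1B": 8,
    "Llama 3.2 3B": 8,
    "Llama 3.1 8B": 16,
    "Llama 3.1 70B": 64,
}


def models_for_ram(ram_gb: float) -> list:
    """Return the models whose minimum RAM fits within the given amount."""
    return [name for name, need in MIN_RAM_GB.items() if ram_gb >= need]


print(models_for_ram(16))
```

You can find your installed RAM under Settings > System > About on Windows and pass that number in.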

Conclusion

Now you know how to run Llama on Windows 11 without any coding. Whether you choose Llama 3 or the latest 3.1 version, running it locally gives you full control and privacy. With Nut Studio, you can run Llama 3 locally in just a few clicks—no terminal, no hassle. It’s the easiest way to explore powerful open-source AI on your own PC.
