On August 5, 2025, OpenAI introduced a significant development in accessible AI with the release of two open-weight models: gpt-oss-120B and gpt-oss-20B. These models are engineered to deliver state-of-the-art performance in local, offline environments, marking a major shift from cloud-dependent APIs.

While models like Qwen3, Llama, and Mistral have advanced open-source AI, the gpt-oss series represents a new paradigm. This guide will walk you through the setup, application, and best practices for both the gpt-oss-120B and gpt-oss-20B models.

Run the powerful GPT-OSS models locally with one-click setup, and enjoy a wide selection of local LLMs with complete privacy.


GPT-OSS 20B/120B Model Overview

OpenAI's gpt-oss series is designed to serve two distinct hardware tiers while maintaining competitive performance against established benchmarks.

Architecture & Parameters

| Model | Layers | Total Params | Active Params Per Token | Total Experts | Active Experts Per Token | Context Length |
|---|---|---|---|---|---|---|
| gpt-oss-120b | 36 | 117B | 5.1B | 128 | 4 | 128K |
| gpt-oss-20b | 24 | 21B | 3.6B | 32 | 4 | 128K |

The gpt-oss models both utilize a sophisticated Transformer architecture with an integrated Mixture of Experts (MoE) mechanism. While the larger gpt-oss-120b contains 116.83 billion total parameters (5.13 billion active), the gpt-oss-20b features 20.91 billion total parameters, with 3.61 billion active.

According to OpenAI's model card, the models have a knowledge cutoff of June 2024. Their attention layers alternate between sliding-window (banded) and fully dense patterns and use grouped-query attention, which keeps memory use in check at long context lengths. They support a native 4K context length, extensible to 128K using YaRN and sliding window techniques. For efficiency, MXFP4 quantization is applied to the MoE weights, reducing them to 4.25 bits per parameter while retaining 94% of the original accuracy.
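To make these figures concrete, here is a back-of-the-envelope sketch that computes the share of parameters active per token and a rough weight footprint. It assumes, for simplicity, that every parameter is stored at 4.25 bits; in practice only the MoE weights use MXFP4, so real checkpoints are somewhat larger. The function names are illustrative.

```python
# Parameter counts in billions, taken from the overview above.
MODELS = {
    "gpt-oss-120b": {"total_b": 116.83, "active_b": 5.13},
    "gpt-oss-20b": {"total_b": 20.91, "active_b": 3.61},
}

MXFP4_BITS_PER_PARAM = 4.25  # figure quoted above for the MoE weights


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of parameters used for each token."""
    return active_b / total_b


def approx_weight_gb(total_b: float, bits_per_param: float = MXFP4_BITS_PER_PARAM) -> float:
    """Rough weight size in GB (10^9 bytes), ASSUMING one bit width for all weights."""
    return total_b * bits_per_param / 8  # billions of params * bits / 8 = GB


if __name__ == "__main__":
    for name, p in MODELS.items():
        frac = active_fraction(p["total_b"], p["active_b"])
        size = approx_weight_gb(p["total_b"])
        print(f"{name}: {frac:.1%} active per token, ~{size:.1f} GB of weights")
```

Under this simplification the estimates come out to roughly 11 GB and 62 GB, which is consistent with the 16 GB and 80 GB hardware tiers discussed in this guide.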

GPT-OSS-120B: High-End Local Performance

This model brings datacenter-level reasoning capabilities to local hardware.

- Performance: Achieves near-parity with OpenAI's o4-mini on core reasoning benchmarks.

- Hardware Requirement: Runs efficiently on a single 80 GB GPU.

- Best For: Researchers and developers needing maximum performance for complex tasks, data analysis, and agentic workflows without relying on an API.

GPT-OSS-20B: Advanced AI for Everyone

This smaller variant is optimized for consumer-grade hardware, making it a more flexible AI choice than ever before.

- Performance: Delivers results comparable to OpenAI's o3-mini.

- Hardware Requirement: Runs on devices with as little as 16 GB of memory, including modern laptops.

- Best For: On-device applications, everyday coding assistance, rapid prototyping, and offline reasoning tasks.

System & Hardware Requirements: VRAM, CPU, RAM, Disk

| Item | GPT-OSS-20B (Minimum) | GPT-OSS-20B (Recommended) | GPT-OSS-120B (Enterprise) |
|---|---|---|---|
| Memory / VRAM | ~16 GB (GPU VRAM preferred; can use unified/shared on some systems) | 16–24 GB VRAM for comfortable headroom (longer context, tools) | 80 GB GPU VRAM (single card) |
| GPU | NVIDIA with ≥16 GB VRAM (e.g., RTX 4060 Ti 16GB, RTX 3090 24GB); Apple Silicon 16–24 GB works well | Same as left; higher-end cards improve headroom/perf | NVIDIA H100 (80 GB) |
| CPU | Modern x86_64 or Apple Silicon; AVX2/NEON helps | Same | Server-class CPU with high PCIe bandwidth and lots of lanes. 24–64 cores, PCIe Gen4/Gen5, strong NUMA/memory bandwidth. |
| Storage | 12–16 GB+ for quantized weights (GGUF/MXFP4); more for BF16 safetensors | NVMe SSD recommended for fast loads & caching | NVMe SSD; enterprise storage as needed |
| OS / Runtime | Win 11 / macOS / Linux via Ollama, llama.cpp, vLLM | Same | Linux (typical); vLLM / enterprise runtimes |
| Perf Notes | Apple Silicon: ~20–30 tok/s with MPS | Higher VRAM = longer context & smoother tools | Single H100: ~50 tok/s/stream; ~200+ concurrent users feasible |

If you're targeting gpt-oss-20b, a system with 16–24 GB of GPU VRAM (or 16–24 GB unified memory on Apple Silicon) delivers smooth, practical performance, especially when paired with an NVMe SSD for fast loads and caching. It runs reliably across Windows, macOS, and Linux via popular runtimes like Ollama, llama.cpp, and vLLM, and benefits from modern CPU instruction sets (AVX2/NEON).

For teams that need maximum scale, gpt-oss-120b is a different class: plan on a single 80 GB GPU (such as the NVIDIA H100) to achieve production-grade throughput (around 50 tok/s per stream) and support large numbers of concurrent users. While Apple's M3 Max with 128 GB of unified memory could technically run the model, it is not recommended for achieving useful speeds.

In short, 20B fits prosumer desktops and Apple laptops; 120B belongs in well-provisioned servers.
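The tiering above can be expressed as a small helper. This is a minimal sketch: the function name is hypothetical, the thresholds are simply the 16 GB and 80 GB figures from the requirements table, and it checks capacity only, not whether the resulting speed is useful.

```python
from typing import Optional


def pick_model(memory_gb: float) -> Optional[str]:
    """Map available GPU VRAM (or unified memory) to a gpt-oss tier.

    Thresholds follow the requirements table: 80 GB for 120B, 16 GB for 20B.
    Returns None when neither model fits, in which case a smaller model
    is the better choice.
    """
    if memory_gb >= 80:
        return "gpt-oss-120b"
    if memory_gb >= 16:
        return "gpt-oss-20b"
    return None


if __name__ == "__main__":
    for gb in (8, 16, 24, 80):
        print(f"{gb} GB -> {pick_model(gb)}")
```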

What about smaller models? If you have less than 8 GB of VRAM, or if your specific use case doesn't require a massive model, there's a growing landscape of Small Language Models (SLMs). These are often more efficient and can be an ideal solution for resource-constrained environments. For more detailed information on SLMs and which models might be a good fit, be sure to read our SLM vs LLM guide.

How to Run GPT-OSS 20B/120B Locally

1 Nut Studio (Recommended for Beginners)

Nut Studio provides the most straightforward path to running GPT-OSS models locally. The application handles model downloads, quantization selection, and runtime configuration automatically.

Step 1: Download Nut Studio for your operating system from the official website. We currently support Windows, with Mac support coming soon.

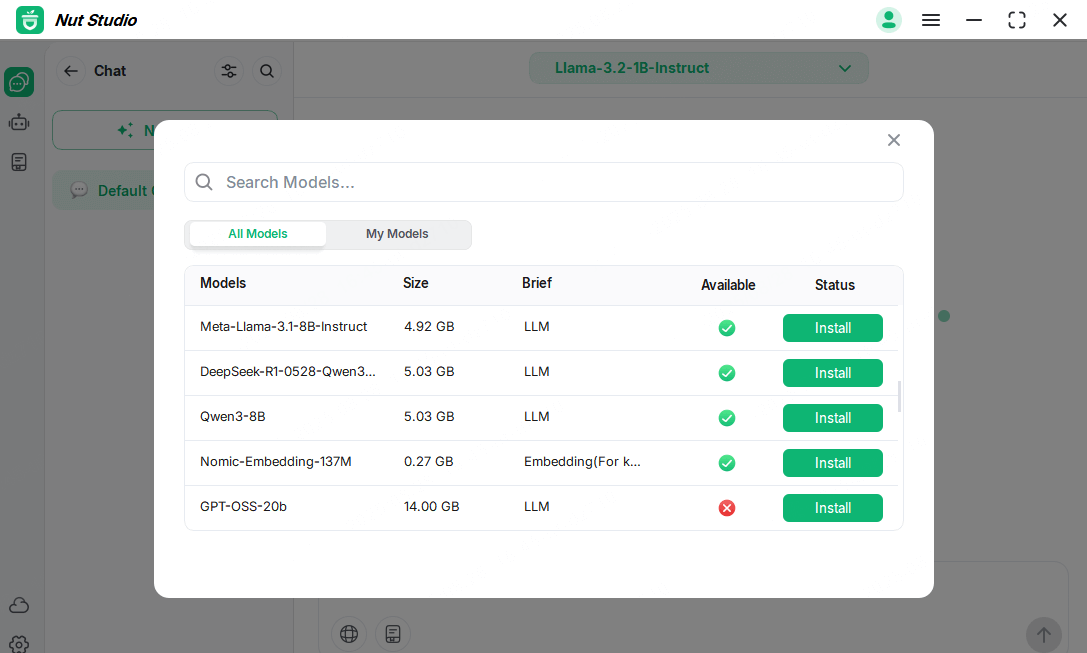

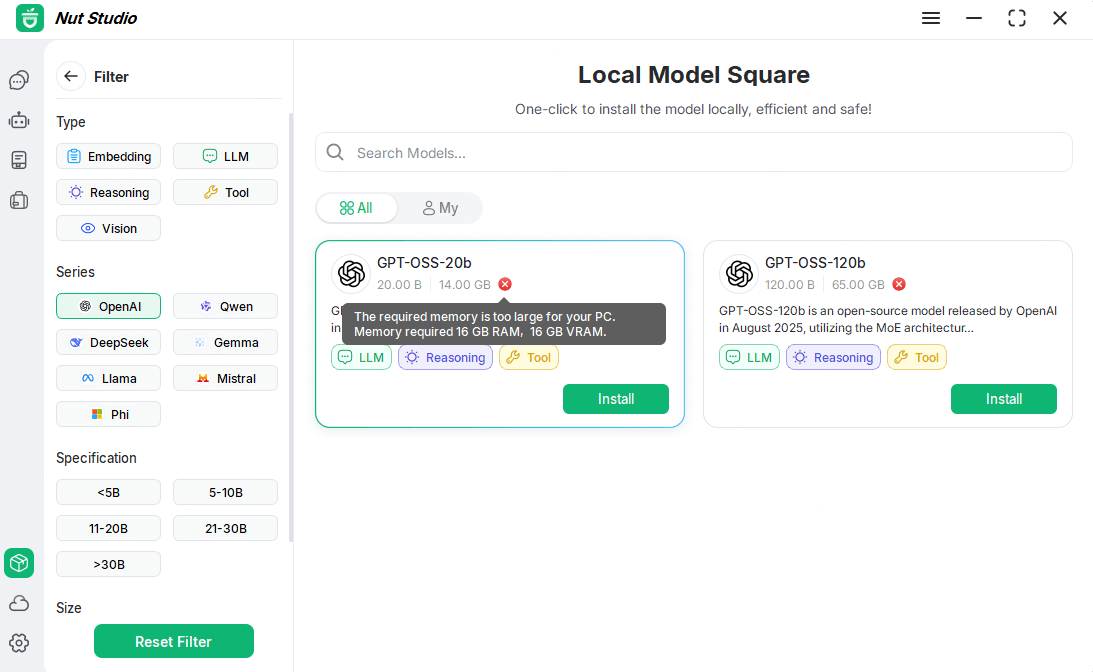

Step 2: Launch the application. You'll see the "Local Model Square" icon in the lower-left corner of the interface. This is your one-stop shop for all downloadable models.

In the left-hand menu under "Series," click on "OpenAI." This will filter the models to show their high-quality open-source releases, including the gpt-oss series.

Step 3: Install the models. You'll see two models: gpt-oss-20b and gpt-oss-120b. The larger 120b model is much more powerful, but it requires far more VRAM than most consumer computers have.

Important: As the screenshot shows, this computer has less than 16 GB of VRAM, so the model may not install. But don't worry! Nut Studio also supports cloud models that you can try for free if your hardware isn't strong enough.

Step 4: Once your model is successfully installed, you're ready to go! Try running it locally with a reasoning prompt like, "Explain in simple terms why a heavy rock and a light feather fall at the same speed in a vacuum."

To really see the difference, try the same prompt on another local model. You'll quickly get a feel for the unique strengths and personalities of each AI.

With Nut Studio, you can install models, build agents with intuitive tools, upload files to create knowledge bases, and integrate MCP (Model Context Protocol) tools seamlessly. We handle the model management complexities so you can focus on building applications rather than wrestling with configurations.

2 Ollama (Great for CLI Users)

Ollama is a simple command-line runner with an optional local API server.

Step 1: Install Ollama for your system.

- Windows: `winget install Ollama.Ollama` (or use the Windows installer)

- macOS: `brew install ollama`

- Linux: `curl -fsSL https://ollama.com/install.sh | sh`

Step 2: Pull the model you want. In the Ollama library the models are tagged `gpt-oss:20b` and `gpt-oss:120b`.

    # Pull the model
    ollama pull gpt-oss:20b

    # Quick one-off prompt
    ollama run gpt-oss:20b "Explain quantum computing in simple terms"

    # Serve a local API (OpenAI-style endpoints)
    ollama serve

Model import tips for Ollama:

- Use pre-quantized GGUF files for optimal performance

- Adjust context length with the `num_ctx` parameter (for example, `/set parameter num_ctx 8192` inside an interactive session)

- Enable GPU acceleration with appropriate CUDA/ROCm drivers
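Once `ollama serve` is running, the local API can be called from any language. The sketch below uses only the Python standard library and Ollama's `/api/generate` endpoint on its default port; the helper names and the `num_ctx` value of 8192 are illustrative choices, and `gpt-oss:20b` is the model tag as listed in the Ollama library.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default address of `ollama serve`


def build_request(prompt: str, model: str = "gpt-oss:20b",
                  num_ctx: int = 8192) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,                  # one JSON object instead of a stream
        "options": {"num_ctx": num_ctx},  # context window size in tokens
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str) -> str:
    """Send the prompt and return the generated text (requires a running server)."""
    with urllib.request.urlopen(build_request(prompt)) as resp:
        return json.loads(resp.read())["response"]
```

With the server up and the model pulled, `generate("Explain quantum computing in simple terms")` returns the completion as a string.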

3 vLLM (Recommended for Advanced Users)

For production deployments requiring high throughput:

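    from vllm import LLM, SamplingParams

    # Initialize the model (weights are fetched from Hugging Face on first use)
    llm = LLM(model="openai/gpt-oss-20b")

    # Configure sampling
    sampling_params = SamplingParams(
        temperature=0.8,
        top_p=0.95,
        max_tokens=1000,
    )

    # Prompts to complete; generate() accepts a list and batches them
    prompts = ["Explain quantum computing in simple terms"]

    # Generate responses and print the first candidate for each prompt
    outputs = llm.generate(prompts, sampling_params)
    for output in outputs:
        print(output.outputs[0].text)

Server startup basics: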

- Configure tokenizer paths correctly

- Set appropriate tensor parallel degree for multi-GPU setups

- Monitor VRAM usage and adjust batch sizes accordingly
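The startup points above map onto a handful of vLLM engine arguments. The sketch below collects them in one place; the helper name and the default values are illustrative assumptions rather than tuned recommendations, while `tensor_parallel_size`, `max_model_len`, and `gpu_memory_utilization` are existing vLLM options.

```python
def engine_kwargs(num_gpus: int = 1, max_context: int = 32768,
                  memory_fraction: float = 0.90) -> dict:
    """Collect vLLM engine options for gpt-oss-20b.

    The defaults are placeholders to adjust for your hardware.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return {
        "model": "openai/gpt-oss-20b",
        "tensor_parallel_size": num_gpus,           # shard the model across GPUs
        "max_model_len": max_context,               # cap context to bound KV-cache memory
        "gpu_memory_utilization": memory_fraction,  # fraction of each GPU vLLM may reserve
    }


# Usage (requires vLLM and a supported GPU):
#   from vllm import LLM
#   llm = LLM(**engine_kwargs(num_gpus=2))
```

Keeping the options in a plain dictionary makes it easy to log the exact configuration alongside VRAM measurements when adjusting batch sizes.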

GGUF is the single-file model format used by llama.cpp and the tools built on it, and it is the usual way to distribute quantized weights for local inference. To learn more about the format, check out this comprehensive guide: [2025 Beginner's Guide] How to Run Huggingface GGUF on Windows PC.

Troubleshooting & Optimization Guide

Installation & Dependencies

To avoid crashes and slowdowns, ensure you have the correct runtime and matching GPU drivers.

- Reinstall the appropriate runtime (CUDA 12.x, ROCm 5.x, or Metal) for your hardware.

- Verify that your GPU drivers are compatible with the chosen toolkit. Mismatches are a common cause of issues.

Quantization Formats

Use the correct quantization format for your tools. Avoid unnecessary conversions by downloading the right format directly.

- GGUF: Best for tools that use `llama.cpp`.

- Safetensors: Ideal for `vLLM` and Hugging Face Transformers.

Fixing VRAM Errors

If you're running out of VRAM, try these steps to free up memory.

- Lower quantization: Use formats like MXFP4 or Q4_K_M.

- Reduce memory usage: Shorten your context and system prompt, and turn off verbose logging.

- Enable CPU offloading: Keep some layers in system RAM, at the cost of speed. For inference, you can also cap the KV cache size; for fine-tuning, use gradient checkpointing.
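To see why shortening the context frees memory, it helps to estimate the KV cache, which grows linearly with the number of tokens. The sketch below is an upper bound that treats every layer as full attention; the layer count comes from the overview table, while the 8 KV heads and head dimension of 64 are assumed values used only for illustration.

```python
def kv_cache_bytes(context_tokens: int, layers: int, kv_heads: int, head_dim: int,
                   bytes_per_value: int = 2) -> int:
    """Upper-bound KV-cache size for one sequence.

    Each token stores one key and one value vector per layer and KV head.
    Sliding-window layers would make the real number smaller.
    """
    per_token = 2 * layers * kv_heads * head_dim * bytes_per_value  # 2 = key + value
    return context_tokens * per_token


if __name__ == "__main__":
    # 24 layers per the table above; kv_heads=8 and head_dim=64 are ASSUMED.
    for tokens in (8_192, 32_768, 131_072):
        gib = kv_cache_bytes(tokens, layers=24, kv_heads=8, head_dim=64) / 2**30
        print(f"{tokens:>7} tokens -> {gib:.3f} GiB")
```

With these assumed values the estimate is 16 times larger at 128K tokens than at 8K, which is why trimming context is often the quickest fix for an out-of-memory error.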

Performance Tuning

Optimize your model's performance by adjusting key settings.

- Batch size: Adjust this to target ~85–95% VRAM utilization without causing paging.

- Warm the cache: Run a short prompt first to reduce latency on subsequent runs.
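A simple way to check whether a tuning change helped is to time generation after a warm-up call. In this sketch `generate` is a hypothetical callable that runs your model and returns the number of tokens produced; wire it to whichever runtime you use.

```python
import time
from typing import Callable


def measure_tokens_per_second(generate: Callable[[str], int], prompt: str,
                              warmup_prompt: str = "Hello") -> float:
    """Time one generation after a warm-up call and return tokens per second."""
    generate(warmup_prompt)          # warm-up: loads weights and primes caches
    start = time.perf_counter()
    n_tokens = generate(prompt)      # timed run
    elapsed = time.perf_counter() - start
    return n_tokens / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    def fake_generate(prompt: str) -> int:
        """Stand-in for a real model call: pretends to emit 50 tokens."""
        time.sleep(0.1)
        return 50

    rate = measure_tokens_per_second(fake_generate, "Explain MoE")
    print(f"{rate:.0f} tok/s")
```

Run the measurement a few times and compare medians, since a single timing can be skewed by background load.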

Platform Notes

Keep these platform differences in mind for the best performance.

- Windows: Use native Ollama over WSL when you have sufficient VRAM.

- macOS: Leverage Metal Performance Shaders for optimal performance.

- Linux: Consider enabling large/huge pages for performance gains.

Skip the complex setup. Nut Studio handles everything automatically—from installation to optimization.

Benchmark Comparisons & Practical Performance Tips

Benchmark Performance

OpenAI's launch data shows strong results across standard evaluations. GPT-OSS-120B achieves near-parity with o4-mini on core reasoning benchmarks; the announcement cites evaluations such as Codeforces (competition coding), MMLU and HLE (general problem solving), and TauBench (tool calling). GPT-OSS-20B, despite its smaller size, delivers performance comparable to o3-mini on the same evaluations.

Understanding "parity" requires nuance—these models achieve similar score ranges rather than identical results across all tasks. Real-world performance depends heavily on prompt engineering, quantization choices, and specific use cases.

Comparison with Other 20B-Class Models

Comparative analysis reveals that GPT-OSS-20B demonstrates superior instruction-following capabilities when handling complex, multi-step tasks. Competing models of similar scale, including Gemma 3, Qwen 3, and Mistral Small, exhibit consistent deviations from specified requirements in challenging instruction-following scenarios.

Speed and Accuracy in Practice

Token generation speed varies significantly based on hardware and configuration:

- MXFP4/GGUF quantization typically achieves 30-50 tokens/second on consumer GPUs

- Warmed KV cache improves latency by 20-30% for conversation continuations

- Batch processing can increase throughput by 2-3x for appropriate workloads

What GPT-OSS-20B/120B Can Do

General Reasoning and Content Generation

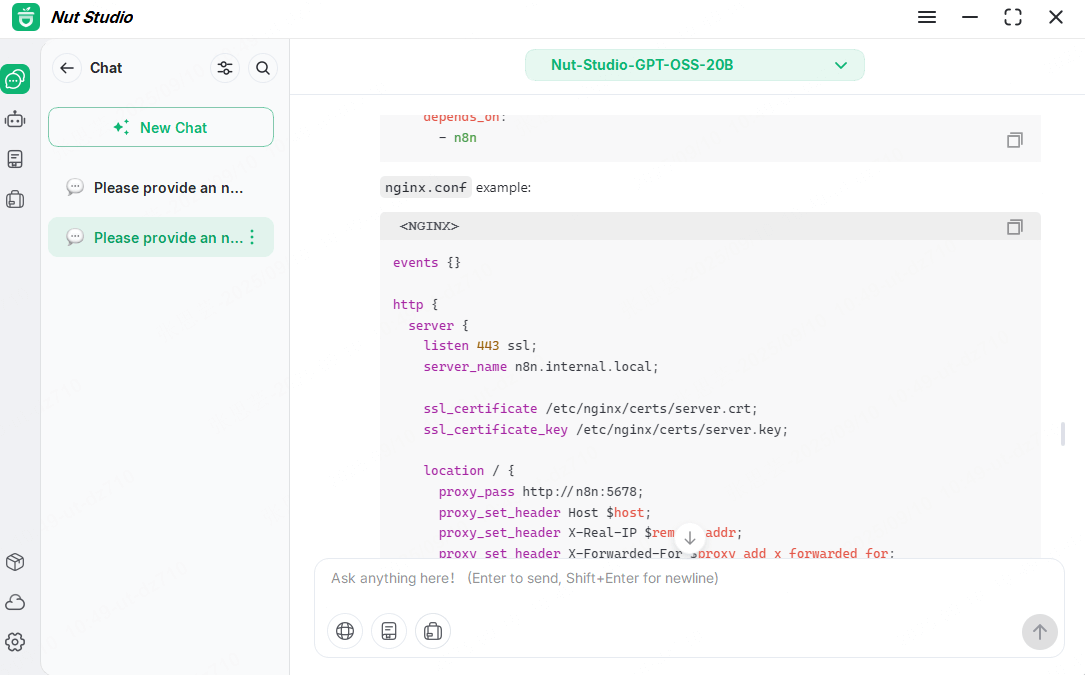

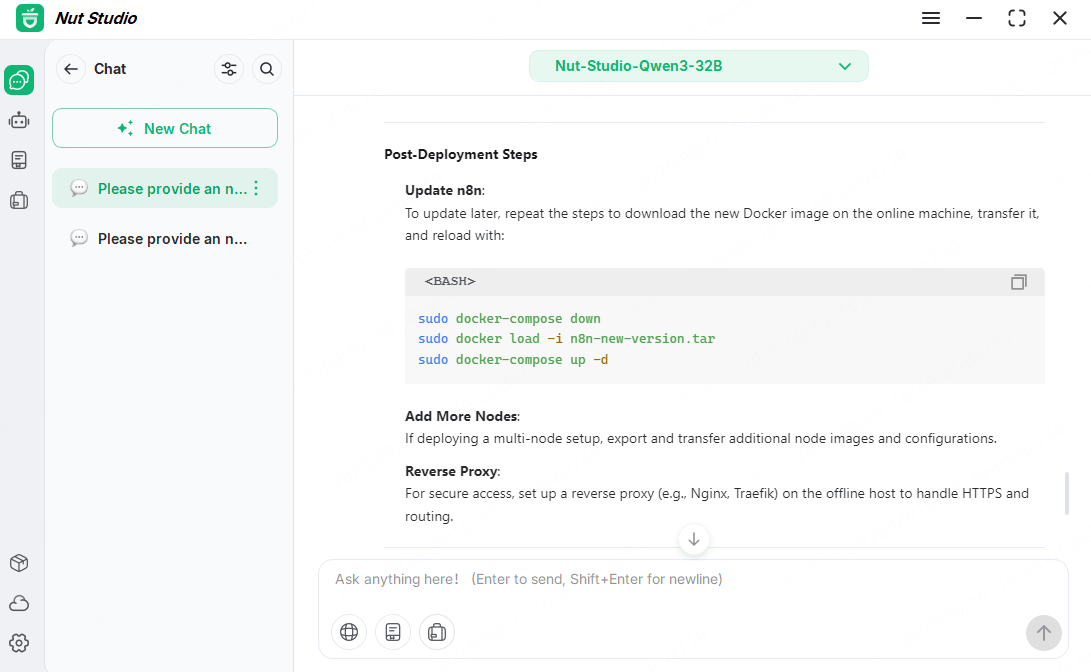

The models demonstrate exceptional performance in general reasoning tasks, coding assistance, and content summarization. During testing, I found the models particularly adept at breaking down complex problems into manageable steps. For instance, when I asked about setting up n8n in restricted environments, it naturally focused on offline-first solutions, walking me through the N8N_OFFLINE mode configuration and explaining how to pre-stage Docker images without external dependencies.

Rather than just providing code snippets, it covered the full deployment lifecycle - from initial setup through production hardening. When I tested scenarios involving compliance requirements, it included verification steps that would actually satisfy audit processes, like specific log checks and network isolation testing.

Qwen-32B performed well with straightforward deployment questions and delivered clean, functional code examples. However, I found it less consistent when dealing with the nuanced requirements of enterprise environments where security and operational considerations take precedence over quick implementation.

Tool Use and Agentic Workflows

Both models showcase exceptional tool use capabilities and few-shot function calling performance. They support OpenAI's new Harmony prompt format, which includes multi-role systems (system, developer, user, assistant, tool) and three-channel output for final responses, analysis reasoning, and commentary. This makes them particularly effective for agentic workflows and RAG-style applications.
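To illustrate the three-channel output, the sketch below splits a raw assistant completion into its channels. It is a simplified parser written for this guide and assumes the `<|channel|>`, `<|message|>`, `<|end|>`, `<|return|>`, and `<|call|>` markers of the Harmony format; for real applications, OpenAI's `openai-harmony` library is the safer choice.

```python
import re
from collections import defaultdict

# Simplified pattern: channel name, optional header text (e.g. a tool recipient),
# then the message body up to the next stop token or the end of the string.
_SEGMENT = re.compile(
    r"<\|channel\|>(\w+)(.*?)<\|message\|>(.*?)(?=<\|end\|>|<\|return\|>|<\|call\|>|$)",
    re.DOTALL,
)


def split_channels(raw: str) -> dict:
    """Group message bodies by channel name (analysis, commentary, final)."""
    channels = defaultdict(list)
    for name, _header, body in _SEGMENT.findall(raw):
        channels[name].append(body.strip())
    return dict(channels)


if __name__ == "__main__":
    sample = (
        "<|channel|>analysis<|message|>The user wants a simple sum.<|end|>"
        "<|start|>assistant<|channel|>final<|message|>2 + 2 = 4.<|return|>"
    )
    print(split_channels(sample)["final"])
```

In an application, only the `final` channel is normally shown to the user, while `analysis` holds the model's reasoning and `commentary` carries tool calls.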

Efficient Local Inference

The models are optimized for privacy-sensitive work and rapid iteration without expensive cloud infrastructure. The gpt-oss-20b uses approximately 12 GB of memory and has been reported to reach 160-180 tokens per second on high-end hardware; on laptops, expect speeds closer to the 20–30 tokens per second listed in the requirements table. Either way, it enables near real-time conversation completely offline.

RAG Implementations

Through extensive testing with various knowledge bases, I found the best results when providing 3-6 highly relevant passages. More than that tends to crowd the context, while fewer often leaves the model without enough information to answer well.
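The 3-6 passage guideline can be enforced at retrieval time. The following is a minimal sketch that works with any retriever returning (passage, relevance) pairs; the function name and the `min_score` cutoff of 0.5 are hypothetical and should be tuned for your own scoring scale.

```python
from typing import List, Tuple


def select_passages(scored: List[Tuple[str, float]], min_k: int = 3, max_k: int = 6,
                    min_score: float = 0.5) -> List[str]:
    """Pick between min_k and max_k passages, best first.

    `min_score` is an assumed cutoff for what counts as "highly relevant".
    """
    ranked = sorted(scored, key=lambda item: item[1], reverse=True)
    strong = [text for text, score in ranked if score >= min_score]
    if len(strong) < min_k:
        # Too few strong hits: fall back to the top min_k regardless of score.
        return [text for text, _ in ranked[:min_k]]
    return strong[:max_k]


if __name__ == "__main__":
    hits = [("intro", 0.91), ("setup", 0.84), ("faq", 0.32), ("changelog", 0.12)]
    print(select_passages(hits))
```

The fallback keeps the prompt from being starved of context when the retriever returns few strong matches, and the cap keeps weak passages from diluting it.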

Choosing Between 120B and 20B

Select GPT-OSS-120B when:

- Your application requires superior first-pass accuracy on complex reasoning tasks

- You need to orchestrate multi-tool chains with minimal supervision

- Extended context windows (>32K tokens) are essential

- You have access to 80GB+ class hardware

Choose GPT-OSS-20B when:

- Running on laptops or edge devices with limited resources

- Fast iteration and development speed are priorities

- Privacy requirements mandate local execution

- Cost-effectiveness outweighs marginal performance gains

Frequently Asked Questions (FAQ)

What VRAM do I need for GPT-OSS-20B?

Minimum 16GB VRAM enables basic operation with quantized weights. For comfortable usage with longer contexts and tool integration, 24GB provides necessary headroom. Apple Silicon users need 16-24GB unified memory.

When should I choose GPT-OSS-120B over 20B?

Choose the 120B model when accuracy on complex reasoning tasks justifies the hardware investment. Applications requiring multi-step planning, sophisticated tool orchestration, or handling ambiguous instructions benefit from the larger model's capabilities.

Can I run GPT-OSS-20B offline with Nut Studio?

Yes, Nut Studio supports complete offline operation after initial model download. The application manages local model storage and inference without requiring internet connectivity.

Where can I find a safe GPT-OSS 20B download and model card?

Official sources include OpenAI's model repository, Hugging Face's model hub with verified badges, and established tools like Nut Studio that verify model integrity. Always check model cards for licensing terms and performance characteristics.
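If the download source publishes a SHA-256 checksum for the weight files, you can verify integrity yourself before loading them. This is a generic sketch using Python's standard library; the function names are illustrative, and the expected hash must come from the page you downloaded from.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large weight files never load fully into RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_hash(path: Path, expected_hex: str) -> bool:
    """Compare a file against the checksum listed by its download source."""
    return sha256_of_file(path) == expected_hex.strip().lower()
```

A mismatch usually means a truncated or corrupted download, so re-fetch the file before investigating further.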

Conclusion

The release of OpenAI's gpt-oss-120B and gpt-oss-20B models brings new options for LLMs. By shifting from cloud-based APIs to open-weight models optimized for local environments, OpenAI is empowering a new wave of developers, researchers, and everyday users.

The gpt-oss-120B model brings datacenter-level performance to local hardware, making it an ideal choice for complex tasks and professional applications, while the gpt-oss-20B offers robust capabilities on consumer-grade devices. This tiered approach provides flexibility, allowing you to choose the right model based on your hardware and project needs.

Whether you're looking for a simple, one-click setup with Nut Studio, prefer the command-line control of Ollama, or require the high throughput of vLLM for production, these models are designed to fit a wide range of use cases. As the open-source AI ecosystem continues to evolve, their permissive Apache 2.0 license and local-first design make them a solid foundation to build on.

Download Nut Studio and access GPT-OSS models - completely free!
