Want to run DeepSeek R1 locally without any coding? This 2025 guide shows you the easiest way to install and use DeepSeek on your own device. Whether you're on Windows, Mac, or Linux, you'll learn how to pick the right version, install it in one click, and keep all your data private.

CONTENT:

- Why Run DeepSeek Locally? DeepSeek Local vs. Online

- How to Choose the Right Local DeepSeek R1 Version

- [No Coding] How to Run DeepSeek R1 Locally on Windows

- How to Set Up DeepSeek Locally on Mac/Linux

- What Are the Requirements for Running DeepSeek Locally?

- FAQs About DeepSeek Local Deployment

- Conclusion

Why Run DeepSeek Locally? DeepSeek Local vs. Online

If you've been using open-source AI tools like DeepSeek, you've probably wondered: Is my data really safe? That's where running DeepSeek locally comes in. Instead of sending your private chats to the cloud, you can keep everything on your own device.

Apart from data privacy, what else sets DeepSeek Local apart from the online version? Let's break it down:

| Feature | DeepSeek Local Model | DeepSeek Online Model |
|---|---|---|
| Cloud Computing | No | Yes |
| Cost | Free (apart from hardware and electricity) | May charge for usage or tokens |
| Data Privacy | Yes (data stays on your device) | No (data is sent to the cloud) |
| Offline Access | Yes | No |
| Easy to Use | No (requires coding or a tool like Nut Studio) | Yes |
| Latency | No network latency (speed depends on your hardware) | Depends on internet speed |
| Hardware Requirement | Yes (requires RAM/CPU/GPU) | No |
| Customization | Yes (train with your own data, build a knowledge base) | No |

Run DeepSeek R1 in one click with Nut Studio. No coding, no limits.

Key Takeaways:

- DeepSeek local keeps your data private, works offline, and has no cloud fees. It needs good hardware and some setup. Online DeepSeek is easier to use but depends on the internet and sends your data to the cloud.

- If you want privacy and to customize AI with your own data, run DeepSeek locally. It's great for developers and privacy fans. For casual users, the online version is simpler but less private.

How to Choose the Right Local DeepSeek R1 Version

If you want to run DeepSeek-R1 locally, you might see many versions and feel confused. Each version has a different size and power. Picking the right one depends on what you want to do and your computer's strength.

Here is a simple guide to help you choose the best DeepSeek R1 local model for your needs:

| DeepSeek R1 Version | Best For | Use Case Example | User Type |
|---|---|---|---|
| DeepSeek R1 1.5B | Basic chats, quick tasks | Check math homework, journal notes | Students, beginners |
| DeepSeek R1 7B | Q&A, summaries, multi-topic conversations | FAQ bot, PPT outline, blog idea sketch | Casual creators, chatbot builders |
| DeepSeek R1 8B | Better chat and logic tasks | Explain code, compare options, short advice | Side project devs, logic users |
| DeepSeek R1 14B | Long texts, deep analysis | Write articles, summarize research, teach | Researchers, educators, analysts |
| DeepSeek R1 32B | Advanced writing, long dialogs | Strategy planning, team memo Q&A | Data teams, consultants, advanced coders |
| DeepSeek R1 70B | Most powerful, for deep semantic and creative tasks | Story writing, long-form brainstorming | Enterprises, advanced labs, AI researchers |

* The number after the version name (like 1.5B or 70B) is the model's parameter count in billions. More parameters generally mean a stronger model, but also higher memory and compute requirements.
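To get a feel for how parameter count translates into memory, here is a rough back-of-the-envelope sketch. It assumes the only cost is storing the weights (parameters × bits per weight ÷ 8) and ignores context cache and runtime overhead, so real usage will be higher. The function name is made up for illustration.

```python
def estimate_weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 10**9 bytes).

    Assumption: this ignores the context (KV) cache and runtime overhead,
    so treat the result as a lower bound, not an exact requirement.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Compare unquantized 16-bit weights with a 4-bit quantized build.
for size in (1.5, 7, 8, 14, 32, 70):
    fp16 = estimate_weight_memory_gb(size, 16)
    q4 = estimate_weight_memory_gb(size, 4)
    print(f"{size}B: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

By this estimate, a 70B model needs about 35 GB for its weights alone at 4 bits per weight, which is why the largest versions call for multi-GPU setups.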

Key Takeaways:

- Stick with 1.5B or 7B if you want fun chats, basic Q&A, or quick text work—your laptop or entry-level desktop can easily handle these models.

- The 14B model gives you solid performance for writing longer text or more in‑depth answers. Most modern PCs with mid‑range GPUs can run it comfortably.

- The 32B and 70B models are suited to story writing, long dialogue, and in-depth brainstorming, and are often cited among the best LLMs for writing when you need both depth and creativity. But they are not realistic for most home users: they require beefy multi-GPU rigs with dozens of GB of VRAM. As one Reddit user said:

"To run 70B at a decent quant, you'd want at least 48 GB VRAM… consider building a PC with 2 x used 3090"

[No Coding] How to Run DeepSeek R1 Locally on Windows

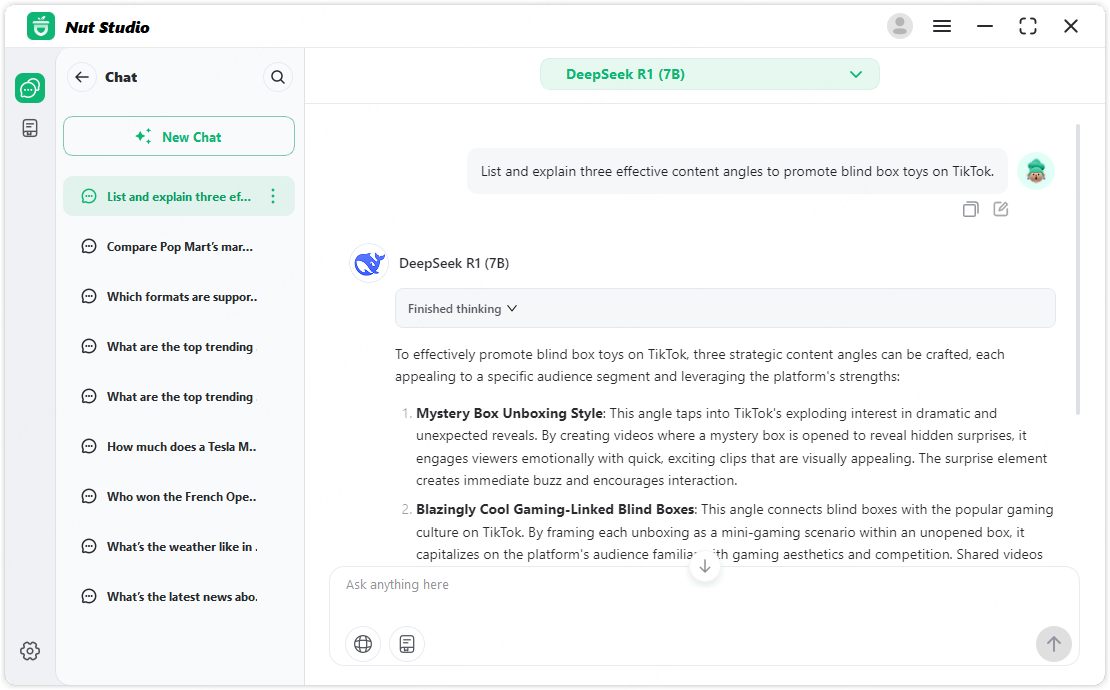

Nut Studio is a free tool for local LLMs. It offers the easiest way to install DeepSeek R1—just download, click once, and you're set. No tech skills needed. In minutes, you'll be running your own DeepSeek R1 model on your PC. You can build a custom knowledge base, and your data stays 100% on your device. Nut Studio currently supports Windows only. Mac or Linux users can use Ollama to run DeepSeek locally.

Step 1: Download and Install Nut Studio

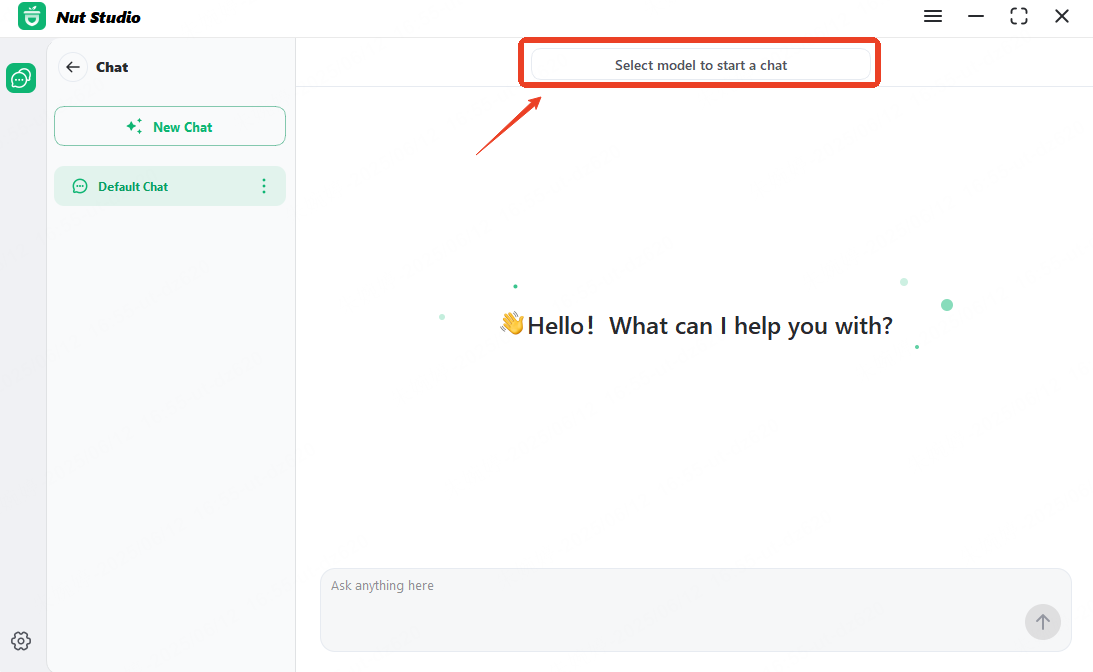

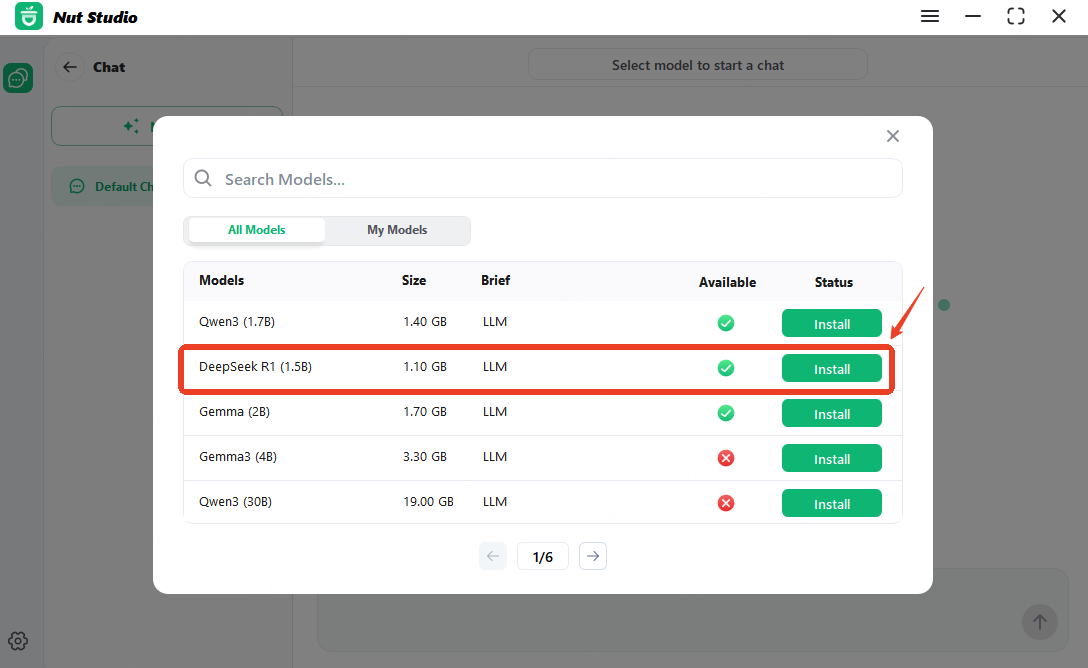

Step 2: Open the top control bar, click "Select model to start a chat", and pick a DeepSeek R1 version to install.

Step 3: Wait a minute—then start chatting and customizing your DeepSeek R1 model. Everything runs locally, and your data stays private.

How to Set Up DeepSeek Locally on Mac/Linux

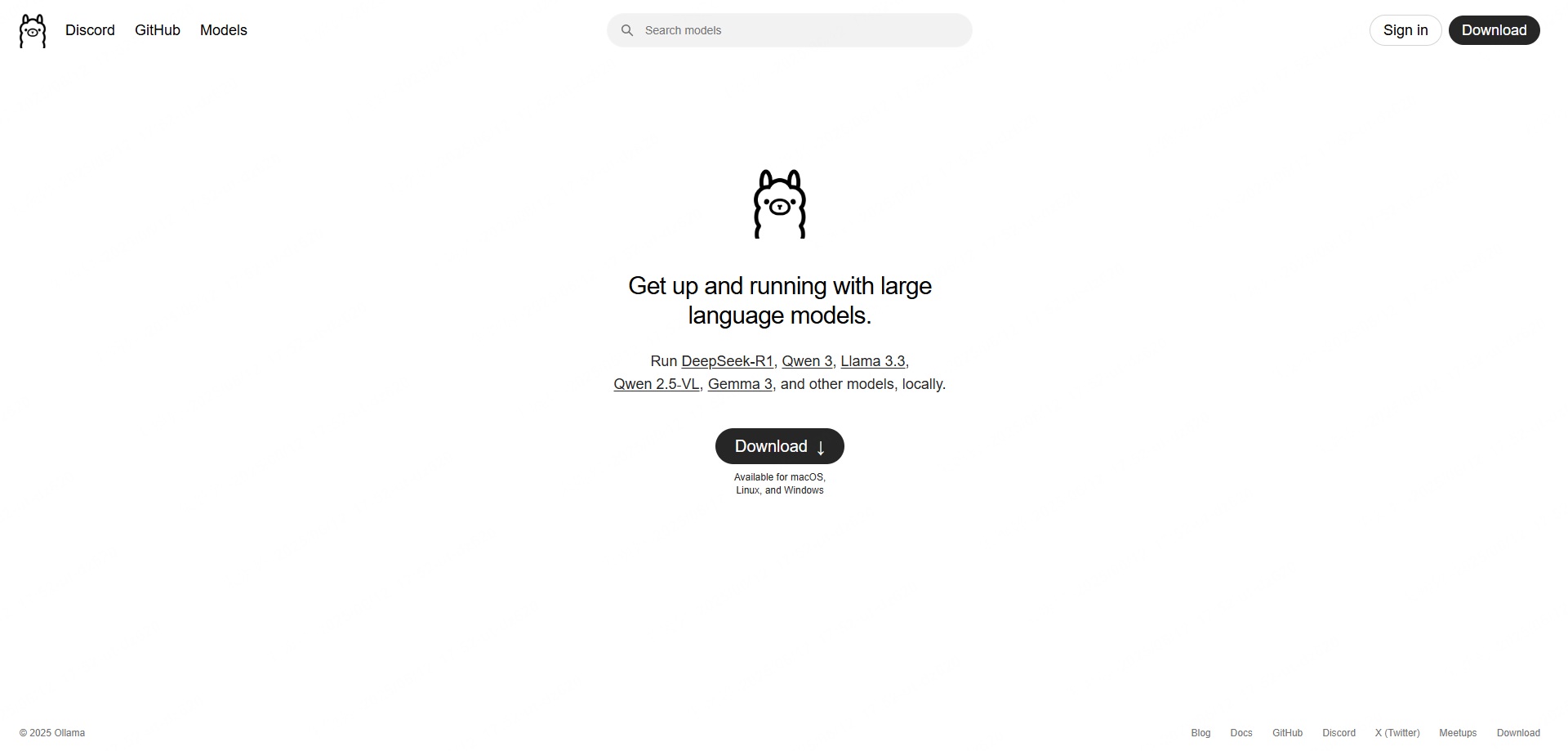

Want to install DeepSeek locally on a Mac or Linux system? Try Ollama — a simple yet powerful tool for running large language models (LLMs) on your device. It supports DeepSeek R1 models from 1.5B all the way up to 70B parameters. You can even import GGUF models into Ollama for extra flexibility. Best of all, it works across macOS, Linux, and Windows.

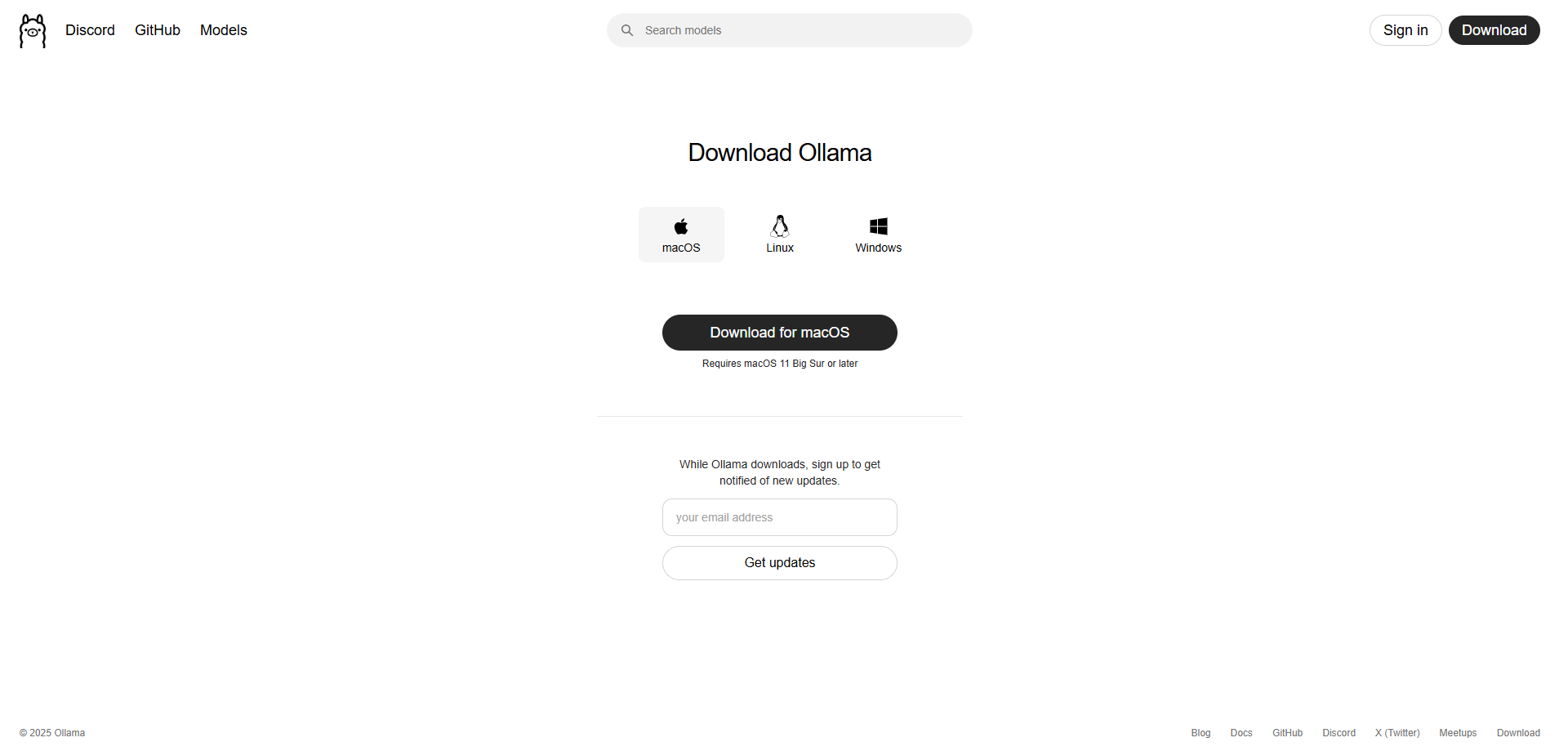

Step 1: Visit ollama.com and choose your OS to download the installer.

For macOS, it comes as a .zip file — double-click to unzip, then move the Ollama app to your Applications folder.

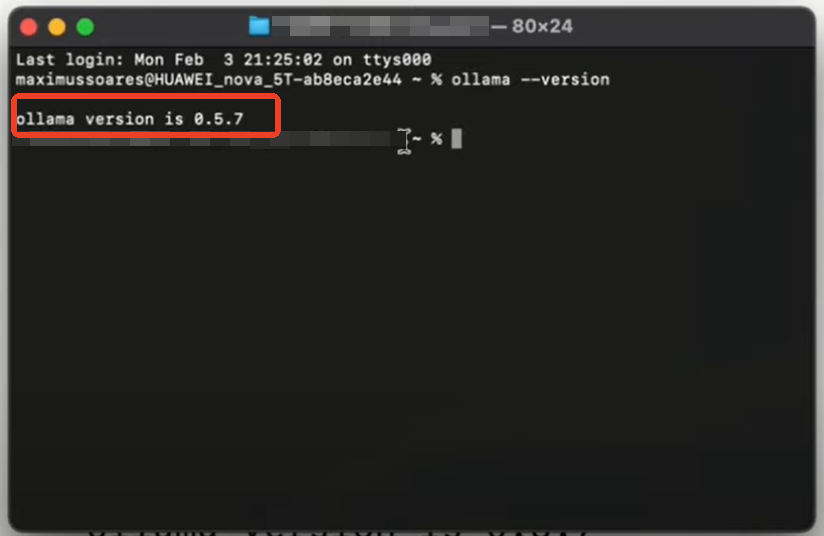

Step 2: Check the installation

Open Terminal and run the following command to check if Ollama is installed:

ollama --version

If it returns the version number, you're good to go.
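If you'd rather run the same check from a script (say, as part of a setup routine), a minimal Python sketch could look like this. It only wraps the `ollama --version` command shown above; the helper name `ollama_version` is hypothetical.

```python
import shutil
import subprocess
from typing import Optional


def ollama_version(binary: str = "ollama") -> Optional[str]:
    """Return the output of `<binary> --version`, or None if it can't be run."""
    # shutil.which returns None when the executable is not on PATH.
    if shutil.which(binary) is None:
        return None
    try:
        result = subprocess.run(
            [binary, "--version"], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    # Some tools print version info to stderr, so fall back to it.
    return result.stdout.strip() or result.stderr.strip()


version = ollama_version()
print(version if version else "Ollama not found on PATH")
```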

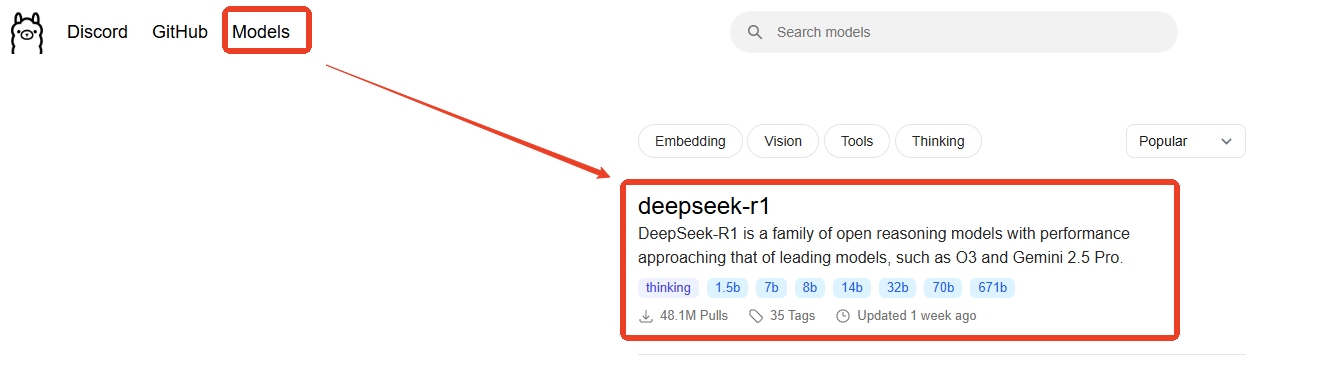

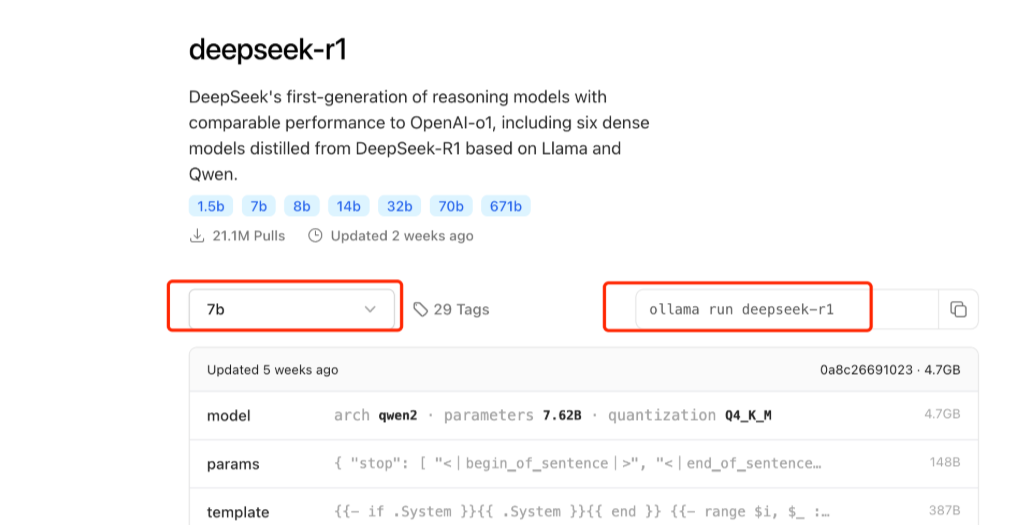

Step 3: Install and run DeepSeek R1

Go to the Ollama model library and choose a DeepSeek R1 version that matches your hardware. Then, in the terminal, run:

ollama run deepseek-r1:7b

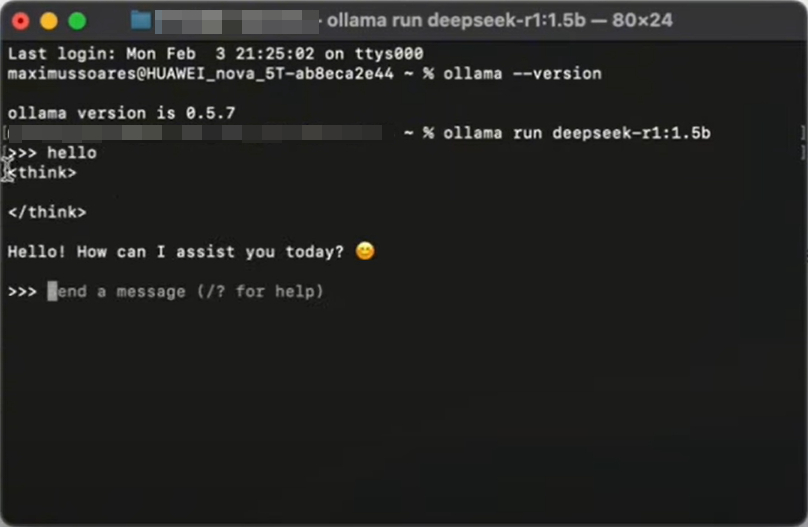

Step 4: Ollama will download and launch the model. Once installed, you can start chatting directly in your terminal.
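Besides the terminal chat, Ollama also exposes a local HTTP API, so you can send prompts from your own scripts. The sketch below assumes Ollama is running on its default address (`http://localhost:11434`) and that you pulled the `deepseek-r1:7b` tag as in Step 3. The helper names are illustrative, not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "deepseek-r1:7b") -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    # stream=False asks for one complete JSON reply instead of a token stream.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "deepseek-r1:7b") -> str:
    """Send the prompt to the local model and return its text reply."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=300) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]


# Example (requires Ollama to be running with the model already downloaded):
# print(ask("Explain what a quantized model is in two sentences."))
```

The request never leaves your machine, so the privacy benefits of running locally still apply.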

Ollama runs in the command line and doesn't come with a GUI. If you're on Windows, we recommend using Nut Studio, which provides a full chat interface and auto hardware detection for DeepSeek deployment. You can also explore more Ollama alternatives if you prefer a GUI-based experience across platforms.

What Are the Requirements for Running DeepSeek Locally?

Downloading DeepSeek R1 and running it locally on a PC gives you full control over your AI model, but not every version runs on every device. Each version, from the lightweight 1.5B to the massive 32B, has different hardware needs. Below is a clear breakdown of the minimum and recommended system requirements for each DeepSeek R1 version across Windows, macOS, and Linux. If you're unsure what your PC can handle, scroll down for a simple solution.

Local DeepSeek R1 1.5B — System Requirements

| System | Minimum Requirements | Recommended Requirements |
|---|---|---|
| Windows | CPU: Intel i5/Ryzen 5; RAM: 8GB; GPU: NVIDIA GTX 1650 (4GB) | CPU: Intel i7/Ryzen 7; RAM: 16GB; GPU: NVIDIA RTX 3060 (12GB) |
| macOS | M1/M2 chip (8GB) | M1 Pro/Max or M3 chip (16GB+) |
| Linux | CPU: 4-core; RAM: 8GB; GPU: NVIDIA T4 (16GB) | CPU: 8-core; RAM: 16GB; GPU: NVIDIA RTX 3090 (24GB) |

Local DeepSeek R1 7B/8B — System Requirements

| System | Minimum Requirements | Recommended Requirements |
|---|---|---|
| Windows | CPU: Intel i7/Ryzen 7; RAM: 16GB; GPU: NVIDIA RTX 3060 (12GB) | CPU: Intel i9/Ryzen 9; RAM: 32GB; GPU: NVIDIA RTX 4090 (24GB) |
| macOS | M2 Pro/Max chip (32GB) | M3 Max chip (64GB+) |
| Linux | CPU: 8-core; RAM: 32GB; GPU: NVIDIA RTX 3090 (24GB) | CPU: 8-core; RAM: 16GB; Multiple GPUs (e.g. 2x RTX 4090) |

Local DeepSeek R1 14B — System Requirements

| System | Minimum Requirements | Recommended Requirements |
|---|---|---|
| Windows | RAM: 32GB; GPU: NVIDIA RTX 3090 (24GB) | RAM: 64GB; GPU: NVIDIA RTX 4090 with quantized model |
| macOS | M3 Max chip (64GB+) | Limited to quantized version with restricted performance |
| Linux | GPU: 2x RTX 3090 (via NVLink); RAM: 64GB | Multiple GPUs (e.g. 2x RTX 4090, 48GB); RAM: 128GB |

Local DeepSeek R1 32B — System Requirements

| System | Minimum Requirements | Recommended Requirements |
|---|---|---|
| Windows | Not recommended (insufficient VRAM) | Requires enterprise GPU (e.g. RTX 6000 Ada) |
| macOS | Not supported (hardware limits) | Use cloud API instead |
| Linux | GPU: 4x RTX 4090 (48GB VRAM each); RAM: 128GB | Professional GPU (e.g. NVIDIA A100 80GB); RAM: 256GB + PCIe 4.0 SSD |

Source: CSDN

Key Takeaways:

- Small models (1.5B–7B) run smoothly on most gaming desktops with 8–32 GB RAM and an RTX 3060 or better GPU. These are great for students, casual users, or anyone trying LLMs for the first time.

- Mid-range models (8B–14B) need more power—3080+ GPUs and up to 64 GB RAM. Ideal for educators, researchers, or AI enthusiasts looking to do deeper work.

- Large models (32B+) are not practical for personal PCs. They require enterprise-level GPUs and 128–256 GB RAM, so they're best for labs, cloud deployments, or enterprise teams.

- Quantized models can lower hardware needs while keeping solid performance—handy for home setups or lightweight machines.
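As a rough illustration of these takeaways, the sketch below picks the largest model whose minimum GPU memory fits a given card. The thresholds are copied from the Windows "minimum" rows in the tables above, and the `deepseek-r1:*` tags are the names used in the Ollama model library. The helper itself is hypothetical. The 32B model is left out because the tables call for multi-GPU or enterprise hardware.

```python
from typing import Optional

# Minimum GPU memory per model, in GB, taken from the Windows rows of the
# requirement tables above. Ordered from smallest to largest model.
MIN_VRAM_GB = [
    ("deepseek-r1:1.5b", 4),   # GTX 1650 (4GB)
    ("deepseek-r1:7b", 12),    # RTX 3060 (12GB)
    ("deepseek-r1:14b", 24),   # RTX 3090 (24GB)
]


def largest_model_for_vram(vram_gb: float) -> Optional[str]:
    """Return the largest listed model whose minimum VRAM fits, or None."""
    best = None
    for tag, needed_gb in MIN_VRAM_GB:
        if vram_gb >= needed_gb:
            best = tag
    return best


for vram in (2, 4, 8, 12, 24):
    print(f"{vram} GB ->", largest_model_for_vram(vram))
```

Quantized builds can shift these thresholds down, so treat the result as a conservative starting point and not a hard limit.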

Nut Studio can automatically detect your hardware and recommend compatible models.

No need to check specs manually — just install and run the right version with one click.

FAQs About DeepSeek Local Deployment

1. Is running DeepSeek locally safe?

Yes. Running DeepSeek locally is secure because everything stays on your own device. Your data never gets uploaded to the cloud. This makes it a safer option—especially when building a private knowledge base with personal or sensitive files.

2. Is DeepSeek free to run locally?

Yes, DeepSeek R1 models are free to download and run locally, thanks to their open-source licenses. You don't need to pay for the models themselves. But remember—running DeepSeek locally may cost electricity and depends on your hardware power (especially GPU and RAM). Tools like Nut Studio let you deploy DeepSeek without coding or extra setup fees.

3. Can you use DeepSeek without internet?

Yes, once DeepSeek R1 is installed, it runs fully offline. You can chat, fine-tune, and run prompts with no internet connection. This is ideal if you care about data privacy or just want an AI assistant that works without cloud access.
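If you use Ollama, it's worth confirming that the model is fully downloaded before you go offline. One way is to ask Ollama's local `/api/tags` endpoint which models are installed. This is a minimal sketch assuming the default address `http://localhost:11434`; the helper names are made up for illustration.

```python
import json
import urllib.request
from typing import List

# Assumption: Ollama is listening on its default local port.
TAGS_URL = "http://localhost:11434/api/tags"


def model_names(tags_response: dict) -> List[str]:
    """Extract model names from a parsed /api/tags response."""
    return [entry["name"] for entry in tags_response.get("models", [])]


def is_installed(tags_response: dict, model: str) -> bool:
    """True if the given model tag appears in the installed-model list."""
    return model in model_names(tags_response)


def fetch_tags(url: str = TAGS_URL) -> dict:
    """Query the local Ollama server for its installed models."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


# Example (requires Ollama to be running):
# print(is_installed(fetch_tags(), "deepseek-r1:7b"))
```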

4. What GPU do you need to run DeepSeek?

That depends on which DeepSeek R1 version you want. For example:

- 1.5B: GTX 1650 or better

- 7B: RTX 3060 or better

- 14B: RTX 3080 or 3090

- 32B+: Requires A100 or multiple GPUs

You can check our full comparison table above. If you're unsure, Nut Studio can auto-detect your setup and suggest the best DeepSeek model your PC can handle.
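If you have an NVIDIA card and want to check its memory yourself, the `nvidia-smi` tool that ships with the driver can report it. Here is a small sketch that calls it and parses the result. It assumes `nvidia-smi` is on your PATH and returns an empty list otherwise. The function names are illustrative.

```python
import shutil
import subprocess
from typing import List


def parse_vram_mib(smi_output: str) -> List[int]:
    """Parse one memory value (in MiB) per line, skipping non-numeric lines."""
    values = []
    for line in smi_output.splitlines():
        text = line.strip()
        if text.isdigit():
            values.append(int(text))
    return values


def detect_nvidia_vram_mib() -> List[int]:
    """Return total memory per NVIDIA GPU in MiB, or [] if it can't be read."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=memory.total",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, timeout=10,
        )
    except (OSError, subprocess.TimeoutExpired):
        return []
    if result.returncode != 0:
        return []
    return parse_vram_mib(result.stdout)


print(detect_nvidia_vram_mib())
```

Divide the reported MiB by 1024 to compare against the GB figures in the list above.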

5. Do you need a GPU to run DeepSeek?

No, not always. Some smaller models (like DeepSeek R1 1.5B) can run on CPU only, though performance will be slower. For real-time response or smooth use, a dedicated GPU is recommended.

Conclusion

With the right setup, you can run DeepSeek locally for faster performance and full data control. Nut Studio makes the process easy—just one click to download, install, and start chatting. It's the best way to enjoy local AI without coding.
