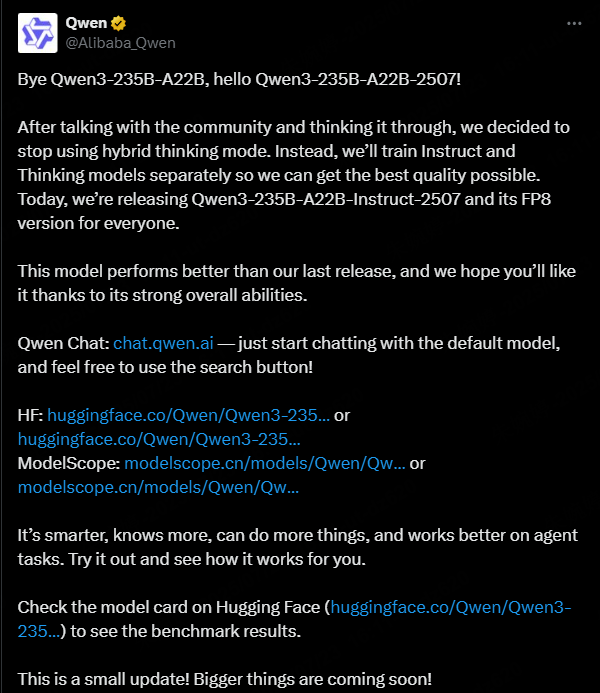

Qwen3-235B-A22B just made waves again. On July 22, 2025, Alibaba's Qwen team rolled out a major update: Qwen/Qwen3-235B-A22B-Instruct-2507. This upgrade didn't just boost performance — it reminded everyone that Qwen3 is still one of the most powerful open-source models around.

Since that release, interest in the full Qwen3 lineup has surged. From the massive 235B version to the lightweight Qwen3-0.6B, each model offers something unique. Today, I’ll walk you through how these models compare, and which one might be right for your setup.

What is Qwen3?

So, what is Qwen3 exactly? You can think of it as the latest and smartest generation of Alibaba's open-source large language models — built to reason, write, translate, and even code. I've followed the Qwen series for a while, and Qwen3 feels like a serious step forward. Not just a faster model, but a much more thoughtful one.

Qwen started back in 2023 under the name Tongyi Qianwen, mostly focused on Chinese language tasks and daily conversations. Then came Qwen2 and Qwen2.5, which pushed further into coding and logic tasks. But it was Qwen3, released in April 2025, that really changed the game. The team reworked the architecture and expanded the training data to roughly 36 trillion tokens covering 119 languages and dialects. And yes, they added support for up to 128K tokens of context on the larger models, which is a big help if you're dealing with long documents, chats, or knowledge bases.

Now, is Qwen3 a reasoning model? Absolutely. It scored 76.6 on MMLU and 83.9 on GSM8K, which puts it right up there with the best. I've personally tested it with step-by-step problem-solving prompts, and it doesn't just guess; it reasons. You can even switch between a fast direct-response mode (which Qwen calls non-thinking) and a slower step-by-step mode (thinking), depending on how deep you want the reasoning to go.
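As a rough illustration of that mode switch: the hybrid Qwen3 models accept the soft switches `/think` and `/no_think` inside a user message. The helper below is a minimal sketch; the function name `with_thinking_switch` is made up for this example, and the July Instruct-2507 update is, as far as I know, a non-thinking-only model, so the switch applies to the original hybrid checkpoints.

```python
def with_thinking_switch(prompt: str, think: bool) -> str:
    """Append Qwen3's soft switch so one turn asks for, or skips, step-by-step reasoning."""
    tag = "/think" if think else "/no_think"
    return f"{prompt.rstrip()} {tag}"


# A chat history in the common role/content format used by most local runtimes.
messages = [
    {"role": "user", "content": with_thinking_switch("What is 17 * 23?", think=True)},
]

print(messages[0]["content"])
```

If you load the model with Hugging Face Transformers instead, the chat template exposes the same choice through the `enable_thinking` argument of `apply_chat_template`.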

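What's also interesting is how Qwen3 is split into two families: dense models (like the 0.6B or 1.7B versions) and Mixture-of-Experts (MoE) models (like the 30B-A3B and the huge 235B-A22B). In the MoE names, the "A" suffix is the number of parameters activated per token, so 235B-A22B has about 235B parameters in total but uses about 22B of them for each token. Each version has the same basic skills, but different strengths depending on the job, from chatbots on edge devices to research assistants on powerful GPUs.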
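To make the Mixture-of-Experts naming concrete, here is a small sketch that computes what share of the weights is active per token. It uses the nominal figures from the model names (235B/22B and 30B/3B); the exact parameter counts differ slightly, so treat the results as approximations.

```python
# Nominal (total, active) parameter counts taken from the model names.
MOE_MODELS = {
    "Qwen3-235B-A22B": (235e9, 22e9),
    "Qwen3-30B-A3B": (30e9, 3e9),
}


def active_fraction(total_params: float, active_params: float) -> float:
    """Share of the model's weights that take part in computing each token."""
    return active_params / total_params


for name, (total, active) in MOE_MODELS.items():
    print(f"{name}: about {active_fraction(total, active):.1%} of weights active per token")
```

Note that all of the weights still have to be loaded into memory; the smaller active count mainly reduces compute per token.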

Qwen3 Models Compared: 235B, 30B, 1.7B, and 0.6B

Qwen3 isn't just one model. The open-weight lineup spans eight sizes, and this guide focuses on four of them, each serving a different purpose. Here's a quick look:

| Model | Parameters | Context Length | Performance | Best Use |
|---|---|---|---|---|
| Qwen3-235B-A22B | 235B total (22B active) | 128K | SOTA | Replacing GPT-4, advanced RAG |
| Qwen3-30B-A3B | 30B total (3B active) | 128K | High | Mid-range GPU, coding & reporting |
| Qwen3-1.7B | 1.7B | 32K | Light | Entry-level PC, fast startup |
| Qwen3-0.6B | 0.6B | 32K | Ultra-light | Mobile apps, IoT, offline tools |
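For a rough sense of which model fits your hardware, you can estimate the size of the weights from the parameter count and the precision. This is a back-of-the-envelope sketch: it counts weights only (no KV cache or runtime overhead), and the 4-bit and 16-bit settings are just common examples, not something the models require.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone in gigabytes (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


# Nominal total parameter counts in billions, taken from the model names.
SIZES_BILLION = {
    "Qwen3-235B-A22B": 235,
    "Qwen3-30B-A3B": 30,
    "Qwen3-1.7B": 1.7,
    "Qwen3-0.6B": 0.6,
}

for name, size in SIZES_BILLION.items():
    fp16 = weight_memory_gb(size, 16)
    q4 = weight_memory_gb(size, 4)
    print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

For the MoE models, use the total parameter count rather than the active one, since every expert has to be resident in memory.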

1 Qwen3-235B-A22B

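This is the crown jewel of the Qwen3 lineup. The original model arrived with the Qwen3 launch in April 2025, and the updated Qwen3-235B-A22B-Instruct-2507 followed on July 22, 2025. Like the rest of the family, it was trained on well over 10T tokens. When I tested it on document QA and logic puzzles, it held context like a champ and even beat Claude 3 in long-form RAG queries.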

It's huge — you'll need powerful GPUs — but if you want GPT-4-level accuracy with open weights, this is it.

2 Qwen3-30B-A3B

The Qwen3-30B-A3B is my go-to model for local tasks. It runs smoothly on a decent RTX 3090 or better and works great for report generation, code editing, and creative writing.

Unlike smaller models, it rarely loses track of context. With support for inputs of up to 128K tokens, it's ideal for anyone needing a balance of power and efficiency.

When I use Nut Studio, I usually load this one first — switching between models takes one click.

3 Qwen3-1.7B

This one's perfect if you're just starting out. Qwen3-1.7B is small enough to run on most laptops and fast enough for daily tasks like chatting, copywriting, and content QA.

It's way more stable than Mistral 7B when it comes to holding tone and meaning. You don't need extra setup either — it just works out of the box with Nut Studio.

4 Qwen3-0.6B

Think of this as a tiny AI elf living in your phone. A quantized Qwen3-0.6B is under 1GB, but it can still summarize documents, translate text, or handle small-scale chat.

It’s great for edge devices and IoT tools. With Nut Studio, I've used it to build offline agents that switch between Qwen3, LLaMA, and DeepSeek in seconds.

How to Use Qwen3 Models Locally (With Ollama/Nut Studio)

When I first tried running Qwen3 locally, I thought it'd be a quick setup. But between model sizes, formats, and system requirements, it got confusing fast. If you're in the same boat, don't worry: running Qwen3 on your own machine, from the 0.6B model up to the 30B-class ones, is absolutely doable. Whether you prefer a simple GUI like Nut Studio or a terminal-based setup with Ollama, I'll walk you through both.

Method 1 Run Qwen3 with Nut Studio

If you're wondering how to run Qwen3 locally without touching code, I can't recommend Nut Studio enough. I used it to deploy Qwen3-30B on my PC, and the setup took under five minutes — seriously. No command line. No broken paths. Just install, select a model, and click run.

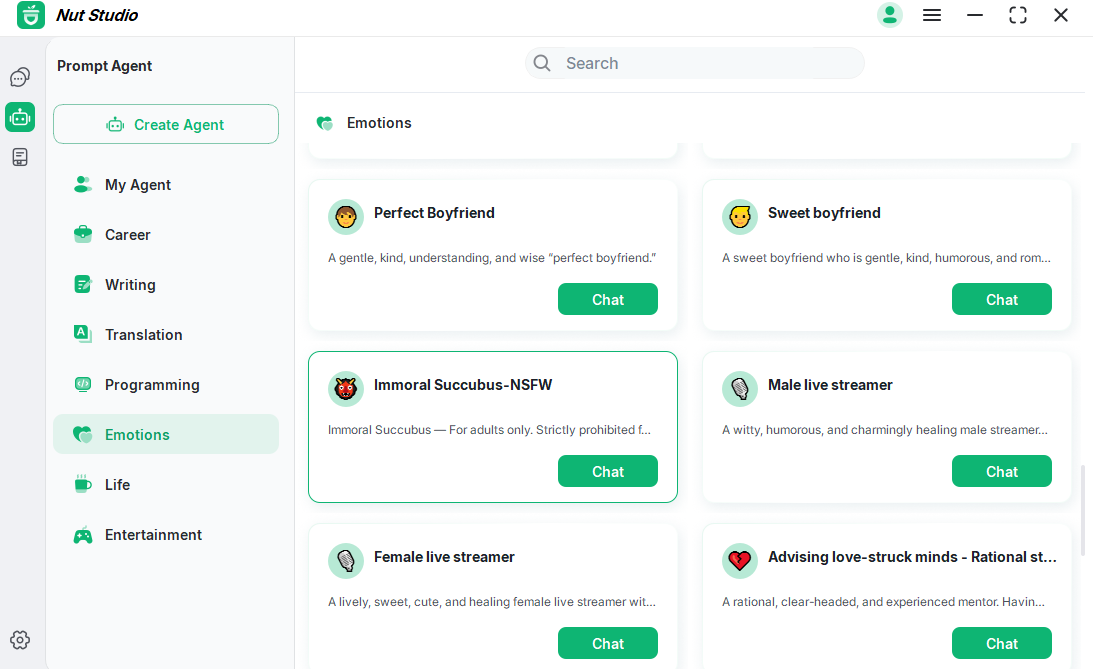

What surprised me most? Nut Studio doesn't just support Qwen3 — it works with over 50 different open-source models, making it a great all-in-one tool whether you're into story writing, productivity, or looking for the best AI models for roleplay.

- Built-in support for Qwen3-0.6B, 1.7B and 32B, plus many others like Mistral and LLaMA3

- Sliders for temperature, top-p, and context length

- Built-in prompt library and over 180 pre-made AI agents

- Runs smoothly even on mid-tier GPUs (tested with 1.7B and 30B)

Steps to run Qwen3 locally with Nut Studio:

Step 1: Download Nut Studio on your PC

Step 2: Choose your Qwen3 model version (I recommend starting with 1.7B if you're new)

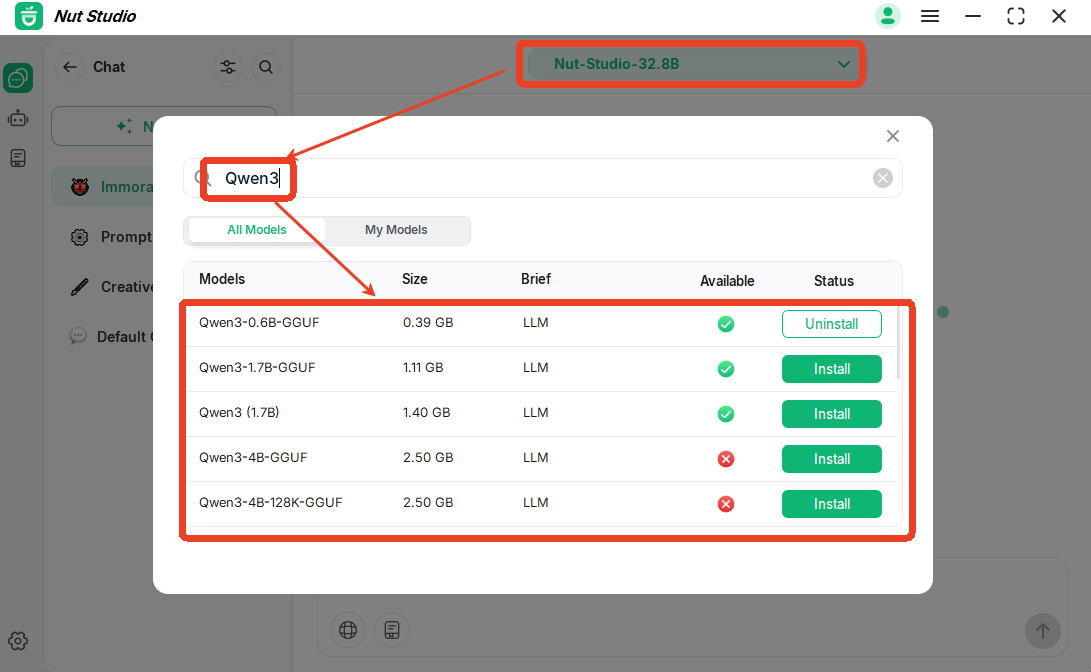

Click the top control bar and select "Choose model to start chat". Type "Qwen3" in the search box, choose the version you want, then click "Install" to start one-click deployment.

Step 3: Pick a built-in AI agent — or create your own

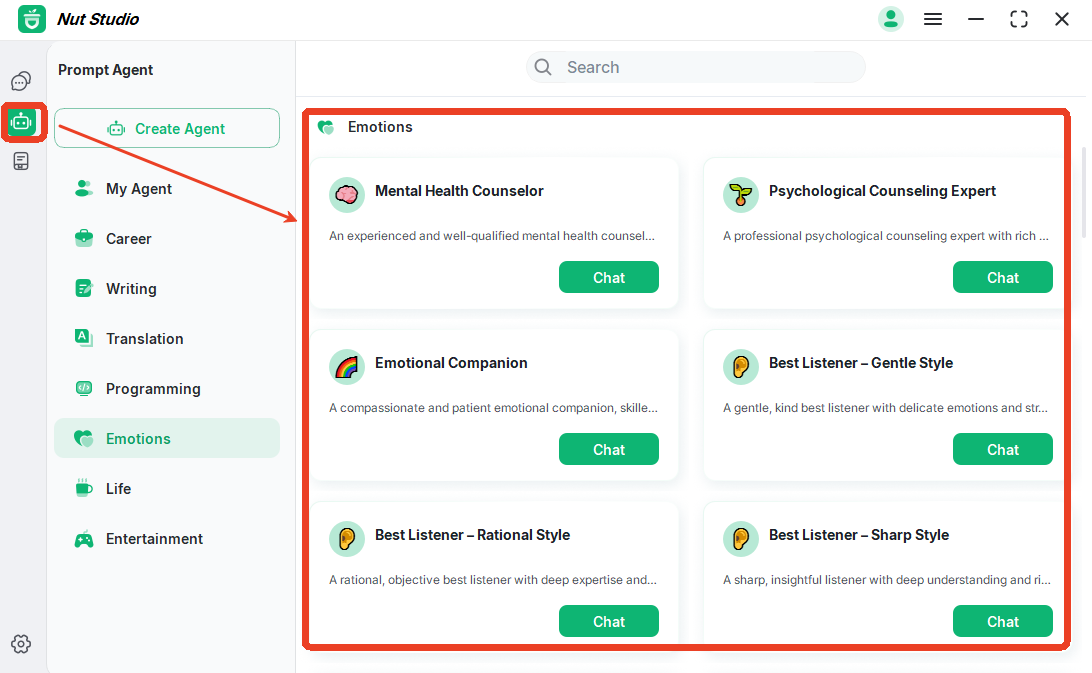

If you're not sure how to start chatting with a Qwen3 model, Nut Studio has 180+ built-in AI agents covering career, writing, emotions, and more.

Step 4: Fine-tune your AI with custom settings

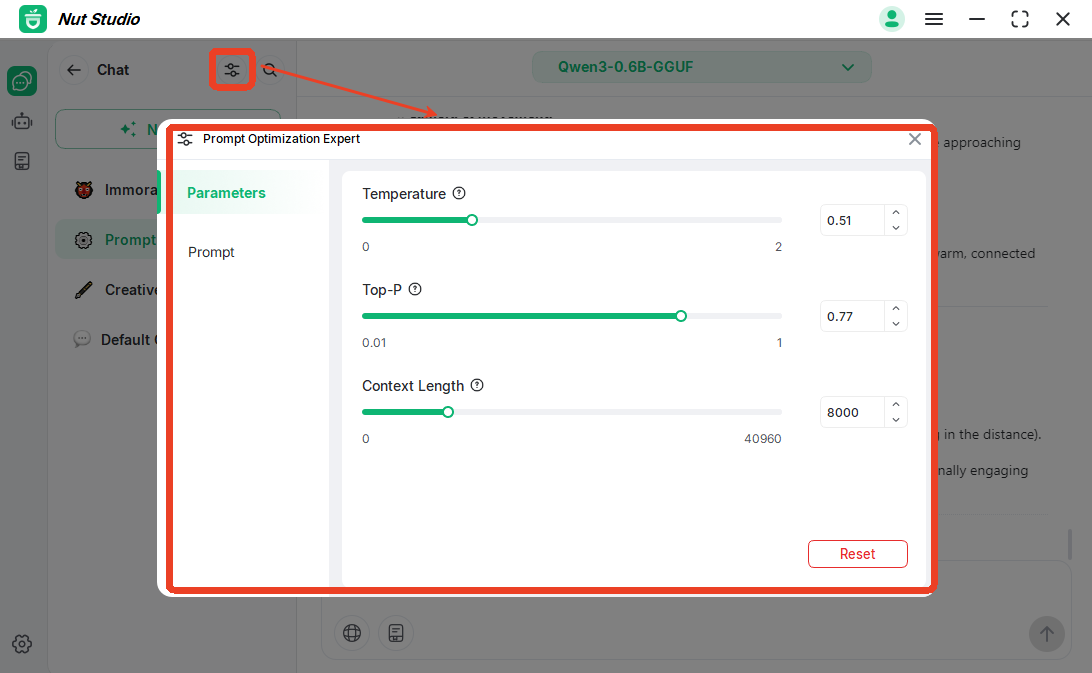

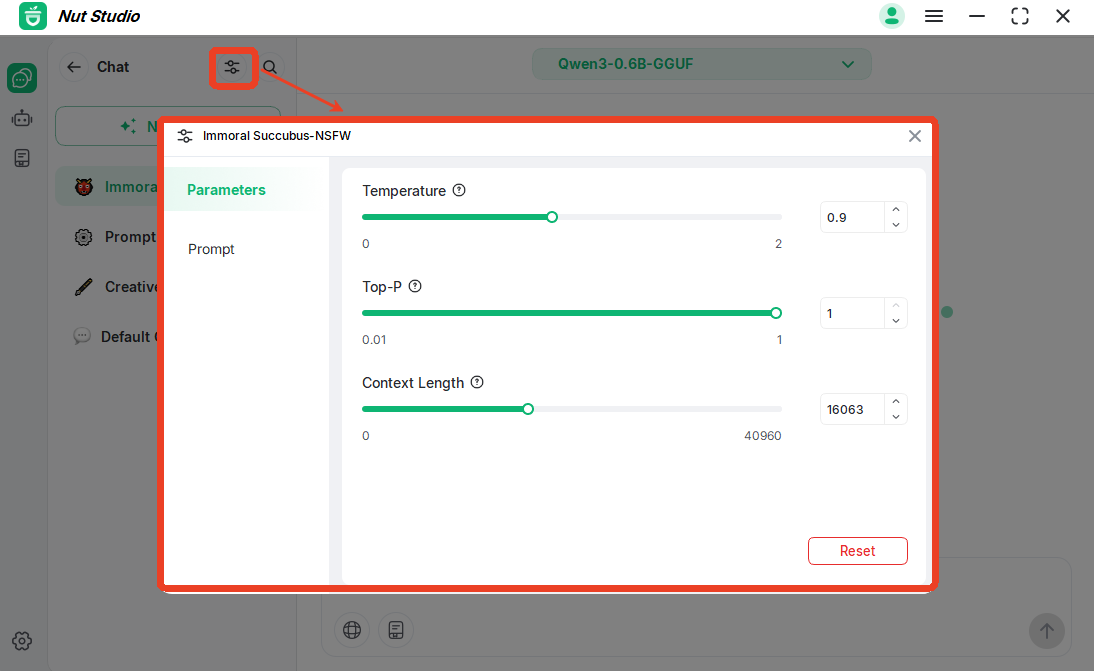

Tweak temperature, top-p, and other parameters to get the response style you want.

Method 2 Deploy Qwen3 with Ollama

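If you enjoy working in terminals and tweaking every detail, the Ollama + GGUF method gives you full control. I used it to test smaller models like Qwen3-0.6B on a Linux laptop, and it ran surprisingly well. But be warned: you'll need to:

- Install Ollama and keep it running in the background (Ollama itself doesn't need a Python or Conda environment, though you may want one for your own scripts)

- Pull a model from the Ollama library, or download a .gguf file from Hugging Face or similar and import it with a Modelfile

- Run `ollama run qwen3:<tag>` (for example, `ollama run qwen3:0.6b`) and set parameters such as temperature by hand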

This approach is best if you're comfortable editing scripts or running models with custom backends. It's flexible but time-consuming.
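Once Ollama is running and a model is pulled, you can also talk to it from a script through its local REST API. The sketch below assumes the default endpoint `http://localhost:11434/api/chat` and the `qwen3:0.6b` tag; the helper names `build_chat_request` and `chat` and the default sampling values are illustrative choices for this example, not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint


def build_chat_request(model: str, prompt: str,
                       temperature: float = 0.7, top_p: float = 0.8) -> dict:
    """Build the JSON body for a single-turn, non-streaming chat request."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON response instead of chunks
        "options": {"temperature": temperature, "top_p": top_p},
    }


def chat(payload: dict, url: str = OLLAMA_URL) -> str:
    """Send the request to a running Ollama server and return the reply text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["message"]["content"]


if __name__ == "__main__":
    payload = build_chat_request("qwen3:0.6b", "Explain Mixture-of-Experts in two sentences.")
    print(json.dumps(payload, indent=2))  # inspect the request before sending it
```

With the server up, calling `chat(payload)` returns the model's reply as a string; it raises a connection error if Ollama isn't running.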

If you're not sure how to install or run Ollama on your device, check out our step-by-step guide: How to Use Ollama on Windows and macOS.

Getting the Best Out of Qwen3: Prompting Tricks & Temperature Settings

Let's unlock the real power of Qwen3. Two things matter most: prompts and temperature.

What Is Temperature?

Think of temperature as Qwen3's personality dial. A low temperature (e.g. 0.1) makes it more serious and logical. A higher one (like 0.8) gives it more creative freedom — jokes, poems, even weird stuff.

When I want accurate answers (like for code or math), I set temperature to 0.2–0.3. For story writing or brainstorming? I push it to 0.7–0.9.

Top-p and max token limits also shape the result. Nut Studio makes all of this easy to change.
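If you're curious what these two dials do under the hood, here is a toy sketch of temperature scaling followed by top-p (nucleus) filtering. The four logits are made-up scores, not real Qwen3 outputs, and actual runtimes apply the same idea over a vocabulary of many thousands of tokens.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their combined mass reaches top_p, then renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    return {i: probs[i] / mass for i in kept}


logits = [2.0, 1.0, 0.5, 0.1]  # made-up scores for four candidate tokens
for temperature in (0.2, 0.8):
    probs = softmax_with_temperature(logits, temperature)
    print(f"T={temperature}:", [round(p, 3) for p in probs])

print("top-p 0.9 keeps tokens:", sorted(top_p_filter(softmax_with_temperature(logits, 0.8), 0.9)))
```

At the lower temperature almost all of the probability lands on the top-scoring token, which is why low settings feel more deterministic.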

Best Prompt Settings I Tested

Here are some tested setups that worked great for me:

| Use Case | Prompt Style | Temperature | Top-p | Notes |
|---|---|---|---|---|
| Coding Help | "You are a senior Python developer..." | 0.2 | 0.9 | Concise, factual replies |
| Creative Writing | "Act as a friendly novelist who..." | 0.8 | 1.0 | Rich language, big imagination |
| Translation | "Translate the following text into fluent..." | 0.3 | 0.85 | Precise, smooth translations |
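If you script your own setup, the table above maps naturally onto a small preset dictionary. This is only a sketch: the keys and the helper name `build_messages_and_options` are invented for the example, and the system prompts are kept truncated exactly as in the table, so you would complete them with your own wording.

```python
# Presets copied from the table; the prompts are intentionally left truncated.
PRESETS = {
    "coding": {"system": "You are a senior Python developer...", "temperature": 0.2, "top_p": 0.9},
    "creative": {"system": "Act as a friendly novelist who...", "temperature": 0.8, "top_p": 1.0},
    "translation": {"system": "Translate the following text into fluent...", "temperature": 0.3, "top_p": 0.85},
}


def build_messages_and_options(use_case: str, user_text: str):
    """Return (messages, sampling options) for one of the presets above."""
    preset = PRESETS[use_case]
    messages = [
        {"role": "system", "content": preset["system"]},
        {"role": "user", "content": user_text},
    ]
    options = {"temperature": preset["temperature"], "top_p": preset["top_p"]}
    return messages, options


messages, options = build_messages_and_options("coding", "Write a function that reverses a string.")
print(options)
```

The returned `messages` and `options` follow the role/content and sampling-parameter shape that most local runtimes accept, so they can be passed along with little or no change.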

Nut Studio's 180+ AI agents include predefined roles like Developer, Writer, Translator, even Therapist. These roles come with optimized system prompts and temperature settings — ready to go.

This means you don't have to write long prompts from scratch.

FAQs About Qwen3

1 What is Qwen3-A3B?

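It isn't a separate model. The "A3B" suffix in Qwen3-30B-A3B means roughly 3B parameters are activated per token out of about 30B in total, which is how this Mixture-of-Experts model balances reasoning quality and speed.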

2 How good is Qwen3 really?

It's among the top open models, beating Mixtral and Gemini Pro 1.5 in multilingual logic tests. Benchmarks back it up.

3 How to uninstall Qwen2.5?

Just remove the model files, or uninstall via Ollama (`ollama rm` followed by the model name) or Nut Studio. And yes, Qwen3 improves on Qwen2.5 in both performance and context length.

Conclusion

Whether you're running lightweight tasks or building advanced local RAG setups, the Qwen3 lineup has something for every use case. From the ultra-powerful Qwen/Qwen3-235B-A22B to the more accessible Qwen3-30B-A3B, these models offer top-tier performance with full local control. Paired with tools like Nut Studio or Ollama, running them is easier than ever — no cloud, no limits, just pure open-source power.
