
If you're exploring Ollama alternatives for Windows or Mac, you're not alone. Many users love what Ollama offers—but it's not perfect for everyone. Some want a faster tool, some need a better interface, and others just don't want to touch the terminal at all.

In this guide, I'll compare the top alternatives to Ollama across Windows, macOS, and Linux. Whether you're a total beginner or a pro coder, this will help you find the best fit for your device and skill level. Let's break it down!

CONTENT:

- What Is Ollama and Why Are Users Looking for Alternatives?

- How to Use Ollama on Windows and macOS?

- Nut Studio — Best Ollama Alternative for Windows

- LM Studio — Top Ollama Alternatives for Mac

- vLLM — Software Like Ollama for Linux

- Ollama vs vLLM vs Nut Studio vs LM Studio — Which Local LLM Tool Should You Pick?

- FAQs About Ollama Alternatives

- Conclusion

What Is Ollama and Why Are Users Looking for Alternatives?

What is Ollama exactly?

Ollama is an open-source tool that lets you run large language models (LLMs) directly on your local machine. It's known for its simple command-line interface: fast, clean, and easy to use once you get the hang of it. With a single command, you can download and run models like Code Llama, DeepSeek, Mistral, and Phi without setting up a complex environment. The model library on the Ollama website lists everything that's available. If you're exploring model customization, you can also import GGUF files into Ollama for even more control.
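For the GGUF import mentioned above, here is a minimal sketch in Python. It assumes Ollama's Modelfile workflow: a `FROM` line pointing at a local `.gguf` file, followed by `ollama create`. The file name `my-model.gguf`, the model name `my-model`, and both helper functions are hypothetical and only there for illustration.

```python
import tempfile
from pathlib import Path


def write_modelfile(gguf_path: str, directory: str) -> Path:
    """Write a one-line Modelfile that points Ollama at a local GGUF file."""
    modelfile = Path(directory) / "Modelfile"
    modelfile.write_text(f"FROM {gguf_path}\n", encoding="utf-8")
    return modelfile


def create_command(model_name: str, modelfile: Path) -> list[str]:
    """Build the `ollama create` command line without running it."""
    return ["ollama", "create", model_name, "-f", str(modelfile)]


# Hypothetical paths and names, for illustration only.
workdir = tempfile.mkdtemp()
modelfile = write_modelfile("./my-model.gguf", workdir)
command = create_command("my-model", modelfile)
print(" ".join(command))
```

Passing `command` to `subprocess.run` would register the model with Ollama, after which `ollama run my-model` should start it like any model from the library.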

But still, many users are now looking for an Ollama alternative. Why?

Well, for starters, Ollama is built around the command line, and its graphical interface is limited. That's fine if you're used to the terminal, but for beginners it can be a real barrier. It also hides most of its controls. Model settings, quantization variants, and model files are managed through commands, model tags, and configuration files rather than visible menus, so most of it happens behind the scenes. And because Ollama only runs models, you can't use it to fine-tune or train your own. That's why many users start looking for an Ollama alternative that's easier to use, more flexible, or simply offers a better interface.

Nut Studio is a simple Ollama alternative for Windows that runs local models with one click and no terminal.

How to Use Ollama on Windows and macOS?

Now that you know what Ollama is, let's walk through how to use Ollama on your device. The setup is simple if you're comfortable with the terminal—but it's not always beginner-friendly.

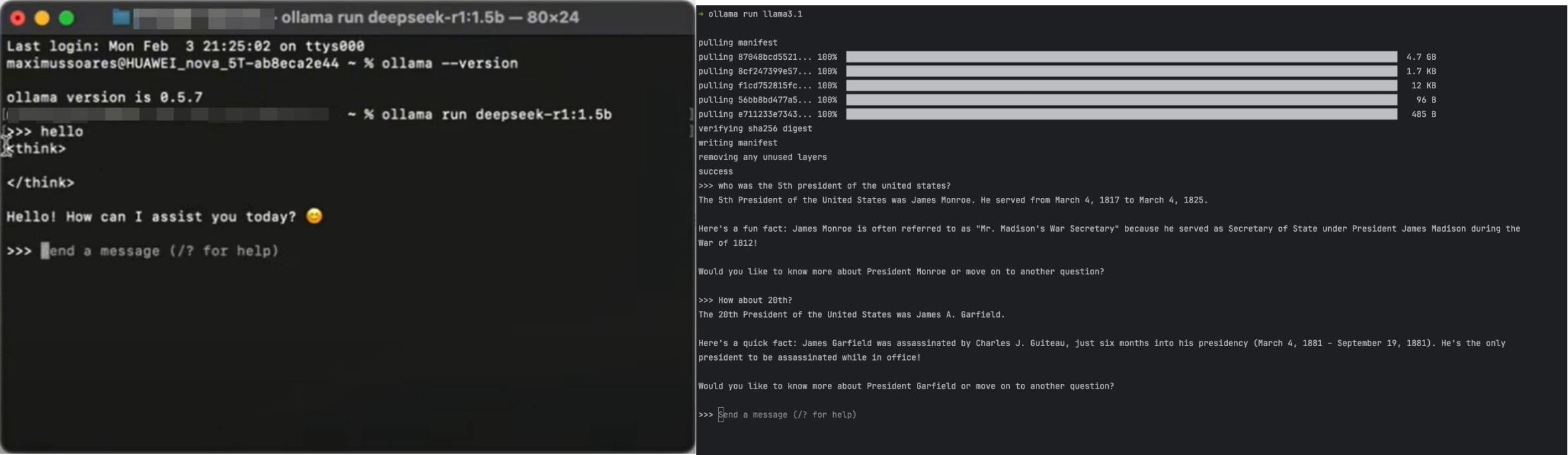

Here's how to use Ollama on macOS:

Step 1: Visit ollama.com and download the macOS installer. It comes as a .zip file—just unzip it and move the Ollama app into your Applications folder.

Step 2: Open Terminal and check if Ollama installed correctly by running:

ollama --version

If you see a version number, you're ready to go.

Step 3: Next, pick a model from the Ollama model library that fits your hardware, such as DeepSeek R1. Then run:

ollama run deepseek-r1:7b

Step 4: Ollama will download and launch the model. Once done, you can chat with the model right in your terminal.

Here's how to use Ollama on Windows:

Step 1: Go to ollama.com and download the Windows installer (.exe file). Double-click OllamaSetup.exe and follow the steps.

Step 2: Open Command Prompt by pressing Win + R, typing cmd, and hitting Enter. Check installation with:

ollama -v

Seeing the version number means the install was successful.

Step 3: Choose a model from the Ollama library—say Llama 3.1—and run:

ollama run llama3.1

Step 4: Ollama will download and launch the model. After that, you can chat directly inside the command prompt window.
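The same steps can also be scripted. The sketch below is one possible way to do it in Python, using only the two commands shown above (`ollama --version` and `ollama run`). The helper names are hypothetical, and the script assumes that `ollama` is on your PATH after installation.

```python
import shutil
import subprocess


def ollama_installed() -> bool:
    """Return True if an `ollama` executable is found on the PATH."""
    return shutil.which("ollama") is not None


def run_command(model: str) -> list[str]:
    """Build the command that downloads (if needed) and starts a model."""
    return ["ollama", "run", model]


def start_chat(model: str) -> int:
    """Check the install, then hand the terminal over to `ollama run`."""
    if not ollama_installed():
        print("Ollama was not found on PATH; install it from ollama.com first.")
        return 1
    # Step 2 from the guide: confirm the install by printing the version.
    subprocess.run(["ollama", "--version"], check=True)
    # Steps 3 and 4: download and launch the chosen model.
    return subprocess.run(run_command(model)).returncode
```

Calling `start_chat("deepseek-r1:7b")` or `start_chat("llama3.1")` would then open the same interactive chat you get by typing the command yourself.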

Once installed, Ollama works well if you're okay using the terminal. But if you're not familiar with command-line tools, it can be confusing. There's no visual interface, and even simple tasks like switching models or viewing results require typing commands.

That's why many users—especially beginners—end up looking for apps like Ollama for Windows or an Ollama alternative for Mac that's easier to use, more visual, and doesn't need any code.

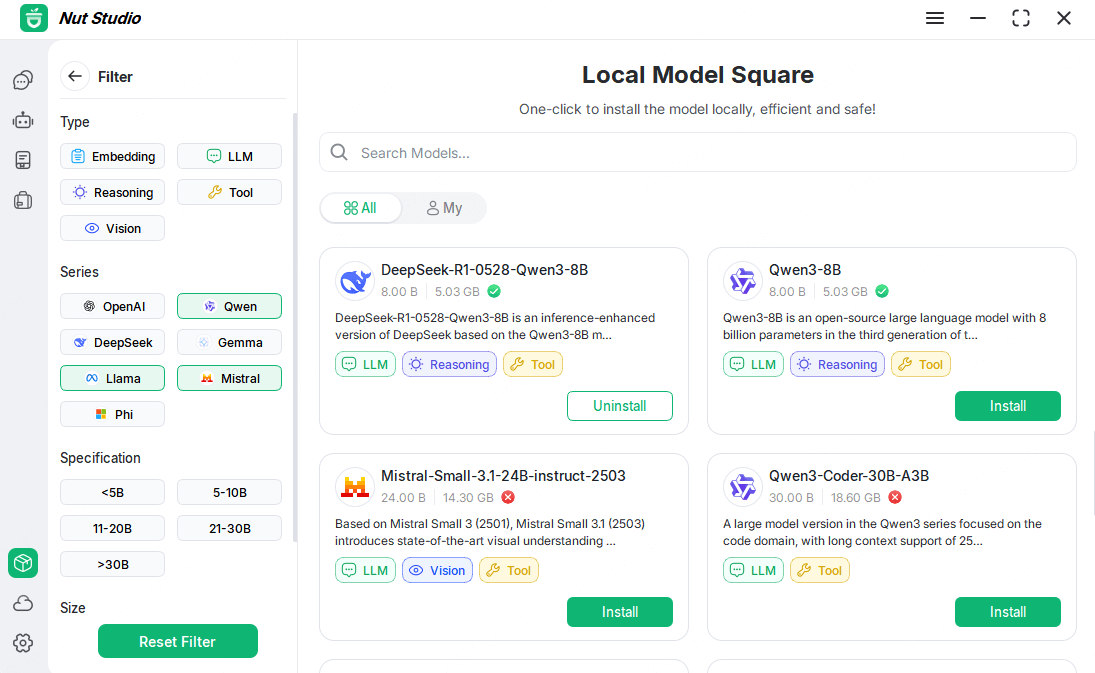

Nut Studio — Best Ollama Alternative for Windows

If you're on Windows and looking for an Ollama alternative that doesn't require coding, Nut Studio is a great pick. It gives you a full visual interface—no command line, no configs, no setup. Just open the app, pick a model, and click once to start. That's all it takes.

Nut Studio supports 50+ open-source models, including LLaMA 3, DeepSeek, Mistral, Phi, and Gemma. You can run them fully offline, or turn on internet access if you want features like web search or smart file reading.

It also comes with ready-to-use AI agents for common tasks—like writing, coding, studying, or chatting. You can turn any model into a personal AI assistant that works right on your PC.

- Download and launch 50+ top LLMs like LLaMA, DeepSeek, and Qwen — all in one place.

- Works fully offline. Use AI anytime — even with no Wi-Fi or mobile data.

- Build your own smart assistant by uploading PDFs, Word docs, slides, and more.

- Access top AI video models such as PixVerse, Veo 3, and Kling for unlimited creations.

- Comes with 100+ ready-to-use AI agent templates covering career, writing, translation, programming, emotions, life, and entertainment, all usable offline.

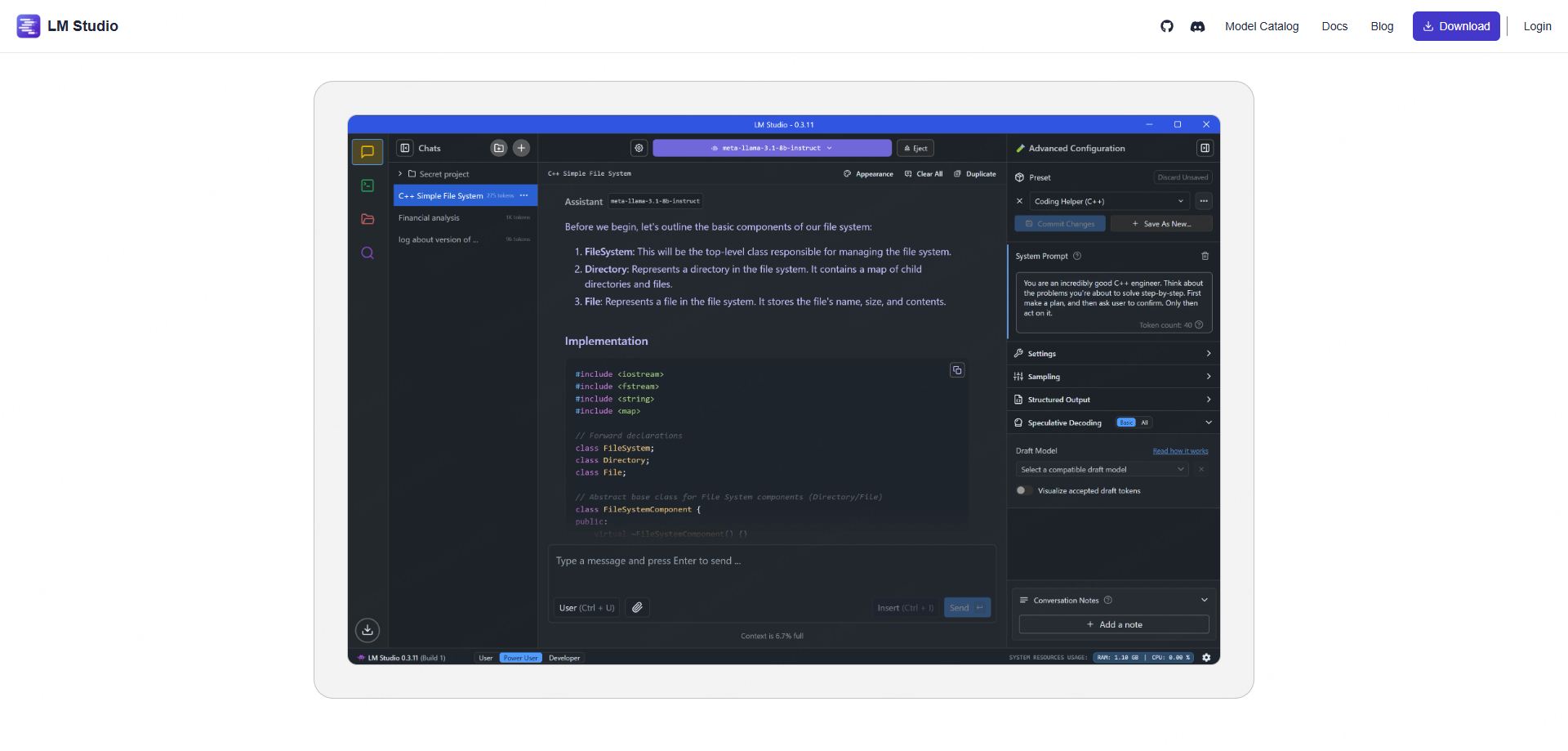

LM Studio — Top Ollama Alternatives for Mac

If you're using a Mac and want an Ollama alternative for macOS that feels more visual and interactive, LM Studio is a great option. It offers a clean, desktop-style interface that feels like ChatGPT—but running completely on your device.

LM Studio connects to Hugging Face, so you can discover and load models like LLaMA 3, Mistral, Phi, and many more. You can search by size, author, or architecture, then download and switch between them easily.

It also lets you customize the behavior of each model with system prompts, response settings, and stop tokens. You can even make the model act like a character and test how different models respond.
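To make those three settings concrete, here is a rough sketch of how a system prompt, response settings, and stop strings fit together in an OpenAI-style chat request. This is not LM Studio's own code. In the app you set these in the interface, and the sketch only assumes that a local server accepts this common request format. The model identifier and the helper name are hypothetical.

```python
import json


def build_chat_request(model, system_prompt, user_message,
                       temperature=0.7, max_tokens=256, stop=None):
    """Assemble an OpenAI-style chat payload with a persona and stop strings."""
    payload = {
        "model": model,
        "messages": [
            # The system prompt sets the character the model should play.
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        # Response settings: randomness and maximum reply length.
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    if stop:
        # Generation ends as soon as one of these strings is produced.
        payload["stop"] = list(stop)
    return payload


request = build_chat_request(
    model="example-model",  # hypothetical identifier
    system_prompt="You are a ship's captain. Answer in nautical terms.",
    user_message="How is the weather today?",
    temperature=0.9,
    stop=["\nUser:"],
)
print(json.dumps(request, indent=2))
```

Sending the same payload with a different `model` value is a simple way to compare how different models respond to the same character prompt.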

vLLM — Software Like Ollama for Linux

If you're running Linux and want software like Ollama, vLLM is one of the most powerful options available. But it's built for a different kind of user.

vLLM isn't a desktop app. It's an inference engine optimized for speed. It's designed to serve large language models (LLMs) by batching requests together, which means it can handle many requests at once with high throughput and low latency.
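To see why handling many requests at once raises throughput, consider the toy comparison below. It counts decoding steps under two simplified schemes: a static batch that waits for its longest request, and a continuous scheme where a finished request immediately frees its slot. This is an illustration under simplifying assumptions (one token per request per step, no prompt processing cost), not vLLM's actual scheduler, and the request lengths are made up.

```python
from collections import deque


def static_batching_steps(lengths, batch_size):
    """Each batch runs until its longest request finishes."""
    steps = 0
    for start in range(0, len(lengths), batch_size):
        steps += max(lengths[start:start + batch_size])
    return steps


def continuous_batching_steps(lengths, batch_size):
    """A finished request frees its slot for the next waiting request."""
    waiting = deque(lengths)
    active = []  # tokens still to generate for each running request
    steps = 0
    while waiting or active:
        # Fill free slots before the next decoding step.
        while waiting and len(active) < batch_size:
            active.append(waiting.popleft())
        # One step generates one token for every active request;
        # requests that had a single token left are now finished.
        active = [remaining - 1 for remaining in active if remaining > 1]
        steps += 1
    return steps


# Output lengths (in tokens) for four hypothetical requests.
lengths = [2, 8, 3, 8]
print(static_batching_steps(lengths, batch_size=2))      # 16
print(continuous_batching_steps(lengths, batch_size=2))  # 13
```

In this example the short requests no longer hold a slot idle while the long ones finish, so the same work completes in 13 steps instead of 16.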

The key to its performance is something called PagedAttention. It helps vLLM manage memory more efficiently, especially when dealing with long prompts or multiple users. Compared to Ollama, which is great for simple, local usage, vLLM is built for scenarios where speed and scalability matter most—like backend services or research servers.
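The core idea can be sketched with a toy allocator: instead of reserving one large contiguous region per request, the cache is cut into small fixed-size blocks, and each sequence keeps a table of the blocks it owns. The class below is a simplified illustration of that idea only. It stores no real tensors, and the class name, block size, and sequence ids are hypothetical rather than taken from vLLM.

```python
class PagedKVCache:
    """Toy allocator: each sequence maps to a list of fixed-size blocks."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}  # sequence id -> list of physical block ids
        self.lengths = {}       # sequence id -> tokens stored so far

    def append_token(self, seq_id):
        """Reserve space for one more token, taking a new block only when needed."""
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length % self.block_size == 0:  # existing blocks are full, or none yet
            if not self.free_blocks:
                raise MemoryError("no free KV-cache blocks")
            table.append(self.free_blocks.pop(0))
        self.lengths[seq_id] = length + 1

    def release(self, seq_id):
        """Return a finished sequence's blocks to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


cache = PagedKVCache(num_blocks=4, block_size=16)
for _ in range(20):  # a 20-token sequence needs two blocks of 16
    cache.append_token("a")
for _ in range(5):   # a 5-token sequence needs one block
    cache.append_token("b")
print(cache.block_tables)
```

Because blocks are handed out on demand and returned when a sequence finishes, memory is not tied up by worst-case reservations, which is what lets more users or longer prompts fit at once.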

It supports many of the same models as Ollama and Nut Studio, like LLaMA 3, DeepSeek, and Mistral, but running vLLM usually calls for a GPU, a Python or Docker environment, and some setup skills.

Ollama vs vLLM vs Nut Studio vs LM Studio — Which Local LLM Tool Should You Pick?

So far, we've looked at four popular tools—Ollama, Nut Studio, LM Studio, and vLLM—each built for different users and systems. But which one is right for you?

If you're just getting started with local AI tools, don't worry. You don't need to understand everything under the hood. The table below compares these tools in plain English—so you can quickly find the best fit based on what matters to you: interface, speed, ease of use, and model support.

| Feature / Tool | Ollama | vLLM | Nut Studio | LM Studio |
|---|---|---|---|---|
| Interface | CLI and limited GUI | CLI only | GUI (one-click, chat-style) | GUI |
| Model Library | Built-in website | Manual (via HF) | Pre-integrated & local | Hugging Face UI |
| Speed | Medium | Fastest | Fast (CPU-friendly) | Good (Apple-optimized) |
| Fine-Tuning Support | × | √ (manual setup) | × | × |
| Smart Agent Support | × | × | √ | × (basic prompt only) |
| Offline Capable | √ | √ | √ (full offline) | √ |
| Best For | Terminal users | Developers w/ GPU | Beginners, assistants | Mac users exploring AI |

Key Takeaways:

- If you're a beginner, want a local AI assistant, and hate using the terminal, go with Nut Studio.

- If you're on a Mac, like visual tools, and want to explore different models, LM Studio is a great fit.

- If you're a developer running Linux with a GPU, vLLM gives you unmatched speed and performance.

- If you're comfortable with the terminal and want simplicity, Ollama still works fine—especially on macOS.

For most users—especially if you're on Windows or just want something that works right away—Nut Studio is the easiest and most beginner-friendly Ollama alternative.

Want simplicity over CLI complexity? Nut Studio beats Ollama and others hands-down for beginner-friendly use.

FAQs About Ollama Alternatives

1. Is Ollama Safe?

Yes, Ollama is generally safe to use. It runs locally on your machine and doesn't require a cloud account. Once a model has been downloaded, it doesn't need an internet connection either. However, like any tool that runs background services, it's good to review what gets installed, especially if you're in a sensitive environment. If you prefer a tool with clearer control and no background processes, alternatives to Ollama like Nut Studio offer a cleaner experience for local use.

2. How to Install Ollama?

To install Ollama, go to ollama.com, download the installer for your OS (macOS or Windows), and follow the steps.

1. For macOS, unzip the download and move the app to Applications. For Windows, run the .exe installer.

2. Then open a terminal and start a model with ollama run followed by the model name, for example ollama run llama3.1.

If you're not comfortable with the command line, you might prefer apps like Ollama for Windows that come with a GUI, such as Nut Studio.

3. What are the best apps like Ollama for Windows?

For most Windows users, especially beginners, Nut Studio is the best alternative to Ollama I've tested. It offers one-click setup, a clean GUI, and supports local models like LLaMA, DeepSeek, and Mistral—all without using the terminal.

From my experience, it's the most accessible option if you just want a local AI that works out of the box. If you'd like to explore more options beyond Ollama, check out my full guide on Best AnythingLLM Alternatives.

4. Do I need a GPU to use Ollama or its alternatives?

No, a GPU is not required to use Ollama or most of its alternatives, but it helps. Tools like vLLM perform best with a GPU and are built for high-throughput tasks. On the other hand, Nut Studio and Ollama can run on regular CPUs, making them accessible even on mid-range laptops.
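Whether a model fits on a given laptop depends mostly on memory. A common rule of thumb is that the weights take roughly the parameter count times the bytes per weight. The sketch below applies that arithmetic. It is an estimate for the weights alone and ignores context cache and runtime overhead, so real usage will be higher. The 7B size and the bit widths are illustrative values, and the function name is hypothetical.

```python
def weight_memory_gb(params_billions, bits_per_weight):
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    # Billions of parameters times bytes per parameter gives billions of bytes.
    return params_billions * bytes_per_weight


for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: ~{weight_memory_gb(7, bits):.1f} GB")
```

By this estimate, a 7B model needs about 14 GB at 16-bit precision but only about 3.5 GB when quantized to 4 bits, which is why quantized models are the usual choice for CPU-only machines.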

5. What is the easiest tool to run LLMs locally?

The easiest tool to run LLMs locally is Nut Studio. It's designed for non-technical users who want a smooth experience—just download, pick a model, and start chatting. No setup, no code, no stress. It also supports full offline mode, so your data stays private.

Conclusion

If you're searching for the best alternatives to Ollama, your choice depends on what you value most—ease of use, interface, speed, or control. For Windows users who want a simple, GUI-based experience without any coding, Nut Studio stands out as the top pick. Mac users can explore LM Studio for a smooth and visual local LLM setup. Meanwhile, Linux power users who need speed and scalability often prefer vLLM.

No matter your skill level or platform, there's a great Ollama alternative ready to help you run local LLMs with confidence and privacy.
