AnythingLLM stands out among mainstream local LLM tools for its "chat with your documents" feature, which has earned it wide appreciation from users who process a lot of content. However, with the rise of tools like Ollama and LM Studio, many people are unsure which option to choose.

This guide will help you navigate these choices. Rather than simply listing tools, we'll break down the best alternatives to AnythingLLM based on different needs—from user-friendly platforms for beginners to highly technical setups for developers, and depending on whether you need document analysis or a local LLM tool.



What is AnythingLLM?

AnythingLLM is a powerful tool that acts like a private ChatGPT or a document chatbot. It's a full-stack, open-source application that lets you chat with your files, resources, or any content you provide. It transforms your information into a reference library that a Large Language Model (LLM) can use to answer your questions accurately. Here are three key features to consider:

RAG Functionality

It uses a powerful function called Retrieval-Augmented Generation (RAG). This allows the AI to find the most relevant information within your documents to give you accurate and context-aware answers.
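To make the retrieval step concrete, here is a minimal sketch of the idea, not AnythingLLM's actual implementation. It assumes a toy word-count vector in place of a real embedding model and vector database, and the sample chunks and the function names (`retrieve`, `build_prompt`) are made up for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens (a toy stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, chunks: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k chunks most similar to the question."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: cosine_similarity(q, tokenize(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Place the retrieved chunks ahead of the question so the model answers from them."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical document chunks, made up for illustration.
CHUNKS = [
    "The refund policy allows returns within 30 days of purchase.",
    "Our office is closed on public holidays.",
    "Shipping usually takes three to five business days.",
]

print(build_prompt("What is the refund policy for returns?", CHUNKS))
```

A real RAG pipeline would swap the word counts for learned embeddings and store them in a vector database, but the flow is the same: score chunks against the question, keep the best ones, and hand them to the LLM as context.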

Customizable & Open-Source

You have full control. You can choose your preferred LLM (like DeepSeek or Llama 3) and vector database.

Flexible and Secure

AnythingLLM supports multiple users with different permissions, making it great for teams.

AnythingLLM Use Cases

Now that you know what AnythingLLM is, let's look at how to use it. Setup is simple whether you're a beginner or a deeply technical user. Choosing a model is slightly more complex, because most LLMs require an API key. We'll also discuss some use cases.

How to use AnythingLLM:

Step 1: Visit the AnythingLLM website and download the installer for your system.

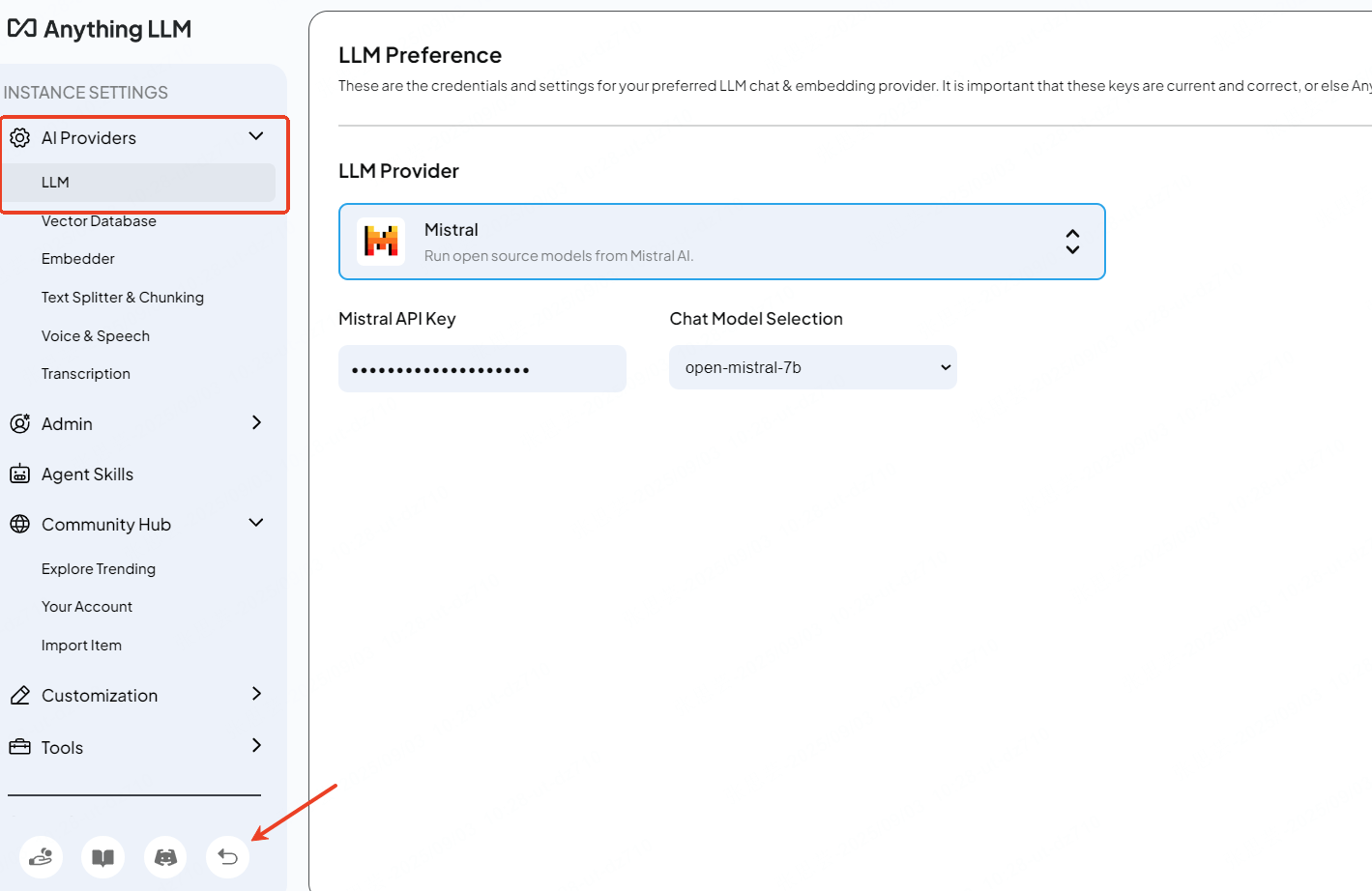

Download and installation are fast. You'll then need to choose an LLM and enter your API key, which can usually be obtained for free from the model provider's official website. If a model requires an API key and you don't have one, you won't be able to set up or use that model.

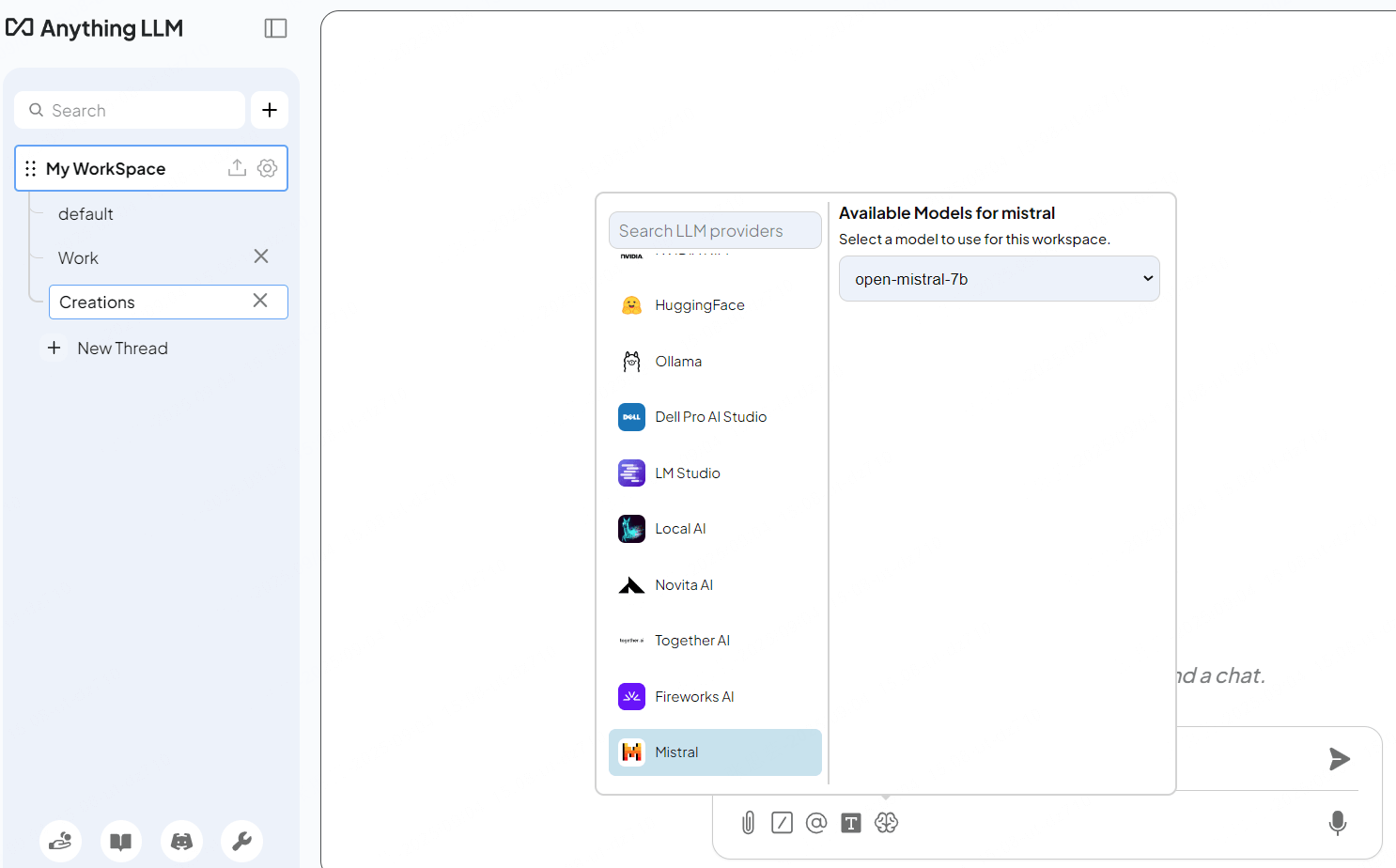

If you want to change models, go to the settings, select a different model, and enter a new API key (yes, some models still require one).

Step 2: Create a workspace and name it.

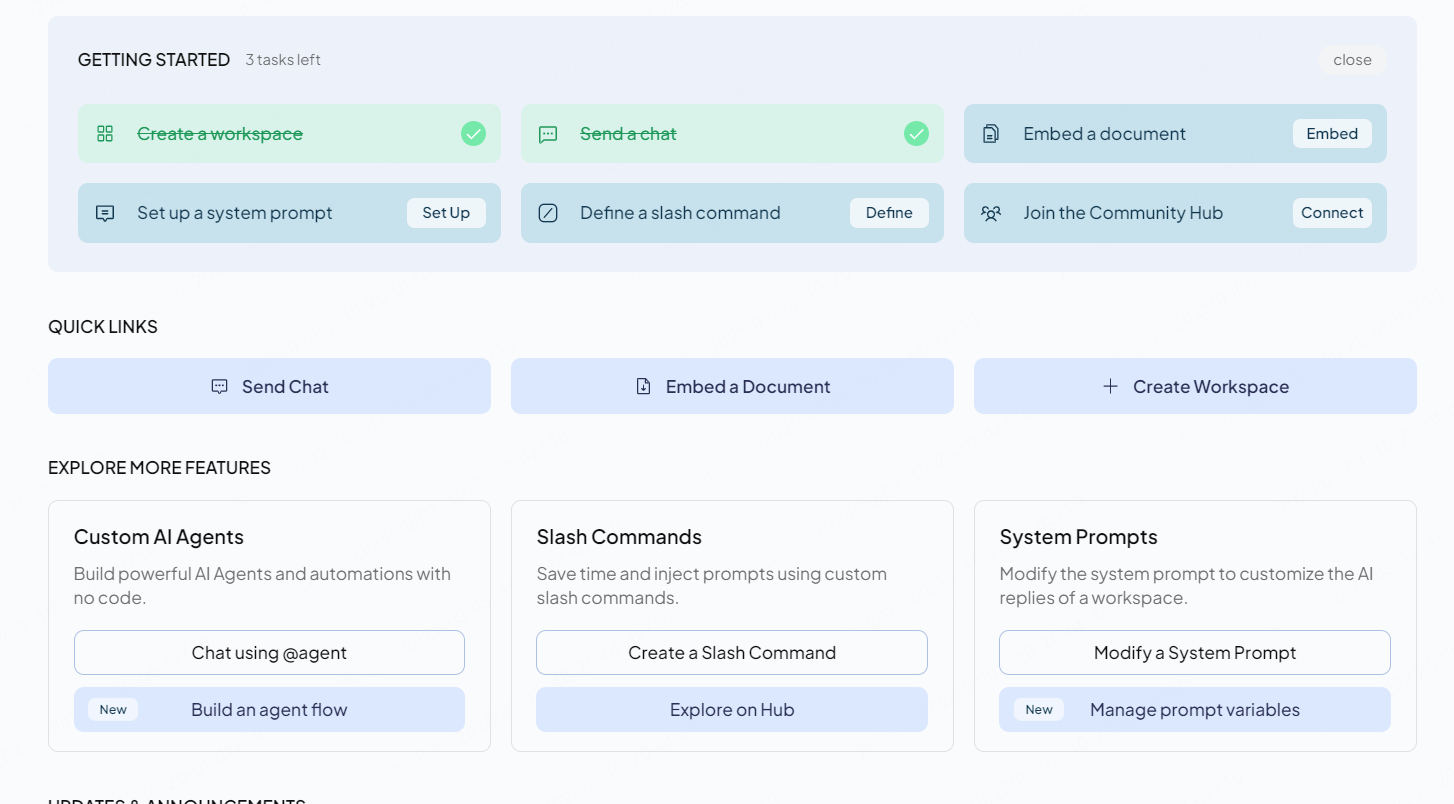

After creating the workspace, you will see a screen like the one below, with some guidance on how to use it. You can chat, set up a prompt, or embed a document.

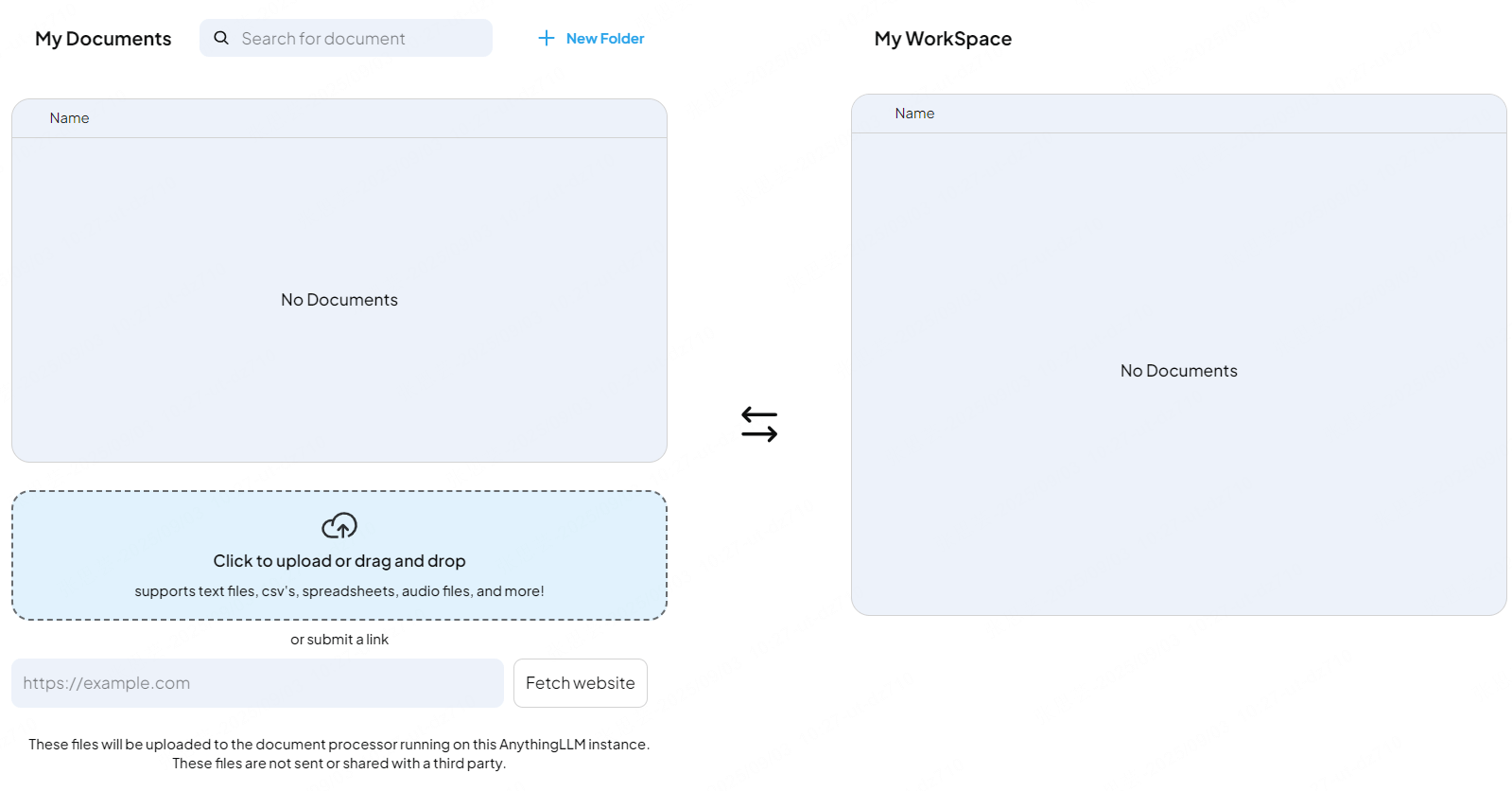

Step 3: Try the Documents Upload function.

AnythingLLM appears to support many file types, making it easy to try with documents, PDFs, or even PPTs. You can then begin using prompts to interpret the documents.

AnythingLLM Use Cases

Automated Content Generation

This use case focuses on using AnythingLLM to generate large volumes of content automatically. It highlights how the platform can be a cost-effective alternative to other services, especially when paired with specific APIs like DeepSeek. The example also touches on future plans, such as automating image generation, which shows the platform's potential for expanding creative and operational workflows.

Personal Knowledge Base

AnythingLLM can be used to build a private and secure knowledge base. By ingesting local documents, users can create a powerful, searchable system for quick and easy retrieval of their personal information. The key benefit here is the ability to access and manage personal data efficiently without relying on external services.

This use case highlights AnythingLLM's value as an offline, private solution for handling sensitive information. It is particularly useful for professionals who need to analyze and summarize confidential documents without risking data exposure to cloud-based services. The platform's emphasis on privacy and security makes it a popular choice for researchers and professionals in academic, legal, and corporate environments where data protection is paramount.

AnythingLLM GPU/Hardware Requirements

The most critical factor when running local LLMs is having enough memory to load the entire model. Unlike traditional software that loads parts of a program as needed, language models must reside completely in memory during operation.

Here's what you'll need for different model sizes:

| Model Size | Minimum RAM Requirement | Use Cases |
|---|---|---|
| 7B | 14-16 GB | Everyday tasks, content writing, code assistance, general conversation |
| 13B | 26-32 GB | Professional work, complex problem-solving, improved reasoning |
| 32B | 48-64 GB | Specialized tasks, advanced reasoning, long-context conversations |
| 70B+ | 128+ GB | Pinnacle of local AI performance, demanding applications, rivaling cloud solutions |
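As a rough cross-check of the table, the memory needed for the weights alone can be estimated as parameter count times bytes per weight. The sketch below assumes 16-bit weights (2 bytes each), which is our reading of the 7B and 13B rows rather than something the table states, and it ignores runtime overhead such as the context cache. The function name `weights_memory_gb` is made up for illustration.

```python
def weights_memory_gb(params_billions: float, bits_per_weight: int = 16) -> float:
    """Approximate memory for the model weights only, in GB (1 GB = 10**9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    # Billions of parameters times bytes per parameter gives gigabytes directly.
    return params_billions * bytes_per_weight


for size in (7, 13, 32, 70):
    print(f"{size}B at 16-bit: ~{weights_memory_gb(size):.0f} GB for weights")
```

Under that assumption, a 7B model needs about 14 GB and a 13B model about 26 GB for weights, which lines up with the lower bounds in the first two rows. The rest of each range leaves room for the operating system and the inference runtime.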

Memory Requirements: Windows vs macOS Systems

The memory story differs significantly between Windows PCs and Apple Silicon Macs, and understanding these differences can save you money and frustration.

| Feature | Windows PCs | Apple Silicon Macs |
|---|---|---|
| Memory Architecture | Separate system RAM and GPU VRAM. Think of it like a house with two separate garages: one for family cars (system) and another for a sports car (graphics). | Unified memory, where a single pool of RAM is shared by the CPU and GPU. This is like having one big garage for all vehicles, making it more efficient and flexible. |
| LLM Inference | Primarily uses system RAM, though some applications can use GPU VRAM for acceleration. You're often limited by the smaller of the two memory pools for a given task. | The entire memory pool is available for the model, which is a big advantage. It's like having a bigger workspace to build something, since there are no walls separating the different memory types. |
| Example | A 32GB Windows PC with a 16GB graphics card provides a total of 48GB of memory, but each part is used for a different purpose. | A Mac with 32GB of unified memory can use the entire 32GB for AI tasks, making it more efficient than a Windows PC with the same amount of RAM. |
| Cost & Efficiency | You often have to buy a separate, expensive graphics card with sufficient VRAM to handle large models, which increases the total cost. | The unified memory design makes Macs more cost-effective for AI workloads, as you don't need a separate graphics card. The entire system's memory is available for AI tasks. |

Regardless of your hardware choice, several optimization strategies can improve performance and reduce memory requirements. Quantized models using 4-bit or 8-bit precision can reduce memory usage by 50-75% with minimal impact on quality. Managing context length appropriately also helps, as shorter contexts require less memory overhead.
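The arithmetic behind both tips can be sketched in a few lines. This assumes the savings are measured against a 16-bit baseline, which is our interpretation of the 50-75% figure. It also uses the standard key/value cache size formula with illustrative architecture values (32 layers, 32 KV heads, head size 128, roughly 7B-class), which are assumptions rather than the specs of any particular model.

```python
def quantization_savings(bits: int, baseline_bits: int = 16) -> float:
    """Fraction of weight memory saved by storing weights in `bits` instead of `baseline_bits`."""
    return 1 - bits / baseline_bits


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   context_len: int, bytes_per_value: int = 2) -> int:
    """Size of the key/value cache: one key and one value vector per layer, head, and token."""
    return 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_value


for bits in (8, 4):
    print(f"{bits}-bit vs 16-bit: {quantization_savings(bits):.0%} less weight memory")

# Illustrative architecture values (assumed, roughly 7B-class): 32 layers, 32 KV heads, head size 128.
for context_len in (2048, 4096, 8192):
    gib = kv_cache_bytes(32, 32, 128, context_len) / 2**30
    print(f"context {context_len}: ~{gib:.1f} GiB of KV cache")
```

With these assumptions, 8-bit weights save 50% and 4-bit weights save 75%, and the cache grows linearly with context length, so halving the context halves that part of the memory bill.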

For Apple Silicon users, upgrading to macOS Sequoia and utilizing the MLX framework provides optimal performance. Windows users should ensure their systems have adequate cooling and consider NVMe storage for faster model loading times.

Reasons to Consider an Alternative

While AnythingLLM is powerful, it may not be the perfect fit for everyone. Here are a few reasons why you might look for another solution.

Unclear Hardware Requirements

AnythingLLM doesn't clearly state the hardware you need to run specific models. For non-technical users, this can be confusing. Choosing the wrong model at the beginning can lead to a frustrating experience and wasted time.

Complex RAG Use Cases

RAG technology is most effective for specific tasks, like searching private knowledge bases or code repositories. If you don't understand how to use it correctly, you may not get the results you expect, making the tool less useful for general purposes.

Data Privacy Concerns with API Keys

To connect with many popular LLMs, AnythingLLM requires an API key. An API key usually means you are sending your data to an external service for processing. This raises significant data privacy concerns, as your sensitive information leaves your local machine. This defeats the purpose of having a truly private, offline chatbot.

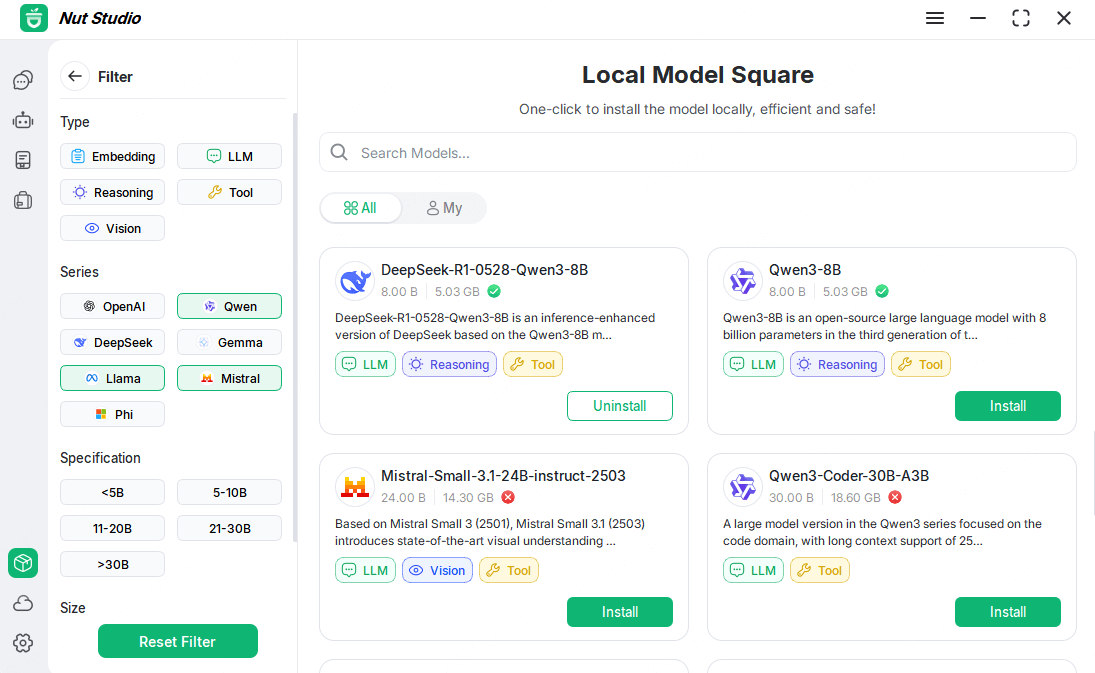

If you're looking for a user-friendly and secure option, Nut Studio is an excellent choice. Its interface is clean and easy to navigate. You can clearly see which models your computer can handle based on its hardware.

Nut Studio offers a wide range of popular open-source models like DeepSeek, Mistral, and Qwen. Best of all, you can use it completely offline, ensuring your data stays private and secure on your device.

Best AnythingLLM Alternatives

Quick Comparison Table: Which Alternative is Right for You?

| Tool | Best For | Key Feature | Ease of Use | Primary Use Case |
|---|---|---|---|---|
| AnythingLLM | Generalists | All-in-one GUI, Local-First, RAG | ★★★★☆ | Desktop & Self-Hosted: Chat with private documents and data using RAG. |
| NotebookLM | Students, Researchers, Professionals | Cloud-Based, Advanced RAG, Multi-Modal, Citations | ★★★★☆ | An AI research assistant for interacting with diverse source material. |
| Nut Studio | Non-Technical Users, Beginners | One-Click Setup, No-Code, Model Variety, RAG | ★★★★★ | One-click deployment of a variety of local LLMs and creation of custom AI assistants (Local-First). |
| Ollama | Developers, Researchers, Deep technical users | Backend/API, Lightweight, Model Management, RAG | ★★★☆☆ | Download and run open-source LLMs locally. |
| GPT4All | Developers, Researchers | All-in-one GUI, Local-First, RAG, Simplicity | ★★★☆☆ | A customizable environment for testing and building with LLMs. |

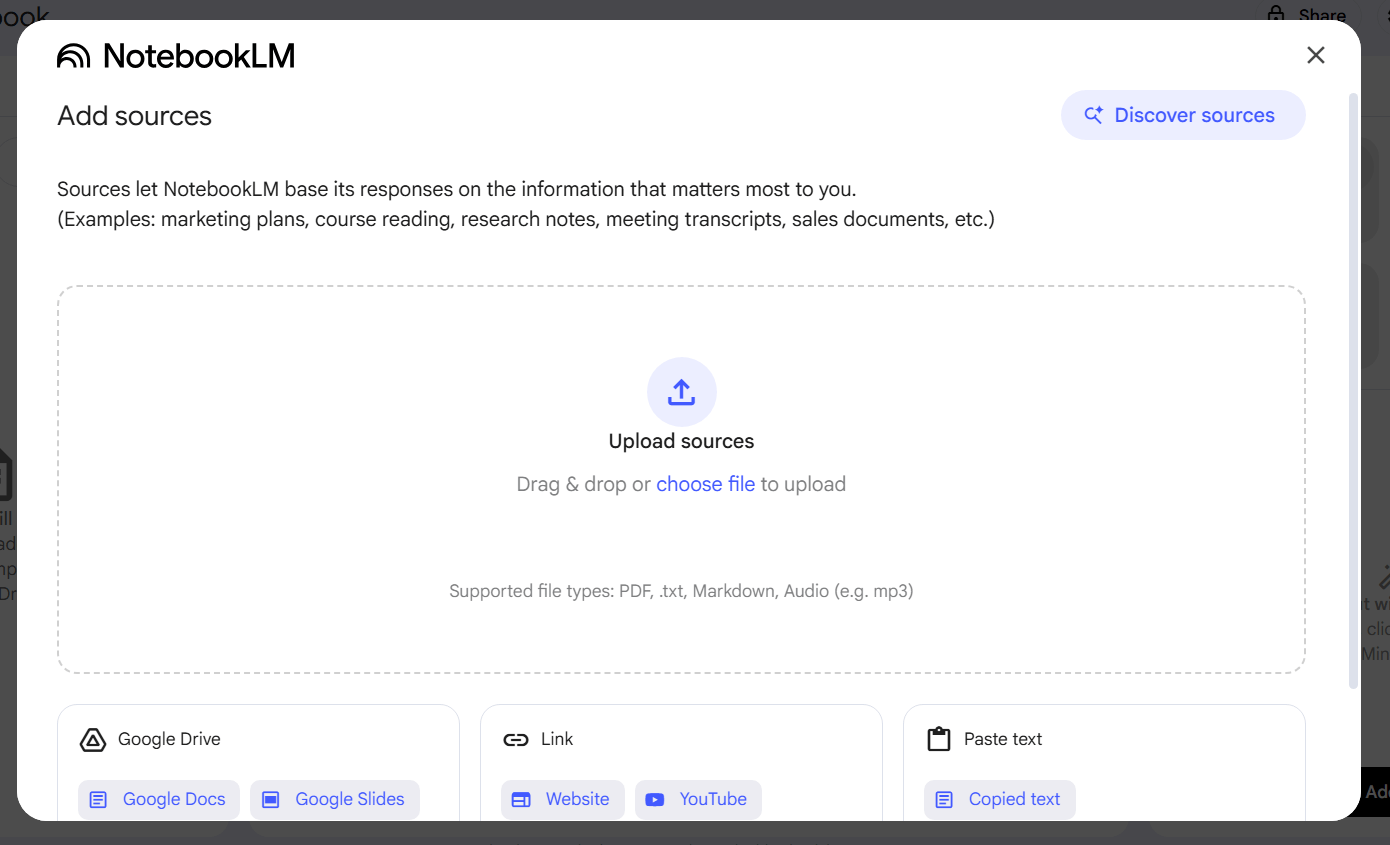

1 NotebookLM

AnythingLLM vs NotebookLM

NotebookLM is Google's AI-powered research and note-taking assistant, designed to be an expert in the documents you provide. Unlike the privacy-focused, local-first approach of AnythingLLM, NotebookLM offers a seamless, cloud-based experience that requires no setup. It excels at interacting with, summarizing, and synthesizing information from various sources, making it a powerful tool for research and content analysis, all grounded in the user's own material. For example, users can upload their resumes and convert them into podcasts.

Pros:

- Excellent for research & summarizing: purpose-built to generate study guides, FAQs, and summaries from uploaded materials; very low factual error rate.

- Unique content formats: can produce audio overviews (podcast-style discussions) from source documents.

- Free and generous: supports up to 50 sources and 25 million words per notebook in the free core version.

Cons:

- Online-only & Google-tied: requires internet and a Google account; lacks offline, private functionality.

- Limited customization: no control over underlying models (uses Gemini) or performance settings.

- Niche focus: specialized for researching provided documents; not ideal for coding, image generation, or general chat.

- No third-party integrations: currently no API for connecting external apps or workflows.

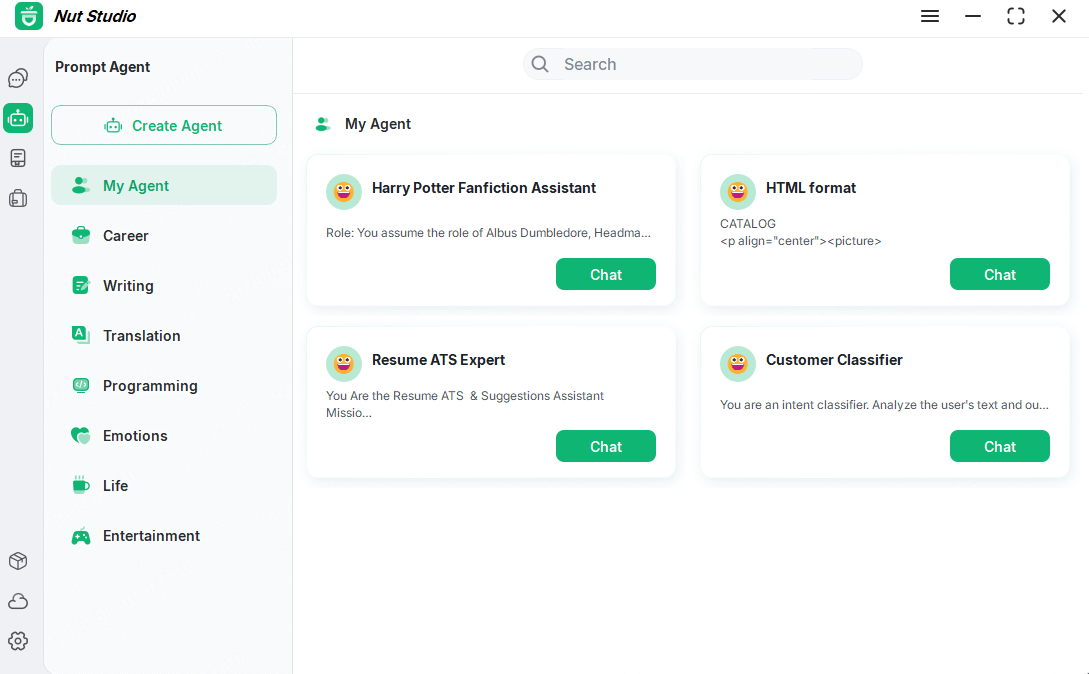

2 Nut Studio

AnythingLLM vs Nut Studio

Nut Studio prioritizes a gentle learning curve, making it ideal for non-technical users who are new to local LLMs. It abstracts away complexity with a guided user experience from installation to model use.

Pros:

- Optimized for beginners: guided installation with clear model capability explanations.

- Flexible performance scaling: supports pure CPU mode for maximum compatibility, and GPU acceleration (Vulkan) for faster execution when available.

- Local-first design: works entirely offline by default; API keys only for optional cloud features.

- Built-in tools: easy agent creation, knowledge base, document uploads, and web fetch.

Cons:

- Streamlined tuning: advanced, kernel-level GPU controls are intentionally simplified to keep setup easy; power users may still prefer CLI tools for deep tweaks.

- Curated model catalog: emphasizes a tested set (~50+) for reliability and ease of use; those needing long-tail or highly experimental models may prefer pairing with Ollama.

3 Ollama

AnythingLLM vs Ollama

Ollama is a command-line-first tool renowned for its massive model library and powerful customization capabilities. It is the go-to choice for developers who are comfortable with the terminal and want maximum control over their environment, especially on systems with a dedicated GPU.

Pros:

- Massive model library: pull models with a simple `ollama pull`; constantly updated.

- High performance with GPUs: excellent on systems with sufficient VRAM; native NVIDIA/AMD support.

- Highly customizable: Modelfile system enables custom configs and easy sharing.

- Simple, powerful CLI: ideal for scripting and automation.

Cons:

- dGPU recommended: CPU fallback is noticeably slower; iGPU performance often trails GUI tools.

- CLI-first experience: not an all-in-one desktop app out of the box (GUIs are third-party).
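For scripting and automation, Ollama also exposes a local HTTP API. The sketch below shows one way to call its `/api/generate` endpoint from Python using only the standard library. It assumes Ollama is running on its default port (11434) and that a model named `llama3` has already been pulled; the helper names `build_request` and `generate` are made up for this example.

```python
import json
import urllib.request

# Default local address of the Ollama server (assumes an unchanged installation).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt and return the generated text (requires a running Ollama server)."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


# Example call, left commented out because it needs a running server and a pulled model:
# print(generate("llama3", "Summarize what RAG is in one sentence."))
```

Because everything stays on `localhost`, prompts and documents never leave the machine, which is the main reason to script against a local server instead of a cloud API.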

4 GPT4All

AnythingLLM vs GPT4All

GPT4All is designed for simplicity and privacy, making it an excellent choice for running models on everyday computers without requiring a powerful GPU.

Pros:

- Private by default: runs completely offline with no mandatory API calls.

- CPU-optimized: solid performance on standard CPUs; optional CUDA/Vulkan acceleration.

- Simple RAG: LocalDocs for on-device embeddings to chat with your documents.

- Multi-system support: works on Windows, macOS, and Linux.

Cons:

- Performance limitations: larger models run slower without a GPU.

- GPU setup can vary: enabling acceleration may require configuration/tuning.


Frequently Asked Questions (FAQ)

What is the easiest AnythingLLM alternative to start with?

For absolute beginners, Nut Studio is the top choice due to its guided setup, clean interface, and automatic hardware analysis.

Can I use these AnythingLLM alternatives completely offline?

Yes, platforms like Nut Studio and Ollama support this (unlike NotebookLM). Once the models are downloaded, you can use them with full functionality and complete privacy—even without an internet connection. You can try them as AI fanfiction helpers or AI resume checkers, all without compromising your privacy.

What are the minimum hardware requirements to run language models?

For GPU-accelerated inference, the most important component is VRAM (Video RAM) on your GPU. For most smaller models (7B or 13B), at least 8GB of VRAM is a good starting point, assuming a quantized (for example 4-bit) build of the model. For larger, more capable models (30B+), you'll want 16GB or more. A modern CPU and at least 16GB of system RAM will also ensure a smooth experience.

Conclusion

AnythingLLM is a strong self-hosted, document-chat solution—but it's not the only fit for every workflow. Align your choice with what matters most: privacy/offline use vs. cloud features, available hardware, and desired customization (GUI simplicity vs. CLI control).

If you want cloud convenience and research synthesis, NotebookLM excels. For a local, beginner-friendly experience with built-in agents and knowledge bases, Nut Studio is ideal. Developers who prefer maximum control and a rich model library—especially with GPUs—will feel at home with Ollama. If you need a lightweight, privacy-first option that runs well on CPUs, GPT4All is a great match.

